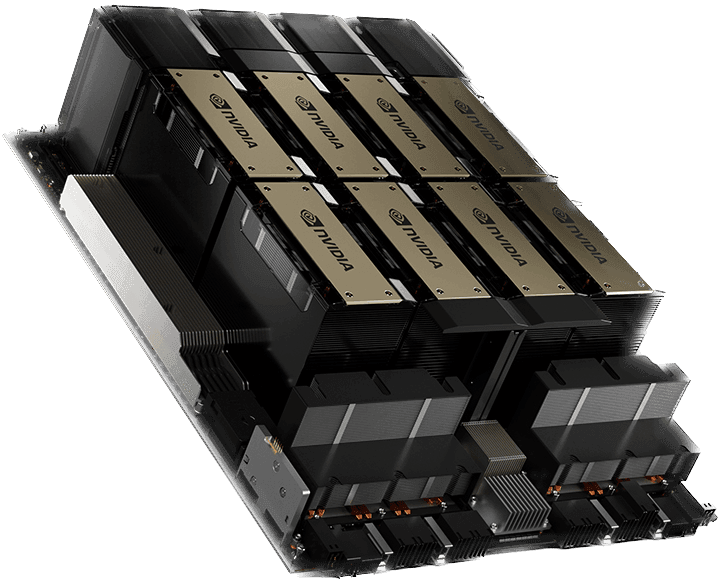

NVIDIA H200

A cutting-edge GPU built for large language model research, low-latency inference, and mixture-of-experts model development.

Built for teams that run large language models and need high speed and efficiency.

Powerful Features to Build & Scale AI Applications

Trusted by 1,000+ AI startups, labs and enterprises.

Competitive GPU for AI workloads

Ideal use cases for the NVIDIA H200 GPU

Learn how the NVIDIA H200 delivers strong performance for real-time AI inference, advanced deep learning projects, and demanding computational workloads across many fields.

AI Inference

Deploy production-ready AI models with the NVIDIA H200's optimized inference capabilities. Fourth-generation Tensor Cores and 141 GB of high-bandwidth HBM3e memory deliver consistent low-latency responses for real-time applications, from conversational AI to computer vision systems serving millions of users.

AI Training

Accelerate model development with the H200's training performance. Native FP8 precision and Transformer Engine support increase training throughput for large language models, while the large memory capacity lets bigger models and batch sizes fit on fewer GPUs.
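As a rough illustration of why memory capacity and FP8 matter, the sketch below estimates how much VRAM a model's weights alone occupy at different precisions and the minimum number of 141 GB GPUs needed to hold them. It is a lower bound under stated assumptions: it counts only weights (1 byte per parameter for FP8, 2 for BF16) and ignores activations, KV cache, gradients and optimizer states, which dominate memory during training. The model sizes used are arbitrary examples.

```python
import math

# Bytes per parameter for common weight formats.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}

# Per-GPU VRAM from the instance table (141 GB per H200).
H200_VRAM_GB = 141


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Memory needed only to store the weights, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: int, dtype: str, vram_gb: float = H200_VRAM_GB) -> int:
    """Lower bound on GPU count: combined VRAM must at least hold the weights.

    Assumption: ignores activations, KV cache, gradients, optimizer states and
    framework overhead, so real deployments need more headroom than this.
    """
    return math.ceil(weight_memory_gb(num_params, dtype) / vram_gb)


if __name__ == "__main__":
    for params in (70_000_000_000, 405_000_000_000):
        for dtype in ("bf16", "fp8"):
            gb = weight_memory_gb(params, dtype)
            gpus = min_gpus_for_weights(params, dtype)
            print(f"{params / 1e9:.0f}B params, {dtype}: {gb:.0f} GB of weights, >= {gpus} GPU(s)")
```

By this estimate, a 70B-parameter model in BF16 needs about 140 GB for its weights, which nominally fits on one 141 GB GPU but leaves almost nothing for the KV cache; in FP8 the same weights take about 70 GB. A 405B-parameter model needs at least 6 GPUs in BF16 and 3 in FP8.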

Research Computing

Advance scientific discovery with the H200's compute performance. From molecular dynamics simulations to climate modeling, researchers use its 4.8 TB/s of memory bandwidth and processing power to solve complex problems and shorten time-to-insight.

Instances That Scale With You

Find the right instance for your needs. Flexible, transparent, and backed by a powerful API to help you scale effortlessly.

| GPU model | GPUs | CPUs | RAM (GB) | VRAM (GB) | On-demand pricing | Reserved pricing |
|---|---|---|---|---|---|---|
| NVIDIA H200 | 1 | 44 | 182 | 141 | $5.99/hr | from $2/hr/GPU |
| NVIDIA H200 | 2 | 88 | 370 | 282 | $11.98/hr | from $2/hr/GPU |
| NVIDIA H200 | 4 | 176 | 740 | 564 | $23.96/hr | from $2/hr/GPU |
| NVIDIA H200 | 8 | 176 | 1450 | 1128 | $47.92/hr | from $2/hr/GPU |
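To compare the two pricing models, the sketch below turns the hourly rates in the table into monthly costs and a break-even point. It rests on assumptions that are not stated on this page: a 730-hour month, reserved capacity billed for every hour of the month whether used or not, and the lowest advertised reserved rate ("from $2/hr/GPU"). Actual reserved rates depend on term and commitment, so substitute the rate you are quoted.

```python
from decimal import Decimal

# Rates taken from the instance table.
ON_DEMAND_PER_GPU_HR = Decimal("5.99")  # 1x H200 on-demand: $5.99/hr
RESERVED_PER_GPU_HR = Decimal("2")      # "from $2/hr/GPU": lowest advertised reserved rate

# Assumption: an average month of 730 hours (24 * 365 / 12).
HOURS_PER_MONTH = 730


def on_demand_monthly(num_gpus: int, hours_used: int = HOURS_PER_MONTH) -> Decimal:
    """On-demand cost, billed only for the hours actually used."""
    return ON_DEMAND_PER_GPU_HR * num_gpus * hours_used


def reserved_monthly(num_gpus: int) -> Decimal:
    """Reserved cost, assuming every hour of the month is billed whether used or not."""
    return RESERVED_PER_GPU_HR * num_gpus * HOURS_PER_MONTH


def breakeven_hours_per_month() -> Decimal:
    """Monthly usage above which reserved becomes cheaper than on-demand.

    Independent of GPU count, because both prices scale linearly per GPU.
    """
    return RESERVED_PER_GPU_HR * HOURS_PER_MONTH / ON_DEMAND_PER_GPU_HR


if __name__ == "__main__":
    for gpus in (1, 2, 4, 8):
        print(f"{gpus}x H200: on-demand ${on_demand_monthly(gpus):,.2f}/mo, "
              f"reserved from ${reserved_monthly(gpus):,.2f}/mo")
    print(f"Break-even: {breakeven_hours_per_month():.1f} h/month")
```

Under these assumptions, a full month on an 8x H200 instance costs $34,981.60 on-demand versus $11,680 at the lowest reserved rate, and reserved pricing comes out ahead once usage exceeds roughly 244 hours per month, about a third of the month.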