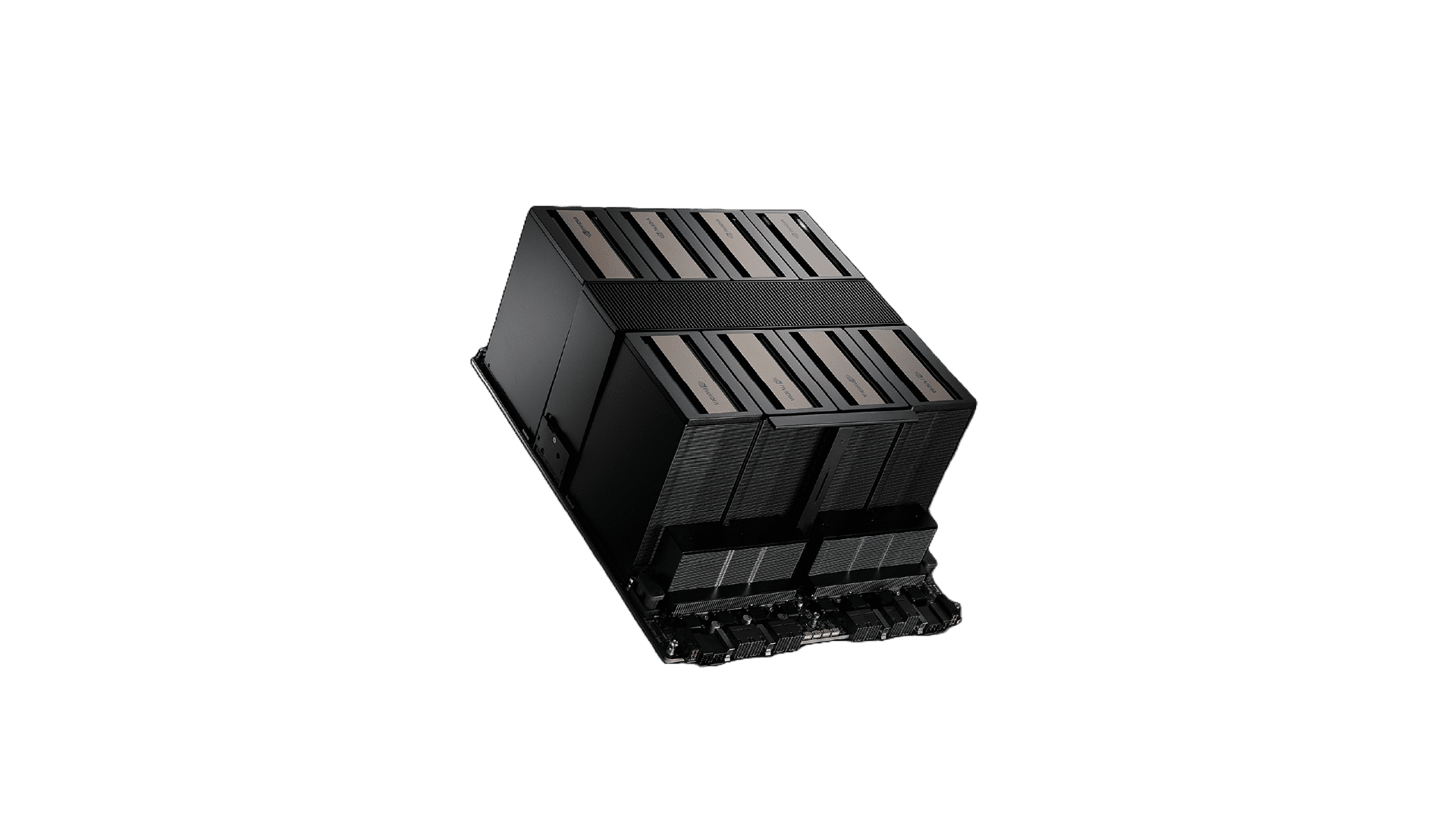

NVIDIA B300

Next-generation clusters optimized for large-scale LLM training, inference, and complex mixture-of-experts model development workflows.

Built for teams running massive language models with breakthrough speed and efficiency.

Powerful Features to Build and Scale AI Applications

Trusted by 1,000+ AI startups, labs and enterprises.

The best GPU for any workload

Ideal use cases for the NVIDIA B300 GPU

Discover how NVIDIA B300 accelerates AI inference, deep learning workflows, and high-performance computing applications across industries.

AI Inference

AI teams use the NVIDIA B300 to deliver unprecedented inference performance for large language models and complex multimodal applications. With up to 18x faster processing and support for FP4 precision, B300 enables real-time responses from trillion-parameter models at massive scale.

Deep Learning

The NVIDIA B300 revolutionizes deep learning workflows with the Blackwell Ultra architecture and 288 GB of HBM3e memory per GPU. Data scientists can train foundation models dramatically faster and experiment with model sizes and architectures that were previously out of reach.

High Performance Computing

From molecular dynamics to climate modeling and computational fluid dynamics, B300 transforms scientific computing with 14.4 TB/s of total NVLink bandwidth across an 8-GPU node (1.8 TB/s per GPU) and optimized precision formats. Organizations can run complex simulations faster and reach breakthrough discoveries sooner than ever before.

Instances That Scale With You

Find the right instance for your needs. Flexible, transparent, and backed by a powerful API to help you scale effortlessly.

| GPU model | GPUs | CPU | RAM (GB) | VRAM (GB) | On-demand pricing | Reserved pricing |
|---|---|---|---|---|---|---|
| NVIDIA B300 | 1 | 30 | 275 | 288 | $9.99/hr | Contact Sales |
| NVIDIA B300 | 2 | 60 | 550 | 576 | $19.98/hr | Contact Sales |
| NVIDIA B300 | 4 | 120 | 1100 | 1152 | $39.96/hr | Contact Sales |
| NVIDIA B300 | 8 | 240 | 2200 | 2304 | $79.92/hr | Contact Sales |
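The on-demand rates in the table scale linearly at $9.99 per GPU-hour, so budgeting a job is simple multiplication. The sketch below is a rough estimate under an assumption not stated on this page: billing is purely hourly, with no storage, network, or minimum-term charges. The names `ON_DEMAND_USD_PER_HOUR` and `estimate_cost` are hypothetical and illustrative only, not part of any provider API.

```python
# On-demand hourly rates (USD) copied from the table, keyed by GPU count.
# Assumption: pure hourly billing with no storage, egress, or minimum-term fees.
ON_DEMAND_USD_PER_HOUR = {1: 9.99, 2: 19.98, 4: 39.96, 8: 79.92}


def estimate_cost(gpus: int, hours: float) -> float:
    """Return the estimated on-demand cost in USD, rounded to cents."""
    if gpus not in ON_DEMAND_USD_PER_HOUR:
        raise ValueError(
            f"no B300 instance with {gpus} GPUs; choose from {sorted(ON_DEMAND_USD_PER_HOUR)}"
        )
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return round(ON_DEMAND_USD_PER_HOUR[gpus] * hours, 2)


if __name__ == "__main__":
    # Example: a 72-hour training run on an 8-GPU instance.
    print(estimate_cost(8, 72))  # prints 5754.24
```

Reserved pricing is quoted by sales, so it is deliberately left out of the estimate.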