Introduction to Nvidia L40S: Features and Specifications

Introduction

In modern computing, the emergence and evolution of Graphics Processing Units (GPUs) have ushered in a transformative era. Initially popularized by Nvidia in 1999 with its GeForce 256, GPUs were primarily known for handling graphics-heavy tasks such as rendering 3D scenes. They have since moved well beyond those initial applications and become pivotal in fields like artificial intelligence (AI), high-performance computing (HPC), and cloud computing.

The true power of GPUs lies in parallel processing, which makes them far faster than traditional Central Processing Units (CPUs) on workloads that can be split into many independent pieces. For instance, while a single CPU might take several years to process a large set of high-resolution images, a few GPUs can accomplish this within a day. This efficiency stems from the GPU’s architecture, which runs thousands of computations simultaneously and is particularly beneficial for tasks that involve repetitive calculations.

As technology advanced, the scope of GPU applications expanded significantly. In 2006, Nvidia introduced CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model. This innovation enabled developers to exploit the parallel computation capabilities of Nvidia’s GPUs more efficiently. CUDA lets a complex computational problem be divided into smaller, manageable segments, each of which can be processed independently and in parallel.
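The idea of dividing a problem into independent segments can be sketched without a GPU. The snippet below is only a CPU-side analogy in Python, not CUDA code: it splits a list into chunks, processes each chunk in its own worker, and combines the partial results. The function names (`chunked`, `partial_sum_of_squares`, `parallel_sum_of_squares`) and the chunk size are hypothetical choices made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, chunk_size):
    """Split a list into consecutive chunks of at most chunk_size items."""
    return [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]


def partial_sum_of_squares(chunk):
    """Work done independently on one chunk (loosely analogous to one block of GPU threads)."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, chunk_size=4, workers=4):
    """Process every chunk independently, then combine the partial results."""
    chunks = chunked(values, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    data = list(range(10))
    # The chunked result matches the straightforward sequential computation.
    print(parallel_sum_of_squares(data) == sum(x * x for x in data))
```

No chunk depends on any other, which is the property that lets a GPU spread the same kind of work across thousands of threads.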

The partnership between Nvidia and Red Hat OpenShift further exemplifies the growing significance of GPUs. This collaboration simplified the integration of CUDA with Kubernetes, facilitating the development and deployment of applications. Red Hat OpenShift Data Science (RHODS) further capitalizes on this by simplifying GPU usage for data science workflows, allowing users to customize their GPU requirements for data mining and model processing tasks.

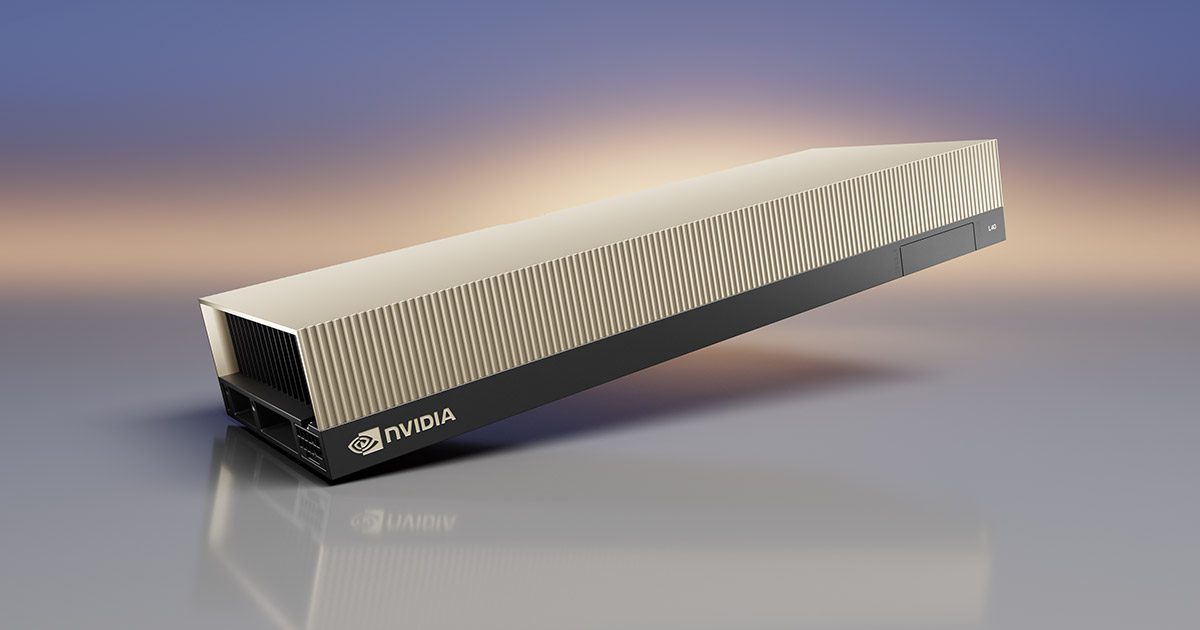

The Nvidia L40S, nestled within this evolving landscape, stands as a testament to the continued innovation and advancement in GPU technology. Its design and capabilities reflect the cumulative knowledge and technological advancements that have been shaping the GPU market. This article delves into the intricate features and specifications of the Nvidia L40S, highlighting its role in the broader context of AI, machine learning, HPC, 3D rendering, and cloud gaming.

Nvidia L40S: An Overview

The unveiling of the Nvidia L40S GPU marked a pivotal moment for AI, high-performance computing, and data center processing. Announced at SIGGRAPH 2023, the L40S is not just another addition to Nvidia’s lineup; it is a strategic move to meet the growing demand for high-scale compute resources across industries. Designed as a universal data center processor, the L40S accelerates some of the most compute-intensive applications, including AI training and inference, 3D design, visualization, and video processing.

Nvidia’s approach with the L40S is a response to the exponential growth in generative AI, which is reshaping workflows in sectors as diverse as healthcare, game development, and product design. The GPU, equipped with 48GB of memory and based on the Nvidia Ada Lovelace architecture, features fourth-generation Tensor Cores and an FP8 Transformer Engine. According to Nvidia, this setup delivers up to 1.7x the training performance and 1.2x the generative AI inference performance of the previous-generation Nvidia A100 Tensor Core GPU. It’s not just about raw power; the L40S is also engineered for high-fidelity professional visualization, offering 212 teraflops of ray-tracing performance and nearly 5x the single-precision floating-point performance of the A100 GPU.

Early adoption of the L40S has been significant, with Arkane Cloud, a specialist in large-scale, GPU-accelerated workloads, being among the first cloud service providers to offer L40S instances. This move by Arkane Cloud underlines the GPU’s potential in tackling complex workloads across various industries, from AI design to interactive video.

Furthermore, the L40S benefits from the backing of the Nvidia AI Enterprise software, offering comprehensive support for over 100 frameworks and tools, making it a robust choice for enterprise applications. Coupled with the updates to the Nvidia Omniverse platform, the L40S is set to be a cornerstone in powering next-gen AI and graphics performance, particularly in generative AI pipelines and Omniverse workloads. The anticipated availability of the L40S, starting in the fall with global system builders like ASUS, Dell Technologies, and Lenovo incorporating it into their offerings, points to a widespread impact across numerous industries.

This strategic positioning of the L40S in Nvidia’s product line not only underscores its technical prowess but also reflects a keen understanding of the evolving demands of modern computing. It’s a GPU designed not just for today’s challenges but also for the unknown possibilities of tomorrow.

Technical Specifications of the Nvidia L40S

The Nvidia L40S is defined by its specifications and features. At its core, the L40S uses the AD102 graphics processor. This processor belongs to Nvidia’s Ada Lovelace generation of data center GPUs, which follows the Ampere generation and is offered alongside the compute-focused Hopper generation.

The technical specifications of the L40S are a testament to its capabilities:

- Graphics Processor and Core Configuration: The AD102 GPU boasts 18,176 shading units, 568 texture mapping units (TMUs), and 192 ROPs. These specifications are crucial for high-end graphics rendering and complex computational tasks.

- Memory Specifications: Equipped with a substantial 48 GB GDDR6 memory and a 384-bit memory bus, the L40S ensures smooth data handling and storage, essential for intensive applications like AI and machine learning.

- Clock Speeds and Performance: The base clock speed of the L40S GPU is 1110 MHz, which can be boosted up to 2520 MHz. Additionally, the memory clock runs at 2250 MHz (18 Gbps effective), facilitating rapid data processing and transfer.

- Architectural Features: The AD102 is manufactured by TSMC on a 5 nm-class process, with a die size of 609 mm² and 76.3 billion transistors. This high transistor density contributes to its efficiency and computational power.

- Render Configurations: It includes 568 Tensor Cores and 142 Ray Tracing (RT) Cores. The presence of Tensor Cores enhances machine learning applications, while RT Cores are pivotal for advanced graphics rendering.

- Board Design: The L40S is a dual-slot card, measuring 267 mm in length and 111 mm in width. Its maximum power draw is rated at 350 W, so the host system’s power supply needs to be sized accordingly. For connectivity, it includes 4x DisplayPort 1.4a outputs.

- Advanced Graphics Features: The GPU supports DirectX 12 Ultimate, OpenGL 4.6, OpenCL 3.0, Vulkan 1.3, CUDA compute capability 8.9, and Shader Model 6.7, making it versatile across various platforms and applications.

The Nvidia L40S, with its robust specifications and forward-looking features, stands as a powerhouse in the GPU market, tailored to meet the demands of next-generation computing and AI applications.
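Several of the figures in the list above can be cross-checked against one another. The short Python sketch below does two things: it derives the number of streaming multiprocessors (SMs) implied by the shading-unit count, and it computes the peak memory bandwidth implied by the bus width and effective data rate. The per-SM ratios (128 shading units, 4 TMUs, 4 Tensor Cores, and 1 RT Core per SM) are assumptions about the Ada Lovelace layout that are not stated in this article, and the function names are hypothetical.

```python
# Figures quoted in the specification list above.
SHADING_UNITS = 18_176
TMUS = 568
TENSOR_CORES = 568
RT_CORES = 142
BUS_WIDTH_BITS = 384
EFFECTIVE_GBPS_PER_PIN = 18  # 2250 MHz memory clock, 18 Gbps effective

# Assumed per-SM layout for Ada Lovelace (not stated in the article).
SHADING_UNITS_PER_SM = 128
TMUS_PER_SM = 4
TENSOR_CORES_PER_SM = 4
RT_CORES_PER_SM = 1


def sm_count(shading_units, per_sm=SHADING_UNITS_PER_SM):
    """Number of SMs implied by the shading-unit count."""
    return shading_units // per_sm


def memory_bandwidth_gb_s(bus_width_bits, gbps_per_pin):
    """Peak bandwidth in GB/s: bits per second across the whole bus, divided by 8."""
    return bus_width_bits * gbps_per_pin / 8


if __name__ == "__main__":
    sms = sm_count(SHADING_UNITS)
    print("Implied SM count:", sms)
    print("TMU count consistent:", sms * TMUS_PER_SM == TMUS)
    print("Tensor Core count consistent:", sms * TENSOR_CORES_PER_SM == TENSOR_CORES)
    print("RT Core count consistent:", sms * RT_CORES_PER_SM == RT_CORES)
    print("Peak memory bandwidth (GB/s):",
          memory_bandwidth_gb_s(BUS_WIDTH_BITS, EFFECTIVE_GBPS_PER_PIN))
```

Under these assumptions the listed core counts agree with one another, and the memory configuration works out to a theoretical peak that real workloads will only approach.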

Performance and Capabilities of the Nvidia L40S

The Nvidia L40S GPU, poised to make a considerable impact in the domain of artificial intelligence (AI), machine learning (ML), and high-performance computing (HPC), is an embodiment of cutting-edge technology and versatility. Slated for release by the end of 2023, the L40S is expected to set new benchmarks in performance across a variety of demanding applications.

Designed with the Ada Lovelace architecture, the L40S is projected to be the most powerful universal GPU for data centers. Its capabilities are particularly geared towards AI training, Large Language Models (LLMs), and multi-workload environments, highlighting its aptitude for handling complex and data-intensive tasks.

In terms of theoretical performance, the L40S outshines its predecessor, the L40, and is anticipated to be up to four times faster for AI and graphics workloads compared to the previous generation A40 GPU. Its AI training performance is expected to be 1.7 times faster than the A100 GPU, with inference capabilities 1.5 times faster. These improvements are attributed to faster clock speeds and enhanced tensor and graphics rendering performance.

While the theoretical capabilities of the L40S are promising, it’s important to note that its real-world performance is yet to be ascertained. The L40S’s practical performance, particularly in comparison to extensively tested GPUs like the A100 and H100, remains to be seen.

The L40S is designed to be a versatile and user-friendly GPU, capable of handling a diverse range of workloads. Its high computational power makes it well-suited for tasks in AI and ML training, data analytics, and advanced graphics rendering. Industries such as healthcare, automotive, and financial services are poised to benefit significantly from the L40S’s capabilities. Its ease of implementation, unlike other GPUs that may require specialized knowledge or extensive setup, is another feature that stands out, allowing for quick and straightforward integration into existing systems.

Overall, the L40S is tailored for various applications, from AI and machine learning to high-performance computing and data analytics. Its robust feature set makes it a versatile choice for both small and large-scale operations, indicating its potential to become a staple in the world of advanced computing.

AI and Machine Learning Focus of the Nvidia L40S

The Nvidia L40S GPU, heralded as the most powerful universal GPU, is designed to revolutionize AI and machine learning capabilities in data centers. This breakthrough is due to its exceptional combination of AI compute power, advanced graphics, and media acceleration. The L40S is uniquely equipped to handle generative AI, large language model (LLM) inference and training, 3D graphics, rendering, and video, marking it as a versatile powerhouse for a range of data center workloads.

Tensor Performance

The L40S’s peak tensor performance is rated at 1,466 TFLOPS. That figure applies to FP8 operations with structured sparsity enabled; dense FP8 throughput is half of it, at 733 TFLOPS. This level of performance is pivotal for AI and machine learning applications, where processing large and complex datasets swiftly and accurately is critical.

RT Core Performance

With 212 TFLOPS in RT Core performance, the L40S is adept at handling tasks that require enhanced throughput and concurrent ray-tracing and shading capabilities. This feature is particularly beneficial for applications in product design, architecture, engineering, and construction, where rendering lifelike designs and animations in real-time is essential.

Single-Precision Performance

The GPU’s single-precision performance stands at 91.6 TFLOPS. This specification indicates its efficiency in workflows that demand accelerated FP32 throughput, such as 3D model development and computer-aided engineering (CAE) simulations. The L40S also supports mixed-precision workloads with its enhanced 16-bit math capabilities (BF16).
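The single-precision figure can be reproduced from the shading-unit count and boost clock given in the specifications. The Python sketch below assumes that each shading unit completes one fused multiply-add per clock, counted as two floating-point operations; that convention is an assumption about how the peak figure is derived, and `peak_fp32_tflops` is a hypothetical helper name.

```python
SHADING_UNITS = 18_176   # from the specification list
BOOST_CLOCK_MHZ = 2520   # from the specification list
FLOPS_PER_UNIT_PER_CLOCK = 2  # assumption: one fused multiply-add = 2 operations


def peak_fp32_tflops(shading_units, boost_clock_mhz,
                     flops_per_clock=FLOPS_PER_UNIT_PER_CLOCK):
    """Theoretical peak FP32 throughput in TFLOPS at the boost clock."""
    flops_per_second = shading_units * flops_per_clock * boost_clock_mhz * 1e6
    return flops_per_second / 1e12


if __name__ == "__main__":
    tflops = peak_fp32_tflops(SHADING_UNITS, BOOST_CLOCK_MHZ)
    print(f"Peak FP32: {tflops:.1f} TFLOPS")
```

This is a theoretical ceiling reached only when every unit is busy at the boost clock; sustained throughput in real workloads is lower.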

Fourth-Generation Tensor Cores

Equipped with fourth-generation Tensor Cores, the L40S offers hardware support for structural sparsity and an optimized TF32 format. These cores provide out-of-the-box performance gains, significantly enhancing AI and data science model training. They also accelerate AI-enhanced graphics capabilities, such as DLSS (Deep Learning Super Sampling), to upscale resolution with better performance in selected applications.
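Structural sparsity on Nvidia Tensor Cores follows a 2:4 pattern: in every group of four consecutive weights, at most two are non-zero. The Python sketch below illustrates the pattern by keeping the two largest-magnitude weights in each group and zeroing the rest. It is a simplified illustration, not Nvidia’s pruning tooling, and `prune_2_of_4` is a hypothetical name.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in every group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    dense = [0.1, -0.9, 0.5, 0.05, 1.0, 0.2, -0.3, 0.0]
    print(prune_2_of_4(dense))
```

Because half of the weights in each group are zero, the hardware can skip them, which is why peak sparse throughput is commonly quoted as up to twice the dense figure. In practice a model is usually fine-tuned after pruning to recover accuracy.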

Transformer Engine

The Transformer Engine in the L40S dramatically accelerates AI performance and improves memory utilization for both training and inference. Leveraging the Ada Lovelace architecture and fourth-generation Tensor Cores, this engine scans transformer architecture neural networks and automatically adjusts between FP8 and FP16 precisions. This functionality ensures faster AI performance and expedites training and inference processes.
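To give a feel for why a choice between FP8 and FP16 has to be made at all, the Python sketch below applies a toy per-tensor rule. It scales a tensor so that its largest magnitude maps onto the FP8 maximum, then falls back to FP16 if the smallest non-zero value would underflow. This is not Nvidia’s algorithm: the rule, the function names, and the use of the E4M3 variant of FP8 (largest finite value 448, smallest positive value 2^-9) are all assumptions made for illustration.

```python
FP8_E4M3_MAX = 448.0                 # largest finite E4M3 value
FP8_E4M3_MIN_SUBNORMAL = 2.0 ** -9   # smallest positive E4M3 value


def fp8_scale(values):
    """Per-tensor scale that maps the largest magnitude onto the FP8 maximum."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return 1.0
    return FP8_E4M3_MAX / amax


def choose_precision(values):
    """Toy rule: pick FP8 only if no non-zero value underflows after scaling."""
    nonzero = [abs(v) for v in values if v != 0.0]
    if not nonzero:
        return "fp8"
    smallest_scaled = min(nonzero) * fp8_scale(values)
    return "fp8" if smallest_scaled >= FP8_E4M3_MIN_SUBNORMAL else "fp16"


if __name__ == "__main__":
    print(choose_precision([0.5, -2.0, 1.0]))   # narrow range of magnitudes
    print(choose_precision([1e-9, 1000.0]))     # very wide range of magnitudes
```

The point of the sketch is that tensors with a narrow range of magnitudes tolerate 8-bit storage well, while tensors with a very wide range lose their small values and are better kept at higher precision.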

DLSS 3

Nvidia DLSS 3 on the L40S enables ultra-fast rendering and smoother frame rates. This frame-generation technology uses deep learning and the latest hardware in the Ada Lovelace architecture, including fourth-generation Tensor Cores and an Optical Flow Accelerator. It boosts rendering performance, delivers higher frames per second (FPS), and significantly improves latency, enhancing the overall visual experience in AI-driven applications.

The L40S GPU stands as a testament to Nvidia’s commitment to advancing AI and machine learning technologies. Its robust features and specifications not only make it a formidable tool in the arsenal of data centers but also pave the way for future innovations in AI and machine learning applications.

Practical Applications and Use Cases of the Nvidia L40S

Delving into the practical applications and use cases of the Nvidia L40S GPU reveals its diverse capabilities across various industries and tasks. The L40S, with its high computational power and advanced features, is not just a tool for enhancing existing technologies but a catalyst for new possibilities and innovations.

- Cloud Gaming and VR/AR Development: The L40S, with its high tensor and RT Core performance, is ideal for cloud gaming platforms and the development of virtual reality (VR) and augmented reality (AR) applications. Its ability to handle complex graphics rendering and high frame rates makes it a potent tool for creating immersive gaming experiences and realistic virtual environments.

- Scientific Research and Simulations: In the realm of scientific research, the L40S can significantly accelerate simulations and complex calculations. This applies to fields like climate modeling, astrophysics, and biomedical research, where processing large datasets and complex models is crucial.

- Film and Animation Production: The entertainment industry, especially film and animation, can leverage the L40S for rendering high-quality graphics and animations. The GPU’s advanced ray-tracing capabilities enable creators to produce more lifelike and detailed visuals, enhancing the visual storytelling experience.

- Automotive and Aerospace Engineering: In automotive and aerospace engineering, the L40S can be used for simulations and designing advanced models. Its precision and speed in processing complex calculations make it suitable for designing safer and more efficient vehicles and aircraft.

- Financial Modeling and Risk Analysis: The financial sector can utilize the L40S for high-speed data processing in areas like risk analysis and algorithmic trading. Its ability to quickly process large volumes of data can provide insights and forecasts, crucial for making informed financial decisions.

- Healthcare and Medical Imaging: The L40S’s capabilities can be harnessed in healthcare for tasks like medical imaging and diagnostics. Its computational power aids in processing large imaging datasets, potentially leading to faster and more accurate diagnoses.

- AI-Driven Security and Surveillance: In security and surveillance, the L40S can support advanced AI-driven systems for real-time analysis and threat detection, enhancing safety and response measures.

The Nvidia L40S, with its broad spectrum of applications, is set to be a transformative force across industries, driving innovation, efficiency, and advancements in various fields.

Cost and Availability of the Nvidia L40S

The Nvidia L40S, as an emerging leader in the GPU market, presents a compelling blend of cost-effectiveness and availability that is shaping its adoption in various industries. Its pricing strategy and market presence are particularly noteworthy when contrasted with its contemporaries.

- Cost Comparison: The Nvidia H100, a top-tier GPU in Nvidia’s lineup, is priced at approximately $32K and has faced backorders of up to six months. In contrast, the Nvidia L40S emerges as a more affordable alternative. For instance, at the time of writing, the H100 is about 2.6 times more expensive than the L40S. This significant price difference makes the L40S an attractive option for enterprises and AI users requiring powerful compute resources without the hefty price tag.

- Market Availability: The availability of the L40S is another aspect where it stands out. In comparison to the Nvidia H100, which has seen delays and extended waiting times, the L40S is much faster to acquire. This enhanced availability is crucial for businesses and developers who require immediate access to powerful computing resources. The faster availability of the L40S ensures that enterprises can rapidly deploy and integrate these GPUs into their systems, thereby minimizing downtime and accelerating project timelines.

In summary, the Nvidia L40S not only offers cutting-edge technology and performance but also presents a more cost-effective and readily available option compared to other high-end GPUs in the market. Its pricing and availability are set to play a significant role in its adoption across various sectors, offering a balanced solution of advanced capabilities and economic feasibility.
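As a rough back-of-the-envelope check, the price figures above can be combined. The Python sketch below assumes that the roughly $32K price and the 2.6x ratio quoted above refer to the same high-end GPU. The result is an implied estimate under that assumption, not a quoted L40S price, and `implied_price` is a hypothetical helper name.

```python
REFERENCE_PRICE_USD = 32_000  # approximate high-end GPU price quoted above
PRICE_RATIO = 2.6             # "about 2.6 times more expensive than the L40S"


def implied_price(reference_price_usd, price_ratio):
    """Price implied for the cheaper card when the reference costs price_ratio times as much."""
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    return reference_price_usd / price_ratio


if __name__ == "__main__":
    estimate = implied_price(REFERENCE_PRICE_USD, PRICE_RATIO)
    print(f"Implied L40S price: about ${estimate:,.0f}")
    print(f"Implied difference per card: about ${REFERENCE_PRICE_USD - estimate:,.0f}")
```

Actual street prices vary by vendor, volume, and date, so the output is best read as an order-of-magnitude guide.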

The Future Trajectory of the Nvidia L40S in the GPU Market

As we explore the emerging trends and future possibilities surrounding the Nvidia L40S, it’s clear that this GPU is not just a leap forward in terms of current technology, but a harbinger of future advancements in the GPU market. The L40S, with its powerful blend of capabilities and features, is poised to shape the trajectory of how GPUs are perceived and utilized in various sectors.

- Setting New Standards in GPU Technology: The Nvidia L40S is on the brink of setting new benchmarks in the GPU market. With its advanced capabilities in AI, machine learning, and high-performance computing, it is redefining what is possible with GPU technology. The L40S’s impact is expected to go beyond just performance improvements, influencing how future GPUs are designed and what features they prioritize.

- Catalyst for Next-Gen AI and ML Applications: The L40S is poised to become a catalyst for the development of next-generation AI and ML applications. Its ability to efficiently handle complex tasks and large datasets makes it an ideal tool for pushing the boundaries of AI research and application, paving the way for breakthroughs in fields like autonomous systems, advanced analytics, and intelligent automation.

- Democratization of High-Performance Computing: The L40S stands to play a pivotal role in democratizing high-performance computing. By offering a balance of high power and relative affordability, it enables a wider range of organizations and researchers to access state-of-the-art computing resources. This democratization could lead to a surge in innovation and research across various fields, as more entities gain the ability to tackle complex computational problems.

- Impact on Data Center and Cloud Services: The L40S is expected to significantly impact data center and cloud service operations. Its efficiency and power are ideal for large-scale data center environments, enhancing the capabilities of cloud services in processing, storage, and AI-driven tasks. This could lead to more efficient and powerful cloud computing solutions, benefiting a wide array of industries.

- Influencing Future GPU Developments: The introduction and success of the L40S are likely to influence the direction of future GPU developments. Its design and features may set a precedent that other manufacturers follow, leading to a new generation of GPUs that are more efficient, powerful, and AI-focused.

In conclusion, the Nvidia L40S is not just a new product release; it is a glimpse into the future of GPU technology. Its influence is expected to be far-reaching, impacting everything from AI development to the democratization of high-performance computing. As this technology evolves, it will likely continue to shape the landscape of computing and innovation for years to come.


Jun 14, 2024  By Julien Gauthier
