Nvidia H100 price

Introduction to Nvidia H100

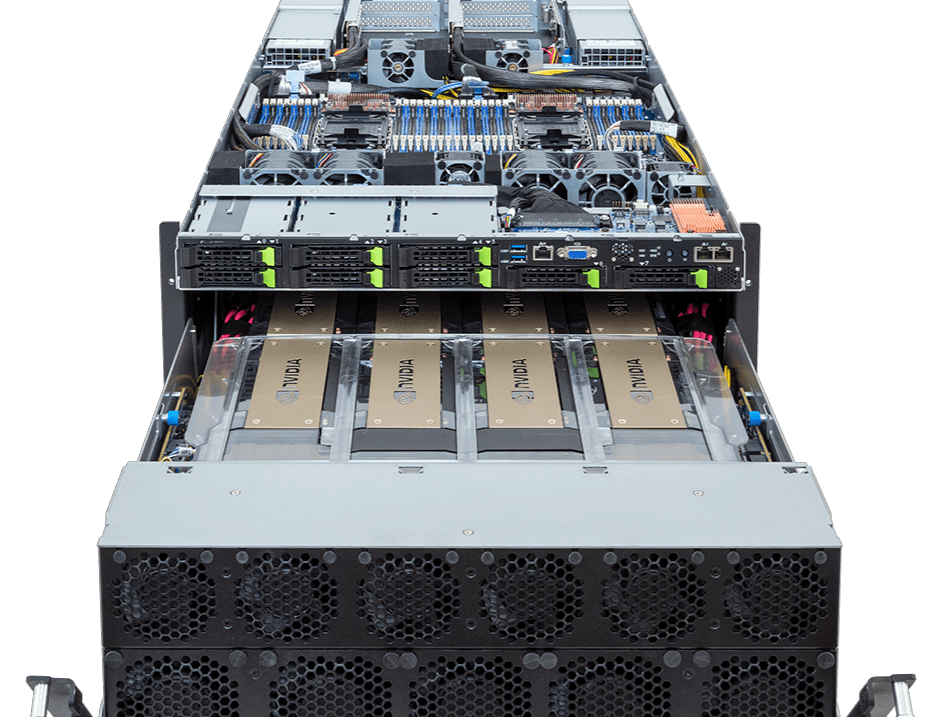

The Nvidia H100 Tensor Core GPU marks a significant leap in accelerated computing, setting new standards for performance, scalability, and security in data centers. This advanced GPU, part of Nvidia’s Hopper architecture, is engineered to handle the most demanding data workloads and AI applications. With its ability to connect up to 256 H100 GPUs via the NVLink® Switch System, it’s uniquely poised to accelerate exascale workloads, including trillion-parameter language models, making it a powerhouse for large-scale AI applications and high-performance computing (HPC).

The H100’s PCIe-based NVL model is particularly notable for its ability to handle large language models (LLMs) of up to 175 billion parameters. It achieves this by combining the Transformer Engine, NVLink, and 188GB of HBM3 memory (94GB per GPU across an NVLink-bridged pair), offering strong performance and straightforward scaling across data center environments. In practical terms, servers equipped with H100 NVL GPUs can deliver up to 12 times the GPT-175B performance of NVIDIA’s previous-generation DGX™ A100 systems while maintaining low latency, even in power-constrained settings.
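To see why memory capacity matters for a model of this size, here is a back-of-the-envelope sketch of how much memory the weights of a 175-billion-parameter model occupy at FP16 (2 bytes per parameter) and FP8 (1 byte per parameter), and how many GPUs of a given capacity would be needed just to hold them. It counts weights only and ignores the KV cache, activations, and framework overhead, so real deployments need more headroom. The 94GB per-GPU figure is Nvidia's published H100 NVL spec; the function names are illustrative, not from any library.

```python
import math


def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for the model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, bytes_per_param: float,
                         gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights.

    Ignores KV cache, activations, and fragmentation, so this is a lower bound.
    """
    return math.ceil(weight_footprint_gb(num_params, bytes_per_param) / gpu_memory_gb)


PARAMS = 175e9  # a GPT-175B-class model, as in the text

for label, nbytes in [("FP16", 2), ("FP8", 1)]:
    size = weight_footprint_gb(PARAMS, nbytes)
    print(f"{label}: {size:.0f} GB of weights; "
          f"{min_gpus_for_weights(PARAMS, nbytes, 80)} x 80GB GPUs, "
          f"{min_gpus_for_weights(PARAMS, nbytes, 94)} x 94GB GPUs")
```

Run as-is, it reports 350 GB of weights at FP16 and 175 GB at FP8. At FP8 the weights fit across two 94GB GPUs but need three 80GB cards, which illustrates why FP8 support and larger per-GPU memory matter for models of this size.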

A critical aspect of the H100 is its integration with the NVIDIA AI Enterprise software suite, provided with a five-year subscription. This suite simplifies AI adoption and maximizes performance, ensuring that organizations have access to essential AI frameworks and tools. This integration is key for developing AI-driven workflows, such as chatbots, recommendation engines, and vision AI applications.

The H100’s fourth-generation Tensor Cores and Transformer Engine, featuring FP8 precision, offer up to four times faster training for models like GPT-3 (175B), compared to previous generations. Its high-bandwidth interconnects and networking capabilities, coupled with NVIDIA’s software ecosystem, enable efficient scalability from small enterprise systems to extensive, unified GPU clusters.

In AI inference, the H100 extends NVIDIA’s leadership with advancements that accelerate inference by up to 30 times while reducing latency. The Transformer Engine’s FP8 support is designed to cut memory use and speed up large language models while maintaining their accuracy.

The H100 also triples the double-precision (FP64) Tensor Core FLOPS of the previous generation, delivering exceptional computational power for high-performance computing applications. Using TF32 precision, AI-fused HPC applications can reach one petaflop of throughput for single-precision matrix-multiply operations without code changes.

Data analytics often consumes the bulk of the time in AI application development, and H100-accelerated servers target this bottleneck. Their high memory bandwidth and interconnect technologies let them process vast datasets with high performance and scalability. NVIDIA complements the hardware with Quantum-2 InfiniBand networking and a software ecosystem that includes GPU-accelerated Spark 3.0.

Additionally, the H100 incorporates second-generation Multi-Instance GPU (MIG) technology, which maximizes GPU utilization by partitioning a single GPU into as many as seven isolated instances. Combined with confidential computing support, this makes the H100 well suited to multi-tenant cloud service provider environments, where resources must be shared securely and efficiently.

NVIDIA has also integrated Confidential Computing into the Hopper architecture, making the H100 the first accelerator with such capabilities. This feature allows users to protect the confidentiality and integrity of their data and applications while benefiting from the H100’s acceleration capabilities. It creates a trusted execution environment that secures workloads running on the GPU, ensuring data security in compute-intensive applications like AI and HPC.

Moreover, the H100 CNX uniquely combines the power of the H100 with advanced networking capabilities. This convergence is critical for managing GPU-intensive workloads, including distributed AI training in enterprise data centers and 5G processing at the edge, delivering unparalleled performance in these applications.

Finally, the Hopper Tensor Core GPU, including the H100, will power the NVIDIA Grace Hopper CPU+GPU architecture, designed for terabyte-scale accelerated computing. This architecture provides significantly higher performance for large-model AI and HPC applications, demonstrating NVIDIA’s commitment to pushing the boundaries of computing power and efficiency.

Current Pricing Landscape

The current market dynamics of Nvidia’s H100 GPUs offer a fascinating insight into the evolving landscape of high-performance computing and AI infrastructure. Recent trends indicate an increasing availability of these GPUs, as numerous companies have reported receiving thousands of H100 units. This surge in supply is gradually transforming the cost structure associated with H100 GPU computing. For instance, datacenter providers and former Bitcoin mining companies are now able to offer H100 GPU computing services at considerably lower prices compared to large cloud providers, which traditionally charged a premium for VMs accelerated by H100 GPUs.

Amazon’s move to accept reservations for H100 GPUs for periods of one to 14 days, through its EC2 Capacity Blocks for ML offering, is a strategic response to anticipated surges in demand. The decision underscores the growing interest in these GPUs and hints at efforts to normalize supply, which in turn could make AI more accessible to a broader range of companies.

Nvidia has been carefully managing the distribution of H100 GPUs, focusing on customers with significant AI models, robust infrastructure, and the financial means to invest in advanced computing resources. This selective approach has been crucial in ensuring that these powerful GPUs are utilized effectively across various sectors. Notably, companies like Tesla have been prioritized due to their well-defined AI models and substantial investment capabilities.

In a significant move, datacenter provider Applied Digital acquired 34,000 H100 GPUs, with a plan to deploy a large portion of these by April and additional units subsequently. This purchase not only demonstrates the immense scale at which companies are investing in AI and high-performance computing but also reflects the growing demand for Nvidia’s H100 GPUs in sophisticated data center environments.
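For a sense of scale, the sketch below multiplies the order size by the roughly $30,000 average US retail price for an H100 PCIe card cited later in the article. Actual volume pricing for a deal like this is not public and is likely lower, so the `volume_discount` parameter is a hypothetical input, and the result covers the GPUs alone, not servers, networking, or facilities.

```python
def fleet_cost_usd(num_gpus: int, unit_price_usd: float,
                   volume_discount: float = 0.0) -> float:
    """Hardware cost of the GPUs alone.

    volume_discount is a hypothetical fraction off the unit price (0.1 = 10% off).
    """
    if not 0.0 <= volume_discount < 1.0:
        raise ValueError("volume_discount must be in [0, 1)")
    return num_gpus * unit_price_usd * (1.0 - volume_discount)


# 34,000 GPUs at the ~$30,000 retail figure, with no discount applied
print(f"${fleet_cost_usd(34_000, 30_000):,.0f}")
```

That prints $1,020,000,000, or roughly $1 billion at retail; any negotiated discount would bring the figure down proportionally.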

The trend towards using H100 GPUs as collateral for securing financing in the tech sector further emphasizes their value beyond mere computing power. Companies like Crusoe Energy and CoreWeave have secured significant funding using H100 GPUs as collateral, indicating the high market value and trust placed in these GPUs.

Looking forward, the market dynamics for Nvidia’s H100 GPUs are poised for further shifts. The U.S. government’s restrictions on GPU shipments to Chinese companies, for example, could free up manufacturing capacity for H100 chips, affecting their availability and pricing in the U.S. and other markets.

Global Pricing Variations

The Nvidia H100 GPU, heralded as the most powerful in the market, exhibits significant pricing variations across the globe, influenced by factors such as local currencies, regional demand, and supply chain dynamics. In Japan, for example, Nvidia’s official sales partner, GDEP Advance, raised the catalog price of the H100 GPU by 16% in September 2023, setting it at approximately 5.44 million yen ($36,300). This increase reflects not only the high demand for the chip in AI and generative AI development but also the impact of currency fluctuations. The weakening yen, losing about 20% of its value against the US dollar in the past year, has compounded the cost for Japanese companies, who now have to pay more to purchase these GPUs from the US.
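The figures in this paragraph can be unpacked with a little arithmetic. The sketch below derives the implied pre-increase catalog price (a derived number, not one GDEP Advance reported), the exchange rate implied by quoting the same price in yen and dollars, and why a 20% fall in the yen's dollar value raises the yen cost of a dollar-priced product by 25% rather than 20%. The function names are illustrative.

```python
def pre_increase_price(new_price: float, increase_pct: float) -> float:
    """Undo a percentage increase: new = old * (1 + pct / 100)."""
    return new_price / (1 + increase_pct / 100)


def implied_rate(price_yen: float, price_usd: float) -> float:
    """Yen per US dollar implied by quoting the same price in both currencies."""
    return price_yen / price_usd


def yen_cost_multiplier(yen_depreciation_pct: float) -> float:
    """If the yen loses p% of its dollar value, a fixed dollar price
    costs 1 / (1 - p / 100) times as many yen."""
    return 1 / (1 - yen_depreciation_pct / 100)


# Figures reported in the text (September 2023 catalog price)
print(f"Catalog price before the 16% rise: ~{pre_increase_price(5.44e6, 16):,.0f} yen")
print(f"Implied exchange rate: ~{implied_rate(5.44e6, 36_300):.1f} yen per dollar")
print(f"A 20% weaker yen raises the yen cost of a dollar-priced GPU by "
      f"{yen_cost_multiplier(20) - 1:.0%}")
```

This yields a pre-increase price of about 4,689,655 yen and an implied rate of about 149.9 yen per dollar.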

In contrast, in the United States, the H100 GPU’s price tends to be more stable, with an average retail price of around $30,000 for an H100 PCIe card. This price can still vary significantly with purchase volume and packaging: large-scale purchases by technology companies and educational institutions, for instance, can lead to noticeable price differences. Such variations reflect Nvidia’s strong market position, which gives it flexibility in its pricing strategies.
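Putting the Japanese and US figures side by side gives a rough regional premium. This is a sketch only: the Japanese figure is a dollar conversion of a catalog price, the US figure is an approximate average retail price for the PCIe card, and the two may differ in taxes, margins, and exact configuration, so the comparison is not necessarily like-for-like. The function name is illustrative.

```python
def regional_premium(local_price_usd: float, reference_price_usd: float) -> float:
    """Fractional premium of a regional price over a reference price (0.21 == 21%)."""
    return local_price_usd / reference_price_usd - 1


japan_usd = 36_300  # GDEP Advance catalog price converted to dollars, per the text
us_usd = 30_000     # approximate average US retail price for an H100 PCIe card

print(f"Japan premium over the US average: {regional_premium(japan_usd, us_usd):.0%}")
```

On these numbers, Japanese buyers pay roughly 21% more than the US average.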

Saudi Arabia and the United Arab Emirates (UAE) are also notable players in the H100 market, having purchased thousands of these GPUs. The UAE, which developed an open-source large language model using Nvidia’s A100 GPUs, is expanding its infrastructure with the H100. These purchases underscore the growing global interest in advanced AI and computing capabilities.

The pricing dynamics in different regions reflect not just supply and demand but also the strategic importance of advanced computing technologies. In regions like Japan, where the cost of H100 GPUs has surged, the higher price adds a significant burden for companies already grappling with high expenses in developing generative AI products and services. Such disparities could influence the competitive landscape of the global AI market, as companies in higher-cost regions may struggle to keep pace with counterparts in regions where these critical resources are more affordable.

Market Demand and Supply Dynamics

The Nvidia H100 GPU, a cornerstone of contemporary AI and high-performance computing, has sparked a significant shift in the market dynamics of GPU computing. This transformation is characterized by an increased supply meeting the rising demand from diverse sectors.

A significant number of companies, both large and small, have reported receiving thousands of H100 GPUs in recent months. This surge in availability is reducing the previously long waiting times for accessing these powerful GPUs in the cloud. Datacenter providers and companies transitioning from Bitcoin mining to AI computing are opening new facilities equipped with H100 GPUs. They offer computing power at more competitive rates than larger cloud providers, who traditionally charged a premium for VMs accelerated by H100 GPUs.

Amazon’s initiative to take reservations for H100 GPUs for short-term use reflects a strategic approach to managing future demand surges. This development is crucial in helping companies execute AI strategies that were previously hindered by GPU shortages.

High-profile companies like Tesla have been among the early adopters of the H100. Tesla’s deployment of a large cluster of these GPUs underscores the significant role they play in advancing AI capabilities, particularly in areas like autonomous vehicle development.

Nvidia has adopted a strategic rationing approach for the H100 GPUs, focusing on customers with substantial AI models and infrastructure. This selective distribution has been necessary due to the intense demand and limited supply of these high-end GPUs.

Large orders, such as Applied Digital’s purchase of 34,000 H100 GPUs, highlight the scale at which businesses are investing in AI and computing infrastructure. This trend is set to continue with more companies seeking to enhance their AI capabilities through advanced GPU computing.

Interestingly, diverse sectors are now venturing into AI computing with H100 GPUs. For instance, Iris Energy, a former cryptocurrency miner, is shifting its operations toward generative AI using H100 GPUs. This shift shows how infrastructure built for one workload, such as cryptocurrency mining, is being repurposed for AI.

Voltage Park’s acquisition of a large supply of H100 GPUs and its innovative approach to making GPU computing capacity accessible through the FLOP Auction initiative demonstrate the evolving business models around GPU utilization. This model allows for more flexible and market-driven access to GPU resources.

The H100 GPUs are also becoming valuable assets, used as collateral for significant financing deals in the tech sector. This trend reflects the intrinsic value attached to these GPUs in the current market.

Nvidia’s partnership with cloud providers to expand H100 capacity further diversifies the availability of these GPUs. Large cloud service providers like Oracle, Google, and Microsoft have enhanced their services with H100 GPUs, integrating them into their supercomputing and AI offerings. This integration is a testament to the pivotal role of GPUs in driving the next generation of cloud and AI services.

In summary, the market dynamics around Nvidia’s H100 GPUs are characterized by increased supply, diverse applications, innovative business models, and strategic partnerships, all of which are reshaping the landscape of AI and high-performance computing.

Impact of Increasing GPU Capacity on Pricing

The growing supply of Nvidia H100 GPUs affects pricing in several ways across high-performance computing and AI.

The increasing availability of H100 GPUs, as reported by a wide range of companies, signals a shift towards shorter wait times for cloud access to these GPUs. This development is particularly significant as datacenter providers and companies transitioning from cryptocurrency to AI computing are offering H100 GPU computing at costs lower than those of large cloud providers. This democratization of access is reshaping the economic landscape of GPU computing, making it more accessible and affordable.

Amazon’s initiative to take reservations for H100 GPUs indicates a strategic response to anticipated demand surges, reflecting the broader trend of normalizing GPU supply. This strategy is pivotal in enabling companies to actualize their AI ambitions, which were previously hampered by GPU scarcity.

High-profile adoptions, such as Tesla’s activation of a 10,000 H100 GPU cluster, underscore the critical role of these GPUs in cutting-edge AI applications. Such large-scale deployments also reflect the growing demand and reliance on advanced GPU capabilities in sectors like autonomous driving.

Nvidia’s practice of rationing H100 GPUs during shortages, prioritizing customers by AI model size, infrastructure, and profile, illustrates a targeted approach to distribution. This strategy aims to allocate these powerful GPUs to applications where they can be used most effectively.

Large-scale purchases, such as Applied Digital’s order of 34,000 H100 GPUs, indicate the scale of investment in AI and computing infrastructure, further driving up demand. Such massive deployments also point to the growing importance of GPU computing in high-performance data centers.

The wide-ranging applications of H100 GPUs across various sectors, including startups like Voltage Park and companies transitioning from other industries like Iris Energy, indicate the broadening appeal and utility of these GPUs. These diverse applications also contribute to the changing pricing dynamics as the market adjusts to varied demand.

Innovative financing models, where H100 GPUs are used as collateral, demonstrate the high value placed on these GPUs in the market. This trend not only signifies the intrinsic worth of these GPUs but also the evolving business models around GPU utilization.

A shortage of capacity for CoWoS (Chip-on-Wafer-on-Substrate), the TSMC advanced packaging technology the H100 depends on, has been a bottleneck in the supply chain. However, recent geopolitical developments, such as U.S. restrictions on GPU shipments to Chinese companies, could unexpectedly ease these shortages and boost H100 production, affecting pricing and availability in various markets.

Cloud providers, including Oracle, Google, and Microsoft, expanding their H100 capacity through rental models and cloud services, are reshaping the market. These developments not only enhance access to advanced computing resources but also influence the pricing models in the cloud computing sector.

The narrative around the increasing capacity of Nvidia’s H100 GPUs and its impact on pricing is one of greater accessibility, diversified application, and evolving business models. As the supply of these GPUs stabilizes and broadens across various sectors and regions, the pricing dynamics are likely to become more competitive and favorable for a wider range of users.

Comparative Price Analysis: Nvidia H100 vs. Other GPUs

Nvidia H100 vs. Nvidia H200

- The Nvidia H100 and H200 are two of Nvidia’s flagship data center GPUs, both based on the Hopper architecture. The main difference is memory: the H200 carries 141GB of HBM3e at 4.8 TB/s, compared with 80GB of HBM3 at 3.35 TB/s on the H100 SXM, which benefits memory-bound workloads such as large language model inference. The choice between them therefore depends on how much memory capacity and bandwidth a workload needs.

Nvidia H100 vs. Nvidia A100

- Price-wise, the Nvidia A100 series spans a range: the 80GB model costs approximately $17,000, while the 40GB version can cost as much as $9,000. These figures provide a comparison point for the roughly $30,000 H100 PCIe card.

- In terms of performance, the H100 is a clear step up from the A100, including for scientific computing, where it triples double-precision Tensor Core throughput. It also offers higher memory bandwidth (about 3.35 TB/s on the H100 SXM versus about 2 TB/s on the A100 80GB SXM) and a larger L2 cache (50MB versus 40MB). The A100’s main advantages are its lower price and lower power draw (400W versus up to 700W for the SXM versions). Neither GPU is designed for gaming; both are data center accelerators for AI and HPC.
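One crude way to compare these prices is dollars per gigabyte of GPU memory. The sketch below uses the approximate prices quoted in this article (the ~$30,000 H100 PCIe figure comes from the US pricing discussion, and the card has 80GB of memory). It deliberately ignores compute and bandwidth, which are where the H100's advantages lie, so it measures capacity cost only.

```python
# Approximate prices as quoted in the article, paired with memory capacity in GB.
CARDS = {
    "A100 40GB": (9_000, 40),
    "A100 80GB": (17_000, 80),
    "H100 PCIe 80GB": (30_000, 80),
}


def dollars_per_gb(price_usd: float, memory_gb: float) -> float:
    """Purchase price divided by on-board memory capacity."""
    return price_usd / memory_gb


for name, (price, mem) in CARDS.items():
    print(f"{name}: ${dollars_per_gb(price, mem):,.2f} per GB of GPU memory")
```

The A100 80GB comes out slightly cheaper per gigabyte ($212.50) than the 40GB model ($225.00), while the H100 ($375.00) costs roughly 76% more per gigabyte than the A100 80GB. Buyers pay that premium for compute and bandwidth rather than capacity.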

Nvidia H100 vs. Other Competitors

- The Nvidia H100 has been compared with other high-end AI processors, including Biren’s BR104 GPU, Intel’s Sapphire Rapids Xeon CPU, Qualcomm’s AI 100 accelerator, and Sapeon’s X220 accelerator. These comparisons help place the H100 within the broader market for AI and high-performance computing hardware.

- Technologically, the H100 marks a significant advancement: it is manufactured on TSMC’s 4N process with 80 billion transistors and, according to Nvidia, delivers up to 9 times faster AI training than the A100. The H100 is also billed as the first truly asynchronous GPU, able to overlap data movement with computation, which extends its capabilities beyond those of the A100.

This section of the article provides a detailed analysis of the Nvidia H100’s pricing and performance in comparison to other leading GPUs in the market. It serves to inform tech professionals and enthusiasts about the H100’s relative position and value proposition within the competitive landscape of high-performance GPUs.

Cost Justification for Buyers: Nvidia H100

AI Research and Machine Learning

- Nvidia’s H100 GPU significantly elevates AI research and machine learning capabilities. Nvidia promises up to 9x faster AI training and up to 30x faster AI inference than its predecessor, the A100, making the H100 an essential tool for developing complex machine learning models, especially transformer-based models (an architecture introduced by Google researchers), which the H100’s Transformer Engine is designed to accelerate. This leap in performance justifies its cost for organizations aiming to lead in AI and ML innovation.

High-Performance Computing and Data Science

- The H100’s superior computational prowess is a boon for high-performance computing (HPC) and data science. With more Tensor and CUDA cores, higher clock speeds, and 80GB of HBM3 memory, it offers unprecedented speed and capacity for complex computations in healthcare, robotics, quantum computing, and data science. This makes the H100 a valuable investment for sectors where cutting-edge computational ability is critical.

Cloud Service Providers and Large-Scale Computing

- Cloud service providers stand to benefit significantly from the H100’s capabilities. The GPU’s advanced virtualization features allow it to be divided into seven isolated instances, enabling efficient resource allocation and enhanced data security through Confidential Computing. Furthermore, Nvidia’s DGX H100 servers and DGX POD/SuperPOD configurations, which utilize multiple H100 GPUs, offer scalable solutions for delivering AI services at an unprecedented scale. This scalability and efficiency make the H100 a strategic investment for cloud providers looking to offer competitive AI and machine learning services.

Server Manufacturers and Data Center Operators

- For server manufacturers and data center operators, the H100 introduces new design requirements, especially for power and thermal management. Although it delivers higher performance per watt than its predecessor, its TDP of up to 700W (in the SXM form factor) demands robust cooling and power infrastructure. Operators that can power and cool H100-equipped servers efficiently gain a competitive edge, which Nvidia recognizes with its ‘DGX-Ready’ designation for qualified data centers. This ability to host high-performance systems in demanding environments justifies the H100’s cost for data centers aiming to offer the most advanced computing solutions.
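To make the 700W figure concrete, the sketch below estimates GPU power for one server and the energy it would use over a year. It assumes an 8-GPU server such as a DGX H100 and, by default, that every GPU runs at its full TDP around the clock; the `utilization` parameter lets you lower that. It counts the GPUs only, not CPUs, networking, fans, or cooling overhead, so whole-facility figures will be higher. The function and constant names are illustrative.

```python
GPU_TDP_W = 700        # per-GPU TDP cited in the text (SXM form factor)
GPUS_PER_SERVER = 8    # assumption: an 8-GPU server such as a DGX H100
HOURS_PER_YEAR = 24 * 365


def gpu_power_kw(num_gpus: int, tdp_w: float = GPU_TDP_W) -> float:
    """Peak GPU power only; excludes CPUs, networking, fans, and cooling."""
    return num_gpus * tdp_w / 1000


def annual_energy_kwh(power_kw: float, utilization: float = 1.0) -> float:
    """Energy over one year at a given average utilization (1.0 = always at TDP)."""
    return power_kw * HOURS_PER_YEAR * utilization


server_kw = gpu_power_kw(GPUS_PER_SERVER)
print(f"GPU power per server: {server_kw:.1f} kW")
print(f"Energy per year at full load: {annual_energy_kwh(server_kw):,.0f} kWh")
```

This gives 5.6 kW of GPU power per server and about 49,056 kWh per year at full load. Applied to the 10,000-GPU Tesla cluster mentioned earlier, `gpu_power_kw(10_000)` returns 7,000 kW for the GPUs alone.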

This section elucidates the value proposition of the Nvidia H100 for different user groups, demonstrating why its cost is justified given its unparalleled capabilities in various computing domains.

Jun 07,2024

By Julien Gauthier