NVIDIA H100

The NVIDIA H100 is a premier data center GPU, designed specifically for AI training and inference. For teams that need powerful computational resources to handle AI inference workloads, the H100 stands out as a top-tier solution.

Discover the Features of the NVIDIA H100

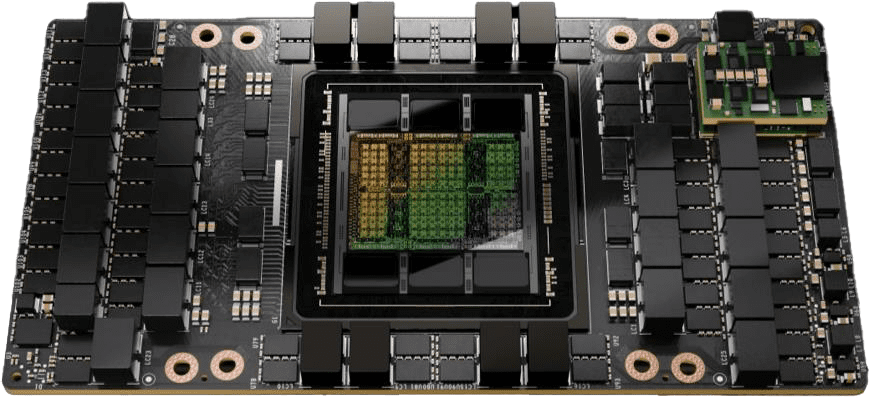

Built on NVIDIA's Hopper architecture, the H100 is well suited to large-scale AI tasks. With 80GB of HBM2e memory and 2TB/s of memory bandwidth, it offers exceptional performance for demanding workloads.

With network speeds of up to 3.2 Tbps, our H100 servers accelerate generative AI training, inference, and HPC workloads. Reserve your NVIDIA H100 GPU resources now!
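For a rough sense of what these figures mean in practice, the Python sketch below turns them into back-of-envelope numbers. It is only an illustration: the model sizes (7 and 70 billion parameters) and the 2 bytes per parameter are assumptions, not figures from this page, and the estimate counts model weights only, ignoring activations, optimizer state, and KV cache.

```python
import math

# Figures quoted on this page.
GPU_MEMORY_GB = 80      # memory per H100
NETWORK_TBITS = 3.2     # network speed in terabits per second


def weights_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Memory needed for model weights alone, in GB.

    bytes_per_param=2 assumes 16-bit weights (FP16/BF16); this is an
    illustrative assumption, not a figure from the page.
    """
    return params_billions * bytes_per_param


def min_gpus_for_weights(params_billions: float, bytes_per_param: int = 2) -> int:
    """Smallest number of 80 GB GPUs whose combined memory holds the weights."""
    return math.ceil(weights_gb(params_billions, bytes_per_param) / GPU_MEMORY_GB)


def network_gbytes_per_s(tbits: float = NETWORK_TBITS) -> float:
    """Convert terabits per second to gigabytes per second (8 bits per byte)."""
    return tbits * 1000 / 8


if __name__ == "__main__":
    for size in (7, 70):  # hypothetical model sizes, in billions of parameters
        print(f"{size}B parameters: {weights_gb(size):.0f} GB of weights, "
              f"at least {min_gpus_for_weights(size)} GPU(s)")
    print(f"{NETWORK_TBITS} Tbps is about {network_gbytes_per_s():.0f} GB/s")
```

Real deployments need headroom beyond the weights, so treat the GPU counts as lower bounds rather than sizing advice.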

Powerful Computing

Unleash the computing power you need for AI training, simulations, and data analysis with the Hopper architecture and 80GB of high-bandwidth memory.

Optimized for AI Workloads

The NVIDIA H100 excels at AI workloads, pairing its advanced architecture with high-performance computing capabilities to handle demanding tasks.

High-Performance AI Inference

The NVIDIA H100 delivers exceptional AI inference performance, combining its advanced architecture with high-speed computing to run demanding AI applications efficiently.

With the H100, Get the Best Ready-Made Solution for AI and HPC Workloads

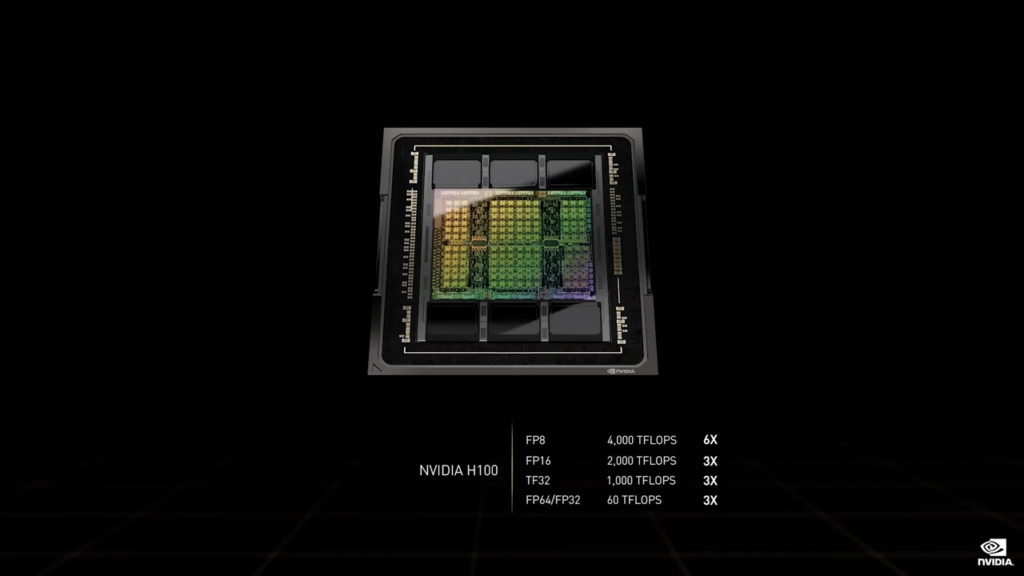

The H100 GPU is a premier choice for intensive tasks such as generative AI and large language models (LLMs), which demand large memory capacity and high speed. With at least 3X the performance of its predecessor, the A100, it excels in both training and inference.

To explore its capabilities, access the H100 GPU Cloud today or reserve your cluster on Arkane Cloud. The platform offers flexible contracts tailored to your requirements, so you can use the H100 for as long as you need it.

The H100 on Arkane Cloud also improves efficiency and cost-effectiveness. It provides nearly double the performance of previous-generation GPUs on specific tasks, which streamlines the development and scaling of LLMs.

Why Choose Us

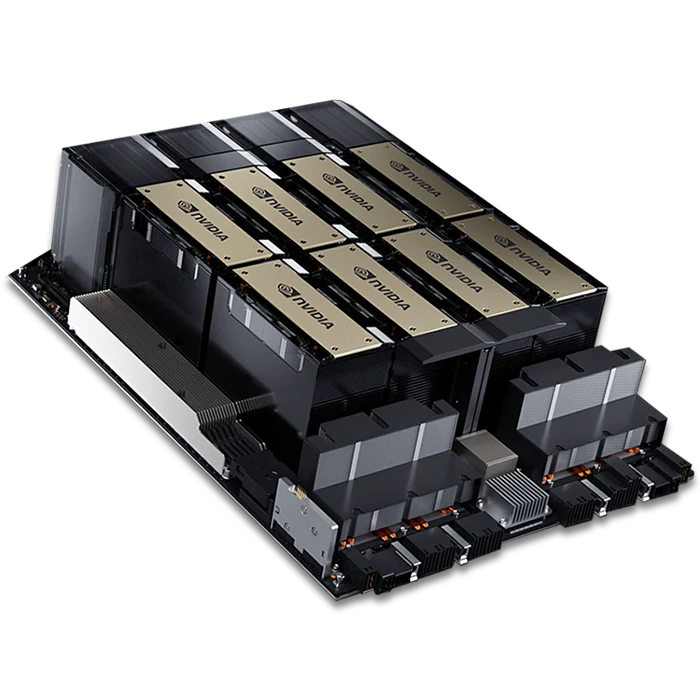

Rising demand for HPC resources, driven by ML training, deep learning, and AI inference, has made powerful GPU resources hard for organizations to acquire. Whether you work in data science, machine learning, or GPU-based high-performance computing, accessing our H100 resources is straightforward.

Experience network speeds of up to 3.2 Tbps for generative AI training, inference, and HPC tasks. Availability is limited, so reserve your NVIDIA H100 servers today!

Take Action: Reserve Your H100 Servers

Get Started

Ready to deploy your AI workloads? Contact us today to explore how our NVIDIA H100 solutions can power your projects with speed, efficiency, and reliability.