Advanced Compute and Simulation with Nvidia H100

An Order-of-Magnitude Leap for Accelerated Computing

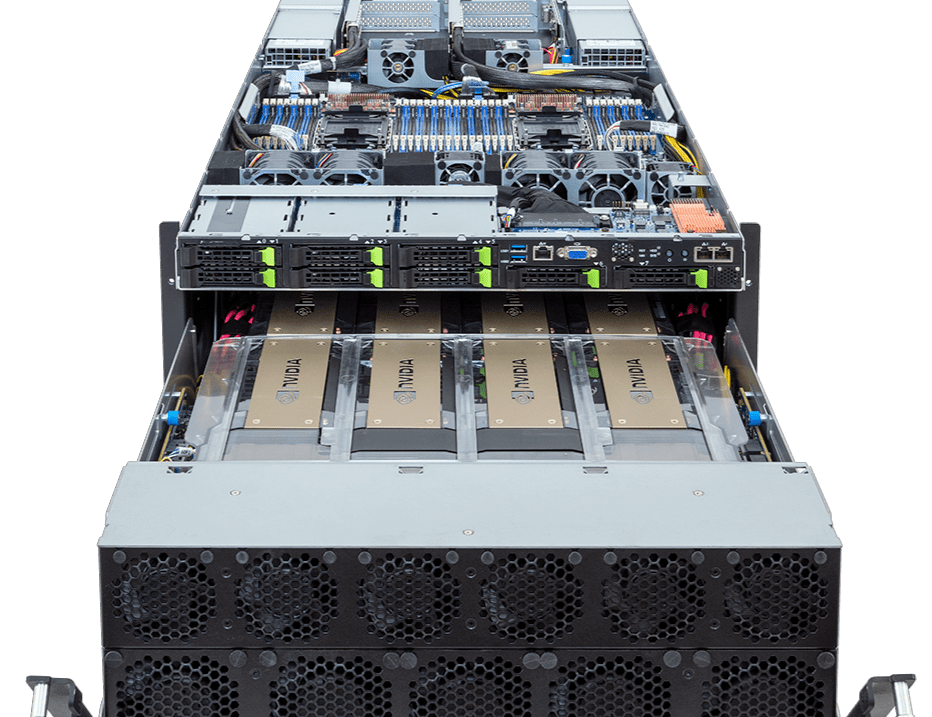

The Nvidia H100 Tensor Core GPU is not just an incremental update; it is a leap forward in accelerated computing, delivering gains in performance, scalability, and security across diverse workloads. Up to 256 H100 GPUs can be connected through the NVLink® Switch System, a configuration aimed at exascale workloads. A standout feature is the dedicated Transformer Engine, built to handle trillion-parameter language models; it speeds up large language models (LLMs) by up to 30X compared to the previous generation. This enhancement is crucial for delivering cutting-edge conversational AI.

Revolutionizing Large Language Models (LLMs)

The H100 takes LLMs to new heights, supporting models up to 175 billion parameters. The PCIe-based H100 NVL with NVLink bridge combines the Transformer Engine, NVLink, and 188GB of HBM3 memory to deliver performance and scalability across data centers. Servers equipped with H100 NVL GPUs boost GPT-175B model performance by up to 12X over NVIDIA DGX™ A100 systems while maintaining low latency in power-constrained data center environments.

Unmatched Training Efficiency for AI Models

H100’s fourth-generation Tensor Cores and Transformer Engine with FP8 precision deliver up to 4X faster training for GPT-3 (175B) models over the previous generation. Fourth-generation NVLink, offering 900 GB/s of GPU-to-GPU interconnect; NDR Quantum-2 InfiniBand networking; PCIe Gen5; and NVIDIA Magnum IO™ software together enable efficient scalability, from small enterprise systems to extensive, unified GPU clusters, making the H100 a versatile tool for diverse AI training needs.
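To put the 900 GB/s NVLink figure in perspective, the sketch below estimates the best-case time to move a payload across a link running at its peak rate. The function name, the 350 GB payload, and the 128 GB/s value used for a PCIe Gen5 x16 link are illustrative assumptions rather than figures from this article, and real transfers fall short of peak because of protocol overhead and topology.

```python
def ideal_transfer_seconds(payload_gb: float, link_gb_per_s: float) -> float:
    """Best-case transfer time: payload size divided by peak link bandwidth."""
    if link_gb_per_s <= 0:
        raise ValueError("link bandwidth must be positive")
    return payload_gb / link_gb_per_s


NVLINK_GB_PER_S = 900.0     # fourth-generation NVLink figure quoted in the text
PCIE_GEN5_GB_PER_S = 128.0  # assumption: commonly quoted peak for a x16 link

payload_gb = 350.0  # hypothetical payload, e.g. a large set of model weights

for name, bandwidth in (("NVLink", NVLINK_GB_PER_S), ("PCIe Gen5", PCIE_GEN5_GB_PER_S)):
    seconds = ideal_transfer_seconds(payload_gb, bandwidth)
    print(f"{name}: {seconds:.2f} s at peak")
```

These are lower bounds only; they say nothing about latency or how many links a given server actually exposes.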

Elevating Inference and Data Analytics

In the realm of AI inference, the H100 brings significant advancements, accelerating inference by up to 30X and delivering the lowest latency. The fourth-generation Tensor Cores speed up all precisions, including FP64, TF32, FP32, FP16, INT8, and now FP8. Lower-precision formats such as FP8 reduce memory usage and increase performance while maintaining accuracy for LLMs, making the H100 a cornerstone in modern AI inference tasks.
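The memory effect of precision can be sketched with simple arithmetic: the space needed for a model's weights is the parameter count times the bytes per parameter. The function name below is hypothetical, and the estimate covers weights only; activations, key-value caches, and optimizer state are deliberately left out. TF32 is omitted because it is a compute format rather than a storage format.

```python
# Storage size of one parameter in each format, in bytes.
BYTES_PER_PARAM = {"FP64": 8, "FP32": 4, "FP16": 2, "INT8": 1, "FP8": 1}


def weight_footprint_gb(num_params: int, precision: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


GPT3_PARAMS = 175_000_000_000  # the 175-billion-parameter size cited in the text

for precision in ("FP32", "FP16", "FP8"):
    print(f"{precision}: {weight_footprint_gb(GPT3_PARAMS, precision):.0f} GB")
```

Under these assumptions, storing the weights in FP8 takes half the space of FP16, which is why lower precision matters for fitting large models into a fixed amount of HBM3.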

Furthermore, the H100 stands out in the field of data analytics, a crucial part of AI application development. It confronts the challenge of large datasets scattered across multiple servers, where traditional CPU-only servers falter due to a lack of scalable computing performance. The H100, with its 3 TB/s of memory bandwidth per GPU and enhanced scalability features like NVLink and NVSwitch™, is uniquely capable of managing data analytics with high performance and efficiency. This capability is further bolstered by NVIDIA Quantum-2 InfiniBand, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, making the H100 an unparalleled solution for massive data analytics workloads.

Optimizing Data Center Resource Utilization

In data centers, maximizing the utilization of compute resources is a critical goal. The H100’s second-generation Multi-Instance GPU (MIG) technology enables IT managers to achieve this by securely partitioning each GPU into as many as seven separate instances. This feature not only maximizes utilization but also supports confidential computing, allowing secure, end-to-end, multi-tenant usage. This capability is especially beneficial in cloud service provider (CSP) environments, where optimal utilization and security are paramount.

The Power of H100 in AI and Machine Learning

The Nvidia H100 Tensor Core GPU has emerged as a groundbreaking force in the AI and machine learning landscape. Its capabilities, particularly in handling large language models (LLMs) and generative AI, are reshaping what’s possible in these fields.

One of the most notable achievements of the H100 is its performance in industry-standard benchmarks. In a recent MLPerf benchmark, a cluster of 3,584 H100 GPUs, hosted by the cloud service provider CoreWeave, completed a massive GPT-3-based benchmark in just 11 minutes. This feat demonstrates not only the raw power of the H100 but also its ability to handle large-scale AI tasks efficiently.

The H100’s excellence extends across all eight tests in the latest MLPerf training benchmarks, especially in generative AI. This performance is significant because it is delivered both per accelerator and at scale in massive servers, highlighting the H100’s adaptability and scalability in varied AI environments. A prime example is CoreWeave’s implementation, where the cluster of H100 GPUs, co-developed with startup Inflection AI, showcased state-of-the-art generative AI and LLM capabilities.

Moreover, the H100 has shown unparalleled performance across a spectrum of AI tasks, including large language models, recommenders, computer vision, medical imaging, and speech recognition. This versatility is crucial in an era where diverse AI applications are the norm, not the exception.

The training capabilities of the H100 are particularly noteworthy. Training large AI models typically requires numerous GPUs working in tandem, and the H100 has set new performance records in this regard. With optimizations across the full technology stack, the H100 has enabled near-linear performance scaling on demanding LLM tests, ranging from hundreds to thousands of GPUs.
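One way to make "near-linear scaling" concrete is scaling efficiency: the measured speedup divided by the ideal speedup implied by the added GPUs. The sketch below is a minimal illustration; the function name and all timings are hypothetical and are not MLPerf results.

```python
def scaling_efficiency(base_gpus: int, base_minutes: float,
                       scaled_gpus: int, scaled_minutes: float) -> float:
    """Measured speedup divided by ideal speedup; 1.0 means perfectly linear."""
    measured_speedup = base_minutes / scaled_minutes
    ideal_speedup = scaled_gpus / base_gpus
    return measured_speedup / ideal_speedup


# Hypothetical training times for the same job at three cluster sizes.
runs = [(512, 64.0), (1024, 33.0), (2048, 17.5)]

base_gpus, base_minutes = runs[0]
for gpus, minutes in runs[1:]:
    eff = scaling_efficiency(base_gpus, base_minutes, gpus, minutes)
    print(f"{gpus} GPUs: {eff:.1%} of linear scaling")
```

Values close to 100% correspond to the near-linear behavior described above; values well below it usually point to communication or input-pipeline bottlenecks.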

Furthermore, the performance delivered by CoreWeave from the cloud is comparable to that of an NVIDIA AI supercomputer in a local data center, thanks to the low-latency networking of the NVIDIA Quantum-2 InfiniBand. This achievement is a testament to the H100’s capability to deliver high-end performance in cloud-based environments, a critical consideration for modern AI applications.

Breaking Barriers in High-Performance Computing (HPC)

The Nvidia H100 GPU marks a significant evolution in the field of high-performance computing (HPC), pushing the boundaries of computational capabilities. Equipped with fourth-generation Tensor Cores and a dedicated Transformer Engine with FP8 precision, the H100 provides up to 4X faster training for GPT-3 (175B) models over the previous generation. This enhancement is vital for complex AI models that require extensive computation and precision.

The H100 is engineered for scalability, which is critical in HPC environments. It combines technologies such as fourth-generation NVLink, offering 900 GB/s of GPU-to-GPU interconnect, NDR Quantum-2 InfiniBand networking for accelerated communication across nodes, PCIe Gen5, and NVIDIA Magnum IO™ software. This combination ensures that the H100 can deliver efficient scalability, ranging from small enterprise systems to massive, unified GPU clusters.

One of the most groundbreaking aspects of the H100 is its capability to democratize access to exascale high-performance computing. Deploying H100 GPUs at a data center scale brings next-generation exascale computing and trillion-parameter AI models within the reach of researchers across various fields. This level of computational power was once reserved for only the most advanced research institutions, but with the H100, it’s now accessible to a broader range of scientific and academic communities.

In addition to its prowess in HPC, the H100 extends NVIDIA’s leadership in AI inference. It introduces several advancements that accelerate inference by up to 30X and deliver the lowest latency. The improvement in inference performance is crucial for applications that rely on real-time data processing and decision-making. The H100’s fourth-generation Tensor Cores speed up all precisions, including FP64, TF32, FP32, FP16, INT8, and now FP8. This advancement is essential for reducing memory usage and increasing performance while maintaining accuracy for large language models.

Overall, the Nvidia H100 GPU represents a leap forward in high-performance computing, offering unprecedented computational power, scalability, and efficiency. Its impact extends across various sectors, enabling new possibilities in research, data analysis, and AI model development.

Transformative Effects in Data Science and Analytics

In the realm of data science and analytics, the Nvidia H100 GPU stands as a transformative force, redefining the benchmarks of data processing and analysis. The H100 is specifically engineered to address the challenges posed by the ever-increasing scale and complexity of data in modern AI application development.

Data analytics, a core component of AI development, often consumes a significant portion of development time, primarily due to the handling of large datasets distributed across multiple servers. Traditional scale-out solutions, typically relying on commodity CPU-only servers, struggle under the weight of these large datasets due to a lack of scalable computing performance. This is where the H100 GPU comes into play. It is designed to deliver the necessary compute power – complemented by 3 terabytes per second (TB/s) of memory bandwidth per GPU – to manage these immense datasets effectively. This capability is further enhanced by technologies such as NVLink and NVSwitch™, providing the necessary scalability to address the demands of modern data analytics.

The introduction of new DPX instructions with the H100 represents another leap in data science capabilities. These instructions considerably speed up dynamic programming algorithms, a critical component in fields such as healthcare, robotics, quantum computing, and, most importantly, data science. The significance of these advancements cannot be overstated, as dynamic programming algorithms are at the heart of numerous data-intensive applications, from predictive modeling to statistical analyses.
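For readers unfamiliar with dynamic programming, the sketch below shows a representative example: the Smith-Waterman local alignment score for two DNA strings. It is plain Python running on the CPU and does not use DPX instructions; the choice of algorithm, the function name, and the scoring values are illustrative assumptions. The point is the shape of the inner step, a maximum taken over a few sums, which is the kind of recurrence that dynamic programming workloads repeat millions of times.

```python
def smith_waterman_score(a: str, b: str, match: int = 2,
                         mismatch: int = -1, gap: int = -2) -> int:
    """Best local alignment score of a and b with a linear gap penalty."""
    prev = [0] * (len(b) + 1)  # previous row of the scoring matrix
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            # Core recurrence: the max of three sums, floored at zero.
            curr[j] = max(0,
                          prev[j - 1] + sub,  # align a[i-1] with b[j-1]
                          prev[j] + gap,      # gap in b
                          curr[j - 1] + gap)  # gap in a
            best = max(best, curr[j])
        prev = curr
    return best


print(smith_waterman_score("GATTACA", "TTAC"))
```

Keeping only two rows of the matrix is enough when just the score is needed; recovering the alignment itself would require storing the full matrix or traceback pointers.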

Moreover, data scientists rely heavily on GPUs like the H100 for rapid data analysis and model training. While the H100 may have slightly less memory compared to some other models, it excels in data manipulation and analysis performance. This attribute makes it particularly suitable for handling big data projects, which are increasingly common in today’s data-driven world.

Overall, the Nvidia H100 GPU emerges as a pivotal tool in the data science and analytics landscape, offering unparalleled performance and scalability. Its ability to handle massive datasets with high efficiency and its improvements in dynamic programming algorithm processing represent a significant shift in how data science projects can be approached, leading to more effective and efficient outcomes.

Enhancing Cloud Computing Environments

The Nvidia H100 GPU’s introduction to the cloud computing environment marks a significant milestone in secure and efficient computing. Its capability to support confidential computing, a relatively new concept in cloud computing, is a groundbreaking development. Confidential computing refers to the protection of data in use by performing computation in a hardware-based, attested trusted execution environment (TEE). The H100 is the first-ever GPU to introduce support for this form of computing, offering a substantial upgrade in terms of security and data integrity.

In cloud environments, where multi-tenancy and data security are critical concerns, the H100 GPU provides a robust solution. It effectively isolates workloads in virtual machines (VMs) from the physical hardware and from each other, thus enhancing security. This advancement is crucial, considering the potential security risks like in-band attacks, side-channel attacks, and physical attacks that could compromise data and applications. Confidential computing with the H100 is a game-changer in this context, as it ensures the protection of sensitive data and intellectual property, which are often part of AI models and data sets used in cloud environments.

The implementation of confidential computing on the H100 required NVIDIA to develop new secure firmware and microcode, as well as enable confidential computing paths in the CUDA driver. This comprehensive approach establishes a complete solution encompassing both hardware and software aspects, thereby maintaining the integrity and protection of code and data.

Furthermore, the H100 GPU’s hardware security is anchored in the NVIDIA Hopper architecture, which includes the H100 Tensor Core GPU chip and 80 GB of High Bandwidth Memory 3 (HBM3). This architecture supports various modes of operation for confidential computing, including a standard operation mode (CC-Off) and a fully activated confidential computing mode (CC-On). In CC-On mode, all confidential computing features are active, enhancing security against potential attacks.

Operating the H100 GPU in confidential computing mode involves a collaborative process between the GPU and CPUs that support confidential VMs (CVMs). This process ensures that data and code are securely processed, with the CPU TEE extending its trust to the GPU, thereby enabling a secure environment for running CUDA applications. The NVIDIA driver plays a key role in this process, moving data to and from GPU memory through an encrypted buffer, thus maintaining the confidentiality of the data.

In summary, the Nvidia H100 GPU’s incorporation into cloud computing environments not only enhances computational power and efficiency but also brings a new level of security and trustworthiness to cloud-based AI and data processing tasks.

Impact on Various Industries and Research Areas

The Nvidia H100 GPU’s impact spans various industries and research areas, showcasing its versatility and power in advancing computational tasks across different fields.

A. Healthcare

In the healthcare sector, the H100’s new DPX instructions significantly speed up dynamic programming algorithms. This advancement is particularly beneficial in areas like DNA sequence alignment and protein alignment, crucial for understanding genetic diseases and developing new treatments. The ability to process vast amounts of genetic data rapidly can lead to breakthroughs in personalized medicine and faster drug discovery processes.

B. Robotics

In robotics, the H100 GPU accelerates the development and functioning of sophisticated algorithms that are essential for autonomous systems. These systems require real-time processing of vast sensory data to make quick decisions. The H100’s computational power enables more efficient and intelligent robotic systems, which can be applied in manufacturing, logistics, and even in personal care robots.

C. Data Science

In data science, the H100’s ability to handle large datasets and complex algorithms makes it an invaluable tool. It allows data scientists to analyze and process data more efficiently, leading to quicker insights and the ability to handle more complex models. This capability is vital for industries relying on big data, such as finance, marketing, and urban planning.

D. AI and Generative AI

The H100 GPU plays a critical role in AI, particularly in the training and deployment of large language models (LLMs) like OpenAI’s GPT models. These models, which are used for a variety of tasks including summarization, translation, and content generation, benefit from the H100’s enhanced training capabilities. In generative AI, models like Stable Diffusion, which generate images from text prompts, also gain from the H100’s powerful computational abilities.

E. Scientific Computing

In scientific computing, the H100 GPU contributes significantly to research areas like climate science, cosmology, and molecular modeling. For instance, it aids in climate atmospheric river identification, cosmology parameter prediction, and quantum molecular modeling. The H100’s computational power allows researchers to run more accurate simulations, leading to deeper insights and understanding in these complex fields.

In conclusion, the Nvidia H100 GPU’s diverse applications across these industries underline its role as a transformative technology. Its ability to accelerate and enhance computations is enabling advancements and innovations in fields critical to the future of technology and science.

Jun 10, 2024  By Julien Gauthier