Image Super-Resolution using AI Generative Models

The Evolution of Image Quality in the Digital Era

The history of image quality in the digital age is one of rapid technical change and lasting effects on visual media. In the late 1980s, the digital revolution began reshaping photography, moving it from analog methods reliant on chemical processes to digital technologies for image capture and storage. This transformation was fueled by the advent of consumer digital cameras and the introduction of Adobe Photoshop in 1990. Photoshop in particular extended the capabilities of traditional photography, allowing intricate manipulation of image structure and content and thereby challenging established norms of photographic authenticity.

As the new millennium unfolded, the impact of digital photography became increasingly evident. By the early 2000s, digital imagery had begun to dominate professional photography, with newspapers and magazines transitioning to digital workflows. This shift was propelled by the expediency of digital image transmission and editing, highlighting the growing preference for digital methods over traditional film photography.

However, it was the proliferation of smartphones, starting with Apple’s first iPhone in 2007, that truly democratized photography. These devices, coupled with social media platforms like Facebook, Twitter, and Instagram, made instantaneous image sharing possible and created an extensive archive of digital imagery capturing a myriad of moments and places. This ubiquity extended digital photography into commercial, governmental, and military domains, where it plays roles ranging from public surveillance to criminal identification through facial-recognition software.

The 21st century also witnessed the integration of photography into the broader digital communication and contemporary art realms. The convergence of still digital photographs with moving video images and the emergence of web design tools for animation and motion control have created a multifaceted creative space. In this era, photography is not just a standalone art form but a vital component of multimedia storytelling and digital communication, enhancing its significance as a visual medium.

This section of the article outlines the historical progression and pivotal moments that have defined the evolution of image quality in the digital era, setting the stage for the emergence and impact of AI in image super-resolution.

Understanding Image Super-Resolution

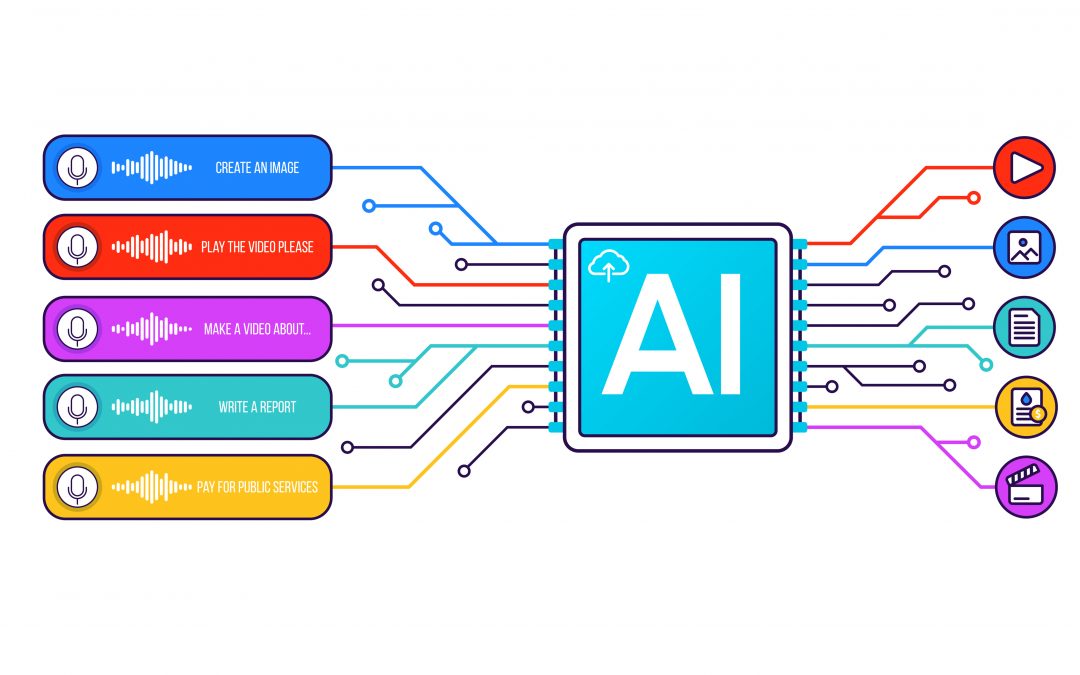

Super-resolution (SR) in digital imagery is the process of enhancing the resolution of an image. This enhancement, often called upsampling, increases the pixel density of an image with the aim of improving its clarity and detail. The step from a low-resolution (LR) image to a high-resolution (HR) one can be achieved through various methods, most of which now rely on machine learning and, more specifically, deep learning.

In the domain of SR, two primary categories exist: single-image and multi-image super-resolution. Single-image SR enhances the resolution of an individual image and is prone to artificial patterns because the input carries limited information. This can lead to inaccuracies, particularly in sensitive applications like medical imaging where precision is paramount. Multi-image SR, on the other hand, uses multiple LR images of the same scene or object to reconstruct a single HR image, typically yielding better performance because more information is available. However, this method is often hampered by higher computational demands and by the practical difficulty of obtaining multiple LR images.

Visual assessment alone is too subjective to evaluate SR methods. Widely used quantitative metrics such as Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index (SSIM) are therefore employed to measure and compare the performance of SR methods objectively.
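
To make the PSNR metric concrete, the following is a minimal sketch using only the Python standard library. It assumes 8-bit grayscale images stored as nested lists; the helper names `mse` and `psnr` and the small sample arrays are illustrative and do not come from any particular SR toolkit. In practice an array library would normally be used instead of plain lists.

```python
import math


def mse(reference, estimate):
    """Mean squared error between two equally sized grayscale images (lists of rows)."""
    if len(reference) != len(estimate) or any(
        len(r) != len(e) for r, e in zip(reference, estimate)
    ):
        raise ValueError("images must have the same shape")
    total = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        for r, e in zip(ref_row, est_row):
            total += (r - e) ** 2
            count += 1
    return total / count


def psnr(reference, estimate, max_value=255.0):
    """PSNR in decibels: 10 * log10(MAX^2 / MSE); infinite for identical images."""
    error = mse(reference, estimate)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)


# Illustrative 3x3 patches: a ground-truth HR patch and a reconstructed one.
hr = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
sr = [[54, 55, 60], [59, 75, 61], [76, 65, 59]]
print(f"PSNR: {psnr(hr, sr):.2f} dB")
```

A higher PSNR means the reconstruction is numerically closer to the reference. SSIM is computed differently, from local statistics of luminance, contrast, and structure, and is not covered by this sketch.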

Strategies in image SR differ mainly in where upsampling happens on the way to the final HR output. In pre-upsampling, the LR image is first upscaled to the required HR dimensions and then processed by a deep learning model, as exemplified by the VDSR network. Post-upsampling, in contrast, processes the image at LR size with a deep model and upscales to HR dimensions only at the end, a technique used in the FSRCNN model. Progressive upsampling takes a more gradual approach, particularly beneficial for large upscaling factors, in which the LR image is upscaled in stages until it reaches the HR size. The LapSRN model is a prime example of this approach, employing a cascade of convolutional networks to progressively predict and reconstruct HR images.
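
The difference between the three strategies is mostly a matter of ordering, which the sketch below tries to show. It is not an implementation of VDSR, FSRCNN, or LapSRN: `identity_model` is a hypothetical placeholder for a trained network, and nearest-neighbour upsampling stands in for whatever interpolation or learned upsampling layer a real model would use.

```python
def upsample_nearest(image, scale):
    """Nearest-neighbour upsampling of a grayscale image (list of rows) by an integer scale."""
    return [
        [pixel for pixel in row for _ in range(scale)]
        for row in image
        for _ in range(scale)
    ]


def identity_model(image):
    """Hypothetical placeholder for a trained network; returns a copy of its input."""
    return [row[:] for row in image]


def pre_upsampling_sr(lr, scale, model=identity_model):
    # Upscale first, then let the model refine at HR size.
    return model(upsample_nearest(lr, scale))


def post_upsampling_sr(lr, scale, model=identity_model):
    # Run the model at LR size and upscale as the last step.
    return upsample_nearest(model(lr), scale)


def progressive_sr(lr, scale, model=identity_model):
    # Repeated x2 stages, with a model pass after each stage.
    if scale < 1 or scale & (scale - 1):
        raise ValueError("scale must be a power of two")
    image = lr
    while scale > 1:
        image = model(upsample_nearest(image, 2))
        scale //= 2
    return image


lr = [[1, 2], [3, 4]]
hr = progressive_sr(lr, 4)
print(len(hr), len(hr[0]))
```

With the placeholder model all three functions return the same image. The ordering starts to matter once a real network is plugged in: a pre-upsampling model does all of its work on the larger HR grid, which is generally more expensive than working at LR size.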

These intricate methodologies showcase the versatility and complexity inherent in the field of image super-resolution, reflecting a blend of technological innovation and practical application challenges.

Applications and Impact of AI Super-Resolution

The field of AI-powered image super-resolution has seen a meteoric rise in practical applications and impact, largely due to advancements in deep learning techniques. This section explores the broad spectrum of real-world applications and the transformative impact of AI in super-resolution technology.

Revolutionizing Various Sectors with AI Super-Resolution

AI-driven image super-resolution is playing a pivotal role across multiple sectors. In medical imaging, it assists in enhancing the resolution of diagnostic images, contributing significantly to more accurate diagnoses and better patient care. The technology also finds critical applications in satellite imaging, enhancing the quality of images used in environmental monitoring and urban planning. Additionally, in fields like surveillance and security, super-resolution aids in obtaining clearer images, which is crucial for accurate monitoring and identification.

Advancements in Deep Learning Techniques

Deep learning models, particularly Convolutional Neural Networks (CNNs), Generative Adversarial Networks (GANs), and Autoencoders, have been instrumental in the progress of image super-resolution. These models learn from extensive datasets to recognize intricate patterns, thereby producing images that are more realistic and visually appealing than ever before.

Real-Time Image Enhancement

The development of real-time image enhancement is another significant stride in this domain. This technology is particularly beneficial in applications like video conferencing, surveillance, and autonomous vehicles. The capability to process video streams in real-time is largely thanks to advancements in GPU hardware and parallel processing techniques.

Low-light Image Enhancement

Low-light conditions pose a unique challenge in image processing. AI-based techniques are being actively developed to enhance the visibility of images captured under such conditions, improving brightness, contrast, and sharpness.

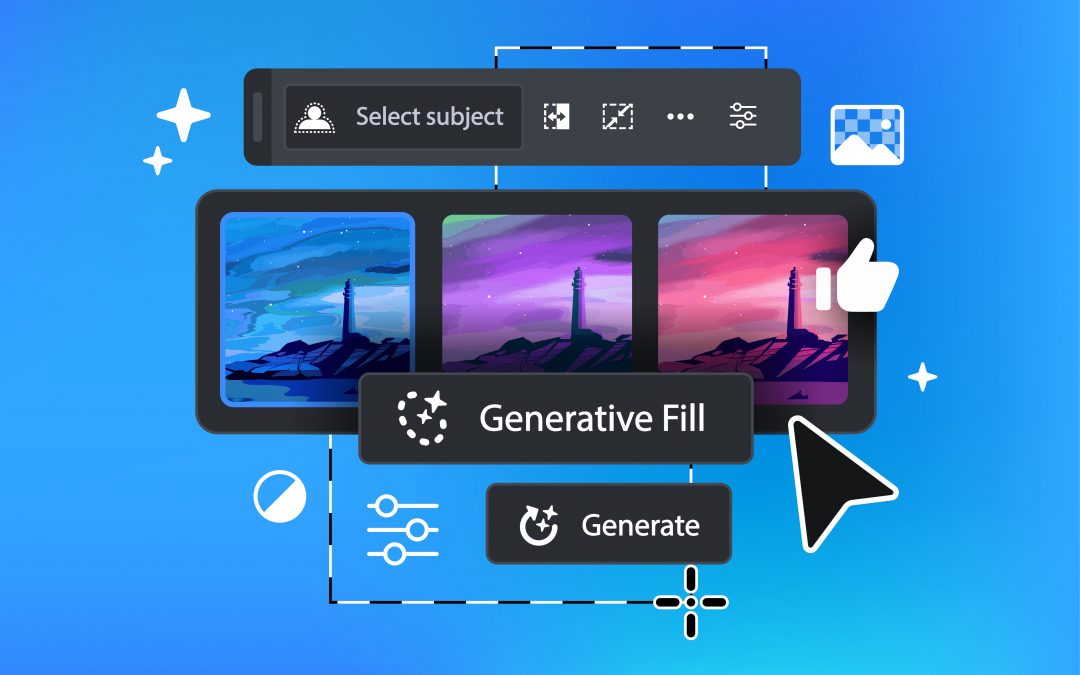

User-friendly AI Image Enhancement Platforms

Platforms like Deep-image.ai epitomize the user-friendliness and accessibility of AI in image enhancement. These platforms leverage deep learning techniques for various tasks including image denoising, super-resolution, and colorization, making high-quality image processing accessible to a broader audience.

Mobile Image Enhancement

The proliferation of mobile technology has led to a growing trend of developing AI-powered image enhancement algorithms for mobile devices. These algorithms are designed to improve the quality of images captured by mobile cameras, enhancing their color, sharpness, and contrast, thereby democratizing high-quality image processing.

Future Prospects

The advancements in deep learning and the availability of powerful hardware promise even more exciting developments in AI-powered image enhancement. The emergence of platforms like Deep-image.ai is just the beginning of what is anticipated to be a transformative revolution in image processing and super-resolution techniques.

This exploration of the applications and impact of AI in image super-resolution underscores the technology’s profound influence across diverse sectors and its potential for future advancements.

Real-Time and Mobile Image Enhancement with AI Super-Resolution

The advent of AI in the realm of image super-resolution (ISR) has ushered in a new era of possibilities, particularly in the context of real-time and mobile applications. This section delves into the latest advancements and the implications they hold for practical, everyday use.

Breakthroughs in Real-Time Super-Resolution on Mobile Devices

The development of AI-driven ISR models has marked a turning point in real-world image processing. Traditional deep learning-based ISR methods, while effective, have been limited by high computational demands, making them unsuitable for deployment on mobile or edge devices. However, recent innovations have led to the creation of ISR models that are not only computationally efficient but also tailored to handle a wide range of image degradations commonly encountered in real-world scenarios. These models, capable of real-time performance on mobile devices, are a leap forward in addressing the complexities of real-world image enhancement.

Addressing Complex Real-World Degradations

One of the significant challenges in mobile ISR is dealing with real-world degradations such as camera sensor noise, artifacts, and JPEG compression. The complexity of these degradations often renders conventional image processing techniques ineffective. To tackle this, novel data degradation pipelines have been developed that aim to produce LR training data more faithful to real scenes. This approach accounts for the multifaceted nature of real-world degradations and improves the performance of ISR in practical applications.
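
As a rough illustration of what a degradation pipeline does, the sketch below turns a clean HR image into a degraded LR one by chaining blur, downsampling, sensor-like noise, and a coarse quantization step. Every stage and parameter here is an assumption chosen for illustration: the source does not describe the actual pipeline, and the quantization is only a crude stand-in for compression loss, not JPEG.

```python
import random


def box_blur(image):
    """3x3 box blur with edge replication."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += image[yy][xx]
            row.append(acc / 9.0)
        out.append(row)
    return out


def downsample(image, scale):
    """Keep every scale-th pixel in both directions."""
    return [row[::scale] for row in image[::scale]]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise to every pixel."""
    return [[p + rng.gauss(0.0, sigma) for p in row] for row in image]


def quantize(image, step):
    """Round to multiples of step and clip to [0, 255] (crude compression stand-in)."""
    return [
        [min(255, max(0, int(round(p / step) * step))) for p in row]
        for row in image
    ]


def degrade(hr, scale=2, sigma=5.0, step=8, seed=0):
    """Hypothetical HR -> LR degradation: blur, downsample, noise, quantize."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    lr = box_blur(hr)
    lr = downsample(lr, scale)
    lr = add_gaussian_noise(lr, sigma, rng)
    return quantize(lr, step)


# Illustrative 8x8 test pattern.
hr_image = [[(x * 32 + y * 4) % 256 for x in range(8)] for y in range(8)]
lr_image = degrade(hr_image)
print(len(lr_image), len(lr_image[0]))
```

Pairs of `hr_image` and `lr_image` produced this way are the kind of training data an ISR model could learn from. The closer the synthetic degradations are to what a real camera produces, the better the model can be expected to carry over to real photos.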

Achieving High-Performance ISR on Mobile Devices

Recent advancements have led to the creation of lightweight models specifically designed for mobile devices. These models, such as InnoPeak_mobileSR, are optimized for computational efficiency: they require significantly fewer parameters and FLOPs and can process an image in milliseconds. This breakthrough allows for high-performance ISR on mobile devices, with the potential to reach up to 50 frames per second in video super-resolution (VSR) applications. Such models demonstrate not only improved perceptual quality but also performance comparable or superior to baseline and state-of-the-art ISR methods.
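
The 50 frames per second figure translates directly into a per-frame time budget, which the short sketch below works out. The function names and the latency values passed in are illustrative assumptions, not measurements of any model.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def meets_real_time(latency_ms, target_fps):
    """True if a per-frame latency fits within the budget of the target frame rate."""
    return latency_ms <= frame_budget_ms(target_fps)


print(frame_budget_ms(50))        # budget per frame at 50 fps
print(meets_real_time(15.0, 50))  # hypothetical 15 ms model
print(meets_real_time(25.0, 50))  # hypothetical 25 ms model
```

At 50 fps each frame must be fully processed within 20 ms, so a model counts as real-time for VSR only if its end-to-end per-frame latency, including any pre- and post-processing, stays below that budget.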

These developments in real-time and mobile image enhancement signify a pivotal shift in how AI super-resolution is applied, making it more accessible and effective for everyday use.

Real-Time Enhancement and Mobile Applications

Real-Time Image Enhancement

The emergence of real-time image enhancement, powered by artificial intelligence (AI), marks a significant advancement in the field of image processing. It focuses on enhancing video streams as they arrive, which makes it well suited to applications such as video conferencing, surveillance, and autonomous vehicles. Processing these video streams in real time has been made possible by advancements in GPU hardware and parallel processing techniques.

Mobile Image Enhancement

Parallel to the developments in real-time enhancement, there has been a significant surge in AI-powered image enhancement algorithms tailored for mobile devices. These algorithms are engineered to augment the quality of images captured by mobile cameras, focusing on improvements in color, sharpness, and contrast.

The increasing ubiquity of smartphones in everyday life, combined with continuous advancements in camera technology and imaging pipelines, has led to an exponential increase in the number of images captured. Although modern smartphones produce high-quality images, those images often suffer from artifacts or degradation caused by the limitations of small cameras and lenses. To address these issues, deep learning methods have been applied to image restoration, effectively removing artifacts such as noise, diffraction, blur, and HDR overexposure. However, the high computational and memory requirements of these methods pose challenges for real-time applications on mobile devices.

To tackle these challenges, recent advancements include the development of LPIENet, a lightweight network for perceptual image enhancement specifically designed for smartphones. This model addresses the limitations of previous approaches by requiring fewer parameters and operations, thus making it suitable for real-time applications. Deployed on commercial smartphones, LPIENet has demonstrated the capability to process 2K resolution images in under a second on mid-level devices.

In addition to the technical requirements for real-time enhancement, image restoration algorithms integrated into cameras must meet rigorous standards in terms of quality, robustness, computational complexity, and execution time. These algorithms are required to consistently improve the input image under any circumstances.

While deep learning-based image restoration algorithms have shown great potential, many of them fail to meet the necessary criteria for integration into modern smartphones due to their computational complexity. This has led to a shift in focus towards developing more efficient algorithms, such as the lightweight U-Net architecture characterized by the inverted residual attention (IRA) block. These architectures are optimized for parameter usage and computational cost, allowing for real-time performance on current smartphone GPUs at FullHD image resolution.
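
To give a feel for why parameter and operation counts matter at FullHD, the sketch below estimates the cost of a single convolution layer at 1920x1080 and compares a standard 3x3 convolution with a depthwise-separable one. This is a generic back-of-the-envelope estimate, not a description of the IRA block, whose exact layers are not given here. The channel width of 16 is an arbitrary illustrative choice, and depthwise-separable convolution is used only because it is a common ingredient of lightweight mobile designs.

```python
def conv_params(c_in, c_out, kernel, groups=1):
    """Number of weights in a 2D convolution, ignoring biases."""
    return (c_in // groups) * c_out * kernel * kernel


def conv_macs(height, width, c_in, c_out, kernel, groups=1):
    """Multiply-accumulate operations for a stride-1, same-padded convolution."""
    return height * width * conv_params(c_in, c_out, kernel, groups)


HEIGHT, WIDTH = 1080, 1920  # FullHD
CHANNELS = 16               # illustrative width, not taken from any published model

# Standard 3x3 convolution, CHANNELS -> CHANNELS.
standard_macs = conv_macs(HEIGHT, WIDTH, CHANNELS, CHANNELS, 3)

# Depthwise 3x3 (one filter per channel) followed by a pointwise 1x1 convolution.
separable_macs = (
    conv_macs(HEIGHT, WIDTH, CHANNELS, CHANNELS, 3, groups=CHANNELS)
    + conv_macs(HEIGHT, WIDTH, CHANNELS, CHANNELS, 1)
)

print(f"standard:  {standard_macs / 1e9:.2f} GMACs")
print(f"separable: {separable_macs / 1e9:.2f} GMACs")
print(f"ratio:     {standard_macs / separable_macs:.2f}x")
```

Because the cost of every layer is multiplied by roughly two million pixel positions at FullHD, even modest per-layer savings add up quickly across a whole network.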

These advancements in real-time image enhancement and mobile applications reflect the continuous evolution of AI in image processing, with a clear trend towards developing more efficient and powerful algorithms capable of operating on a variety of platforms, including mobile devices.