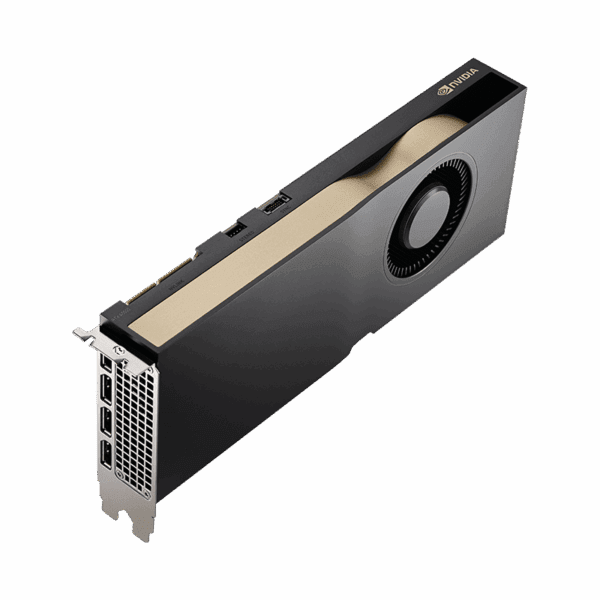

RTX A5000: An Optimized GPU for Machine Learning and Rendering

A Closer Look at the NVIDIA RTX A5000

Selecting the best GPU for deep learning and high-performance computing involves balancing performance, efficiency, and cost. The NVIDIA RTX A5000 stands out as a versatile choice for various applications, but it’s essential to understand its capabilities and ideal use cases.

Overview of the NVIDIA RTX A5000

Built on NVIDIA’s Ampere architecture, the RTX A5000 offers substantial performance improvements over previous generations. It is aimed at designers, engineers, artists, and researchers. Unlike the newer Hopper GPUs, which are designed specifically for AI, the A5000 targets a broad mix of graphics, rendering, and compute workloads.

This article covers the RTX A5000’s specifications, applications, and pricing.

Specifications of the NVIDIA RTX A5000

The NVIDIA RTX A5000 is built on the GA102 GPU, part of the Ampere architecture, and is manufactured on an 8 nm process. The die packs 28.3 billion transistors into 628 mm², giving it a large pool of parallel processing resources.

Key specifications include:

- 64 Streaming Multiprocessors (SMs): Each SM contains 128 CUDA cores, totaling 8,192 CUDA cores, which handle most computations for graphics rendering, AI acceleration, and general-purpose tasks.

- 256 Tensor Cores: These accelerate the matrix operations at the heart of deep learning and support mixed-precision computing, which raises throughput.

- 64 RT Cores: Dedicated to real-time ray tracing, these cores enhance visual effects in 3D graphics by efficiently simulating light behavior.

- 24 GB GDDR6 Memory: Keeps large datasets and models resident on the card, reducing the need for frequent data swaps.

- 384-bit Memory Interface: Provides a memory bandwidth of 768 GB/s, facilitating real-time data processing and complex simulations.
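The headline figures in this list follow from simple arithmetic, which the minimal sketch below reproduces. The SM count, cores per SM, and bus width come from the list above. The 16 Gbit/s per-pin data rate is not stated in this article; it is an assumption added here so that the bandwidth figure can be derived.

```python
# Figures quoted in the specification list above.
SM_COUNT = 64
CUDA_CORES_PER_SM = 128
BUS_WIDTH_BITS = 384

# Assumption (not stated in the article): effective GDDR6 data rate per pin.
ASSUMED_DATA_RATE_GBPS = 16


def total_cuda_cores(sm_count: int, cores_per_sm: int) -> int:
    """Total CUDA cores = number of SMs x CUDA cores per SM."""
    return sm_count * cores_per_sm


def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s = bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    print(total_cuda_cores(SM_COUNT, CUDA_CORES_PER_SM))                   # 8192
    print(memory_bandwidth_gb_s(BUS_WIDTH_BITS, ASSUMED_DATA_RATE_GBPS))   # 768.0
```

Both results match the 8,192 CUDA cores and 768 GB/s quoted above. The bandwidth is a theoretical peak; sustained throughput in real workloads is typically lower.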

Applications of the NVIDIA RTX A5000

The NVIDIA RTX A5000 is a powerful professional graphics card designed for demanding workloads such as 3D rendering, video editing, visual effects, and AI-powered applications. It is also used in scientific visualization, data science, and virtual reality environments.

Deep Learning Acceleration

The A5000’s Tensor Cores accelerate deep learning by executing matrix operations in parallel. With mixed-precision computing, inputs are held in a lower-precision format while results are accumulated at higher precision, which raises throughput for both training and inference.
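To make the idea of mixed precision concrete, here is a small CPU-side illustration of the numerics. It is not how Tensor Cores are programmed; it only emulates half-precision (FP16) rounding with Python's standard `struct` module. The helper names (`to_fp16`, `to_fp32`, `dot_fp16`, `dot_mixed`) are hypothetical and exist only for this sketch. It compares a dot product that accumulates in FP16 with one that keeps FP16 inputs but accumulates in FP32.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot_fp16(a, b):
    """Dot product with FP16 inputs and an FP16 accumulator."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + product)
    return acc


def dot_mixed(a, b):
    """Dot product with FP16 inputs and an FP32 accumulator (mixed precision)."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)
        acc = to_fp32(acc + product)
    return acc


if __name__ == "__main__":
    ones = [1.0] * 4096
    print(dot_fp16(ones, ones))   # 2048.0 (FP16 cannot represent 2049, so the sum stalls)
    print(dot_mixed(ones, ones))  # 4096.0
```

The FP16 accumulator stops growing at 2048 because half precision has too few significand bits to represent 2049. Keeping the inputs small while accumulating at higher precision avoids that loss, which is the trade-off mixed precision is built around.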

Ray Tracing Efficiency

RT Cores compute intersections between light rays and scene geometry in dedicated hardware. Offloading this work, which underlies lighting, shadow, and reflection calculations, from the CUDA cores allows real-time, high-fidelity rendering.
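For readers unfamiliar with what a "ray intersection" involves, the sketch below implements the well-known Möller–Trumbore ray-triangle test in plain Python. It only illustrates the kind of calculation involved and makes no claim about how the hardware implements it internally. The function and helper names are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ray_triangle_distance(
    origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3, eps: float = 1e-9
) -> Optional[float]:
    """Return the distance t along the ray to the hit point, or None on a miss."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:
        return None  # Ray is parallel to the triangle's plane.
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det  # First barycentric coordinate.
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det  # Second barycentric coordinate.
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None  # Ignore hits behind the ray origin.


if __name__ == "__main__":
    tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(ray_triangle_distance((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *tri))  # 1.0
    print(ray_triangle_distance((2.0, 2.0, 1.0), (0.0, 0.0, -1.0), *tri))    # None
```

A rendered frame can require a very large number of such tests, which is why moving them off the general-purpose cores matters.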

Memory and Bandwidth

The A5000’s 24 GB of GDDR6 memory and 384-bit interface let it keep extensive datasets and complex simulations resident on the card, reducing transfers to and from system memory and speeding up operations.
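A rough way to reason about these numbers is a back-of-the-envelope memory budget. The sketch below is a simplification under stated assumptions: it uses decimal gigabytes (10^9 bytes), counts only model weights (no activations, optimizer state, or framework overhead), and uses a hypothetical 7-billion-parameter model purely as an example. The 24 GB and 768 GB/s figures are the ones quoted in this article.

```python
VRAM_GB = 24           # Memory capacity quoted in the article.
BANDWIDTH_GB_S = 768   # Peak memory bandwidth quoted in the article.

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weights_size_gb(num_params: int, precision: str) -> float:
    """Size of the model weights alone, in decimal GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_vram(num_params: int, precision: str, vram_gb: float = VRAM_GB) -> bool:
    """True if the weights alone fit; real workloads need extra headroom."""
    return weights_size_gb(num_params, precision) <= vram_gb


def full_sweep_seconds(
    vram_gb: float = VRAM_GB, bandwidth_gb_s: float = BANDWIDTH_GB_S
) -> float:
    """Time to read the whole memory once at peak bandwidth."""
    return vram_gb / bandwidth_gb_s


if __name__ == "__main__":
    hypothetical_params = 7_000_000_000  # Example size only, not from the article.
    print(weights_size_gb(hypothetical_params, "fp16"))  # 14.0
    print(fits_in_vram(hypothetical_params, "fp16"))     # True
    print(fits_in_vram(hypothetical_params, "fp32"))     # False
    print(full_sweep_seconds())                          # 0.03125
```

Under these assumptions, halving the precision is the difference between the example model fitting on the card and not fitting, and reading the full 24 GB once takes about 31 ms at the peak rate.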

Pricing and Availability

The NVIDIA RTX A5000 offers good value for professionals, balancing cost and performance. Pricing on Arkane Cloud starts at $1 per hour or $730 per month, making it a cost-efficient option for various applications. Get access to our RTX A5000.
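To compare the two quoted prices, the sketch below computes the break-even point between the hourly and monthly rates. It assumes the hourly plan is billed strictly per hour used and ignores taxes, minimum commitments, and any other fees, none of which are described in this article.

```python
HOURLY_RATE_USD = 1.0     # Quoted hourly price.
MONTHLY_RATE_USD = 730.0  # Quoted monthly price.


def break_even_hours(hourly: float = HOURLY_RATE_USD,
                     monthly: float = MONTHLY_RATE_USD) -> float:
    """Hours of use per month at which both plans cost the same."""
    return monthly / hourly


def cheaper_plan(hours_used: float,
                 hourly: float = HOURLY_RATE_USD,
                 monthly: float = MONTHLY_RATE_USD) -> str:
    """Return 'hourly', 'monthly', or 'equal' for a given monthly usage."""
    hourly_cost = hours_used * hourly
    if hourly_cost < monthly:
        return "hourly"
    if hourly_cost > monthly:
        return "monthly"
    return "equal"


if __name__ == "__main__":
    print(break_even_hours())   # 730.0
    print(365 * 24 / 12)        # 730.0, the average number of hours in a month
    print(cheaper_plan(200))    # hourly
    print(cheaper_plan(744))    # monthly (a 31-day month of continuous use)
```

The break-even point of 730 hours equals the average number of hours in a month, so under these assumptions the monthly price corresponds to running the GPU around the clock, and the hourly rate is cheaper for anything less than continuous use.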

Is the RTX A5000 Good for Deep Learning?

The RTX A5000 is well suited to deep learning thanks to its 24 GB of memory, its Tensor Cores, and its support for CUDA, NVIDIA’s parallel computing platform. Together these let it train and deploy complex deep learning models efficiently.

Other Use Cases and Applications

- Gaming: The A5000 handles 4K resolution smoothly, offering enhanced visuals and frame rates with DLSS technology.

- Professional Applications: In fields like architecture, engineering, and media production, the A5000’s high memory capacity and processing power enable faster rendering and more complex simulations.

- VR and AR: The A5000’s capabilities ensure high frame rates and low latency, crucial for developing immersive experiences and simulations in virtual and augmented reality.

Jun 26, 2024  By Julien Gauthier