High-Performance Computing with the Nvidia H100

Introduction

The evolution of high-performance computing (HPC) has been nothing short of revolutionary. At the core of this transformation lies the Graphics Processing Unit (GPU), now a key accelerator for complex computations across many domains. Nvidia popularized the term GPU in 1999 with the GeForce 256, which it marketed as the first GPU and which performed geometry transformation and lighting in dedicated hardware for 3D rendering. This was a pivotal moment: by taking over work that had previously fallen to the CPU, the GPU began the journey that would later carry it beyond graphics into general-purpose computation.

GPUs have since become the bedrock of artificial intelligence and machine learning, supplying the parallel processing power that today's demanding applications require. Before GPUs were widely used, training machine learning models was slow, which limited the size of the models and datasets that could practically be used and, with them, the accuracy achievable on complex tasks. GPUs changed this by making it feasible to train large neural networks quickly, and deep learning models trained this way now underpin technologies such as autonomous driving and facial recognition.

The adoption of GPUs in HPC is one of the most significant trends in enterprise technology today. Combined with cloud computing, GPUs make workloads that were once reserved for supercomputers available on demand. This combination saves time and can significantly reduce costs, making it valuable across many sectors.

GPUs can also outperform multiple CPUs on tasks that parallelize well. For example, some processing jobs on high-resolution images or video that could take a single CPU years can, in favorable cases, be completed in about a day on a few GPUs, because the same operation is applied independently to millions of pixels. This ability to process massive volumes of data at high speed has made previously impractical tasks feasible.

In 2006, Nvidia introduced CUDA, a parallel computing platform and programming model that lets developers use GPUs for general-purpose computation. With CUDA, a large problem is partitioned into many smaller, independent tasks that run in parallel across the GPU's cores, harnessing Nvidia's GPU architecture for applications ranging from scientific research to real-time data processing.
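
To make the idea of partitioning concrete, here is a minimal Python sketch (not CUDA itself) that emulates how a one-dimensional CUDA kernel launch maps a grid of thread blocks onto the elements of an array. The names `vector_add_kernel`, `launch`, `block_dim` and `grid_dim` are hypothetical stand-ins for a `__global__` function and CUDA's `blockDim`/`gridDim`; on a real GPU the loops over blocks and threads run in parallel rather than one after another.

```python
import math
from typing import List


def vector_add_kernel(block_idx: int, thread_idx: int, block_dim: int,
                      a: List[float], b: List[float], out: List[float]) -> None:
    """Body of one emulated 'thread': computes a single output element."""
    # Same global-index formula a CUDA kernel uses:
    # blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the last block may be only partially used
        out[i] = a[i] + b[i]


def launch(a: List[float], b: List[float], block_dim: int = 256) -> List[float]:
    """Emulate a 1-D kernel launch with enough blocks to cover every element."""
    n = len(a)
    grid_dim = math.ceil(n / block_dim)  # number of thread blocks
    out = [0.0] * n
    # On a GPU these iterations are independent and run concurrently across SMs.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out)
    return out


if __name__ == "__main__":
    n = 1000
    a = [float(i) for i in range(n)]
    b = [2.0 * i for i in range(n)]
    result = launch(a, b)
    print(result[:3], result[-1])  # [0.0, 3.0, 6.0] 2997.0
```

Because every element is computed independently, the order in which blocks execute does not matter, which is what allows the hardware to spread them across many streaming multiprocessors.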

As we delve deeper into the specifics of the Nvidia H100 and its impact on high-performance computing, it is worth keeping this history in mind. The H100 is one of the most recent steps in this lineage, built in pursuit of ever greater computational performance and efficiency.

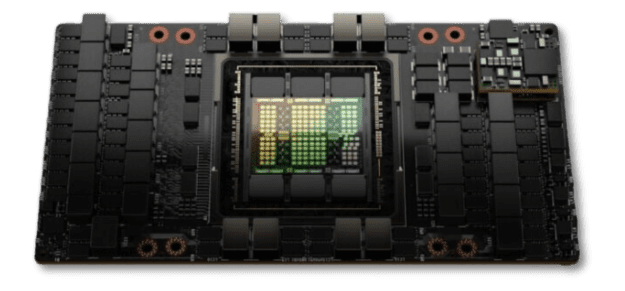

Overview of Nvidia H100 GPU

The NVIDIA H100 Tensor Core GPU, representing the ninth generation in NVIDIA’s lineup, is a groundbreaking advancement in data center GPU technology. Designed to significantly elevate performance for AI and HPC applications, the H100 leapfrogs its predecessor, the A100, in terms of efficiency and architectural design. This transformative step is particularly evident in mainstream AI and HPC models, where the H100, equipped with InfiniBand interconnect, achieves up to 30 times the performance of the A100. The introduction of the NVLink Switch System further amplifies its capabilities, targeting complex computing workloads requiring model parallelism across multiple GPU nodes.

In 2022, NVIDIA announced the NVIDIA Grace Hopper Superchip, which combines the H100 GPU with the NVIDIA Grace CPU. Designed for terabyte-scale accelerated computing, it offers up to a tenfold performance boost for large-model AI and HPC. The two chips are joined by NVLink-C2C, an ultra-fast chip-to-chip interconnect that delivers 900 GB/s of total bandwidth, about 7x that of the PCIe Gen 5 links commonly used in current servers.
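
A quick back-of-the-envelope calculation shows what the 900 GB/s figure means in practice. This sketch assumes a PCIe Gen 5 x16 link at its raw signaling rate of 32 GT/s per lane (about 64 GB/s per direction, 128 GB/s in both directions, ignoring encoding and protocol overhead) as the comparison point, and assumes that a one-way copy can use only half of a link's total (bidirectional) bandwidth. The 1 TB payload is an arbitrary illustrative size.

```python
# Assumed link parameters; "total" means both directions combined.
NVLINK_C2C_TOTAL_GB_S = 900.0   # figure quoted for Grace Hopper
PCIE_GEN5_LANES = 16
PCIE_GEN5_GT_PER_LANE = 32.0    # gigatransfers/s, 1 bit per transfer per lane


def pcie_gen5_total_gb_s(lanes: int = PCIE_GEN5_LANES,
                         gt_per_lane: float = PCIE_GEN5_GT_PER_LANE) -> float:
    """Raw bidirectional PCIe bandwidth in GB/s, ignoring encoding/protocol overhead."""
    per_direction = lanes * gt_per_lane / 8.0  # Gbit/s -> GB/s
    return 2.0 * per_direction


def one_way_copy_seconds(payload_gb: float, total_gb_s: float) -> float:
    """A one-way copy uses a single direction, i.e. half of the total figure."""
    return payload_gb / (total_gb_s / 2.0)


if __name__ == "__main__":
    pcie = pcie_gen5_total_gb_s()
    print(f"PCIe Gen5 x16, both directions: {pcie:.0f} GB/s")
    print(f"NVLink-C2C advantage: {NVLINK_C2C_TOTAL_GB_S / pcie:.1f}x")
    for name, bw in [("NVLink-C2C", NVLINK_C2C_TOTAL_GB_S), ("PCIe Gen5 x16", pcie)]:
        print(f"1 TB one-way over {name}: {one_way_copy_seconds(1000.0, bw):.1f} s")
```

Under these assumptions the ratio comes out at about 7x: roughly 2.2 seconds versus roughly 16 seconds to move 1 TB in one direction.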

The H100’s architecture includes several innovative features:

- Fourth-Generation Tensor Cores: Chip for chip, these are up to six times faster than the A100's, counting per-SM gains, more SMs, higher clocks, and support for the new FP8 data type, which boosts deep learning performance.

- Enhanced Processing Rates: The H100 delivers three times the IEEE FP64 and FP32 processing rates of the A100, thanks to faster performance per streaming multiprocessor (SM), more SMs, and higher clocks.

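- Thread Block Cluster Feature: This addition to the CUDA programming model groups thread blocks that run concurrently on multiple SMs, letting them synchronize and exchange data with one another.

- Distributed Shared Memory and Asynchronous Execution: Distributed shared memory lets SMs in a cluster load, store, and perform atomic operations directly on one another's shared memory, while asynchronous execution features such as the new Tensor Memory Accelerator (TMA) move large blocks of data efficiently between global and shared memory.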

- Transformer Engine: A combination of software and custom Hopper Tensor Core technology, this engine significantly accelerates transformer model training and inference, offering up to 9x faster AI training and 30x faster AI inference on large language models compared to the A100.

- HBM3 Memory Subsystem: The H100 (in its SXM5 form factor) is the world's first GPU with HBM3 memory, delivering about 3 TB/s of memory bandwidth, roughly twice that of the A100.

- L2 Cache Architecture: A 50 MB L2 cache keeps larger portions of models and datasets on chip, reducing trips to HBM and improving data processing efficiency.

- Multi-Instance GPU (MIG) Technology: Second-generation MIG provides roughly three times the compute capacity and nearly twice the memory bandwidth per GPU instance compared with the A100, and adds Confidential Computing at the MIG level.

- Confidential Computing Support: This new feature provides enhanced data security and isolation in virtualized environments, establishing the H100 as the first native Confidential Computing GPU.

- Fourth-Generation NVLink: This technology raises multi-GPU bandwidth by 50% overall compared with the previous generation, and by 3x for all-reduce operations.
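
FP8 here refers to two 8-bit floating-point encodings, commonly called E4M3 (4 exponent bits, 3 mantissa bits) and E5M2 (5 exponent bits, 2 mantissa bits). The Python sketch below decodes a raw byte in either format to make the trade-off visible: E4M3 has finer steps but tops out at 448, while E5M2 reaches 57,344 with coarser steps. It follows the published FP8 conventions as we understand them (E4M3 has no infinities and reserves only the all-ones pattern for NaN; E5M2 follows IEEE 754 rules). Treat it as an illustration, not a reference implementation; the function names are our own.

```python
import math


def decode_fp8(byte: int, exp_bits: int, man_bits: int, ieee_specials: bool) -> float:
    """Decode an 8-bit float with the given exponent/mantissa split.

    ieee_specials=True  -> E5M2 style: all-ones exponent encodes inf/NaN.
    ieee_specials=False -> E4M3 style: only the S.1111.111 patterns are NaN.
    """
    if not 0 <= byte <= 0xFF or 1 + exp_bits + man_bits != 8:
        raise ValueError("expected one byte and a 1+E+M == 8 bit layout")
    sign = -1.0 if byte & 0x80 else 1.0
    exp = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man = byte & ((1 << man_bits) - 1)
    bias = (1 << (exp_bits - 1)) - 1   # 7 for E4M3, 15 for E5M2
    max_exp = (1 << exp_bits) - 1

    if ieee_specials and exp == max_exp:
        return sign * math.inf if man == 0 else math.nan
    if not ieee_specials and exp == max_exp and man == (1 << man_bits) - 1:
        return math.nan
    if exp == 0:  # subnormal: no implicit leading 1
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    return sign * (1.0 + man / (1 << man_bits)) * 2.0 ** (exp - bias)


def e4m3(byte: int) -> float:
    return decode_fp8(byte, exp_bits=4, man_bits=3, ieee_specials=False)


def e5m2(byte: int) -> float:
    return decode_fp8(byte, exp_bits=5, man_bits=2, ieee_specials=True)


if __name__ == "__main__":
    print(e4m3(0x7E), e5m2(0x7B))  # largest finite values: 448.0 57344.0
    print(e4m3(0x38), e4m3(0x39))  # 1.0 and the next value up: 1.0 1.125
```

With only three mantissa bits, the gap just above 1.0 in E4M3 is 0.125, which is why FP8 training relies on careful scaling to keep values in a useful range.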

These architectural enhancements make the NVIDIA H100 a formidable tool in the high-performance computing landscape, setting new standards in processing power, efficiency, and security.

Advancements in AI and Machine Learning

The arrival of the NVIDIA H100 Tensor Core GPU ushers in a new era for artificial intelligence (AI) and machine learning (ML), marking a significant advance over the previous-generation A100 SXM GPU. The H100 delivers three times the A100's Tensor Core throughput, including for FP32 and FP64 data types, thanks to next-generation Tensor Cores, more streaming multiprocessors (SMs), and a higher clock frequency.

A key innovation in the H100 is its support for the FP8 data type and the integration of the new Transformer Engine, which together enable six times the throughput compared to the A100. The Transformer Engine, in particular, accelerates AI calculations for transformer-based models, such as large language models, which are pivotal in today’s AI advancements.

The H100's speedup in real-world deep learning applications varies by workload. Language models generally benefit more, with roughly a 4x speedup over the A100, than vision-based models, which see around 2x. Certain large language models that require model parallelism can achieve up to a 30x increase in inference speed. These gains are particularly valuable for large-scale distributed workloads and for applications that exploit structured sparsity, in fields such as natural language processing, vision, and drug design.
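
Structured sparsity on NVIDIA Tensor Cores refers to a 2:4 pattern: in every group of four consecutive weights, at most two are nonzero, which lets the hardware skip the zeros. A common way to produce that pattern is magnitude pruning, keeping the two largest-magnitude weights in each group. The sketch below shows only that pruning step on a plain Python list; the function name `prune_2_of_4` is our own, and real workflows typically fine-tune the network after pruning to recover accuracy.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude values in every group of four.

    Assumes len(weights) is a multiple of 4 (pad the tensor otherwise).
    """
    if len(weights) % 4:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest |w| in this group; the sort is stable,
        # so on ties the earlier index is kept.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.25, 0.01]
    print(prune_2_of_4(w))  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.25, 0.0]
```

The fixed 2-of-4 ratio is what makes the pattern hardware-friendly: each group can be stored as two values plus small position indices, so the zeros need not be stored or multiplied.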

In terms of architecture, the H100 introduces several key improvements over its predecessor:

- Fourth-Generation Tensor Cores: On equivalent data types, each H100 SM delivers twice the Tensor Core throughput of an A100 SM, speeding up fundamental deep learning building blocks such as general matrix multiplications (GEMMs).

- Increased SMs and Clock Frequencies: With more SMs and higher operating frequencies, the H100 offers a substantial improvement in computational capacity.

- FP8 and Transformer Engine: The FP8 data type roughly quadruples per-SM Tensor Core rates relative to the A100, and the Transformer Engine intelligently manages when and how FP8 is used, reducing memory use and improving performance while preserving accuracy.

These advancements deliver a significant upgrade across deep learning workloads and suit the H100 to the largest and most complex models. Its ability to handle large language, vision, and life sciences models makes it a pivotal tool in the evolution of AI and ML, pushing the boundaries of what is computationally possible.
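
Part of how FP8 can preserve accuracy is scaling: FP8 has so little range that a tensor is typically multiplied by a scale factor before being cast, so its largest magnitude lands near the top of the FP8 range. The sketch below illustrates one simple per-tensor scheme that derives the scale from the tensor's current absolute maximum (amax) and rounds to E4M3. This is our own simplification for illustration, not the Transformer Engine's actual code or API, which among other things can base its scales on a history of amax values.

```python
import math
from typing import List, Tuple

E4M3_MAX = 448.0     # largest finite FP8 E4M3 value
E4M3_MIN_EXP = -6    # smallest normal exponent; below this values are subnormal
E4M3_MAN_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round to the nearest E4M3 value (ties to even), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    mag = min(abs(x), E4M3_MAX)
    _, e = math.frexp(mag)               # mag = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)       # floor(log2(mag)), clamped for subnormals
    step = 2.0 ** (exp - E4M3_MAN_BITS)  # spacing of representable values here
    q = min(round(mag / step) * step, E4M3_MAX)
    return math.copysign(q, x)


def quantize_per_tensor(values: List[float]) -> Tuple[List[float], float]:
    """Scale so the tensor's amax maps to E4M3_MAX, then round each element."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale


def dequantize(q: List[float], scale: float) -> List[float]:
    return [v / scale for v in q]


if __name__ == "__main__":
    x = [0.001, -0.02, 0.5, 3.0]
    q, s = quantize_per_tensor(x)
    for orig, back in zip(x, dequantize(q, s)):
        print(f"{orig:>7} -> {back:.5f}")
```

Without the scale factor, 0.001 would fall into E4M3's subnormal range and lose most of its precision; after scaling, each value in the normal range is off by at most 2^-4 (6.25%) relative to its original value.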

High-Performance Computing (HPC) Applications

The NVIDIA H100 has emerged as a pivotal tool in High-Performance Computing (HPC), offering transformative advancements in various scientific and data analytic applications. The H100’s capacity to handle compute-intensive tasks effectively bridges the gap between traditional HPC workloads and the increasingly intertwined fields of AI and ML.

Enhanced Performance in Scientific Computing

The H100 has had a major impact on generative AI, particularly in training and deploying large language models (LLMs) such as OpenAI's GPT models and Meta's Llama 2, as well as diffusion models such as Stability AI's Stable Diffusion. With their massive parameter counts and extensive training data, these models demand more computational performance than a single GPU, or even a single multi-GPU node, can provide.
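
A rough memory estimate shows why such models outgrow a single GPU. The sketch assumes an H100 with 80 GB of memory, 2 bytes per parameter for FP16/BF16 weights, 1 byte for FP8 weights, and a commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (FP16 weights and gradients, FP32 master weights, and two FP32 optimizer moments). It ignores activations and the GB/GiB distinction, so the results are lower bounds.

```python
import math

GPU_MEMORY_GB = 80  # assumed H100 memory capacity

BYTES_PER_PARAM = {
    "inference, FP16/BF16 weights": 2,
    "inference, FP8 weights": 1,
    "training, mixed precision + Adam": 16,  # 2 + 2 + 4 + 4 + 4 bytes
}


def min_gpus(params_billion: float, bytes_per_param: int,
             gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Lower bound on GPUs needed just to hold the model state (no activations)."""
    total_gb = params_billion * bytes_per_param  # 1e9 params * bytes = GB
    return math.ceil(total_gb / gpu_memory_gb)


if __name__ == "__main__":
    for size in (7, 13, 70):  # Llama 2 parameter counts, in billions
        for label, nbytes in BYTES_PER_PARAM.items():
            print(f"{size}B, {label}: >= {min_gpus(size, nbytes)} GPU(s)")
```

Even this optimistic bound puts training state for a 70-billion-parameter model at 14 GPUs, more than a single 8-GPU node holds, before counting activations.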

Reflecting the convergence of HPC and AI, the MLPerf HPC v3.0 benchmarks now include tests such as protein structure prediction using OpenFold, atmospheric river identification for climate studies, cosmology parameter prediction, and quantum molecular modeling. H100 results on these benchmarks highlight its ability to efficiently train AI models for complex scientific computing applications.

In recent MLPerf Training rounds, NVIDIA demonstrated the H100's performance and scalability for LLM training at very large scale. NVIDIA also raised the H100's per-accelerator performance, shortening training times and reducing costs. The improvements extend to a variety of workloads, including text-to-image training, DLRM-dcnv2, BERT-large, RetinaNet, and 3D U-Net, where H100 systems set new performance records at scale.

Applications in Data Analytics

In data analytics, particularly financial risk management, the H100 has set new standards in efficiency and performance. In a recent audit of STAC-A2, a benchmark for compute-intensive analytic workloads in finance, H100-based solutions set several performance records, including faster processing times and better energy efficiency for tasks such as Monte Carlo estimation and risk analysis.

Financial HPC has benefited greatly from the H100's capabilities. Intensive calculations such as price discovery, market risk, and counterparty risk can run on significantly fewer H100-equipped nodes. Fewer nodes translate into higher performance per node and lower operational costs, highlighting the H100's role in scaling up financial HPC with fewer resources.
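
Monte Carlo estimation is naturally parallel: each simulated price path is independent, so paths can be spread across thousands of GPU threads. The minimal Python sketch below prices a European call option under geometric Brownian motion and checks the estimate against the Black-Scholes closed form. It is far simpler than the STAC-A2 workload, which uses a more elaborate model and also computes risk sensitivities, and all market parameters are illustrative.

```python
import math
import random


def mc_european_call(s0: float, strike: float, rate: float, vol: float,
                     maturity: float, n_paths: int, seed: int = 42) -> float:
    """Monte Carlo price of a European call under geometric Brownian motion."""
    rng = random.Random(seed)
    drift = (rate - 0.5 * vol * vol) * maturity
    diffusion = vol * math.sqrt(maturity)
    payoff_sum = 0.0
    for _ in range(n_paths):  # independent paths: on a GPU, one thread per path
        s_t = s0 * math.exp(drift + diffusion * rng.gauss(0.0, 1.0))
        payoff_sum += max(s_t - strike, 0.0)
    return math.exp(-rate * maturity) * payoff_sum / n_paths


def black_scholes_call(s0: float, strike: float, rate: float, vol: float,
                       maturity: float) -> float:
    """Closed-form reference price for the same option."""
    def norm_cdf(x: float) -> float:
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    d1 = ((math.log(s0 / strike) + (rate + 0.5 * vol * vol) * maturity)
          / (vol * math.sqrt(maturity)))
    d2 = d1 - vol * math.sqrt(maturity)
    return s0 * norm_cdf(d1) - strike * math.exp(-rate * maturity) * norm_cdf(d2)


if __name__ == "__main__":
    params = dict(s0=100.0, strike=100.0, rate=0.05, vol=0.2, maturity=1.0)
    print(f"Monte Carlo:   {mc_european_call(n_paths=200_000, **params):.3f}")
    print(f"Black-Scholes: {black_scholes_call(**params):.3f}")
```

The two prices should agree closely; the Monte Carlo error shrinks only as 1/sqrt(n_paths), which is why production risk workloads simulate very large numbers of paths and benefit from massively parallel hardware.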

NVIDIA also provides a comprehensive software stack for the H100, giving developers tools and programming languages such as CUDA C++ for optimized calculations. Combined with the H100's raw performance, this shortens run times for critical applications, underscoring its versatility and power in data analytics.

The H100 is a cornerstone of the NVIDIA data center platform, which is built to accelerate over 4,000 applications in AI, HPC, and data analytics. In its PCIe form factor, the H100 offers peak performance of 51 TFLOPS in single precision and 26 TFLOPS in double precision, backed by 14,592 CUDA cores and 456 fourth-generation Tensor Cores. It roughly triples the A100's theoretical FP64 floating-point operations per second (FLOPS) and adds DPX instructions that accelerate dynamic programming algorithms.
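
The quoted peak figures follow from simple arithmetic: each floating-point unit can complete one fused multiply-add, counted as two operations, per clock cycle. The sketch below assumes the PCIe card's configuration of 114 SMs, each with 128 FP32 units, 64 FP64 units, and 4 Tensor Cores, and a boost clock of about 1.755 GHz; the clock value is our assumption, chosen because it reproduces the published numbers.

```python
SMS = 114                # assumed SM count of the H100 PCIe card
FP32_UNITS_PER_SM = 128  # the "CUDA cores" per SM
FP64_UNITS_PER_SM = 64
TENSOR_CORES_PER_SM = 4
BOOST_CLOCK_GHZ = 1.755  # assumption; reproduces the published figures
FLOPS_PER_FMA = 2        # a fused multiply-add counts as two operations


def peak_tflops(units_per_sm: int, sms: int = SMS,
                clock_ghz: float = BOOST_CLOCK_GHZ) -> float:
    """Theoretical peak = units * 2 FLOP per FMA * clock, converted to TFLOPS."""
    return sms * units_per_sm * FLOPS_PER_FMA * clock_ghz / 1000.0


if __name__ == "__main__":
    print("CUDA cores:  ", SMS * FP32_UNITS_PER_SM)     # 14592
    print("Tensor Cores:", SMS * TENSOR_CORES_PER_SM)   # 456
    print(f"FP32 peak:    {peak_tflops(FP32_UNITS_PER_SM):.1f} TFLOPS")  # 51.2
    print(f"FP64 peak:    {peak_tflops(FP64_UNITS_PER_SM):.1f} TFLOPS")  # 25.6
```

These are theoretical peaks for non-Tensor-Core math; sustained throughput on real applications is lower and is often limited by memory bandwidth rather than arithmetic.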

In summary, the NVIDIA H100 is revolutionizing the HPC landscape, providing unparalleled performance and efficiency across a wide array of scientific and data analytic applications. Its impact extends from complex scientific computations to intensive data analytics in finance, marking a new era in high-performance computing capabilities.

Security and Confidential Computing

The NVIDIA H100 Tensor Core GPU represents a significant advance in secure and confidential computing. Confidential computing protects data while it is in use, closing a gap left by traditional methods that protect data only at rest and in transit. The H100 is the first GPU to support confidential computing, a leap forward in data security for AI and HPC environments.

NVIDIA Confidential Computing Using Hardware Virtualization

Confidential computing on the H100 is achieved through hardware-based, attested Trusted Execution Environments (TEEs) anchored in an on-die hardware root of trust (RoT). When operating in confidential computing mode (CC-On), the GPU enables hardware protections for both code and data and establishes a secure environment through:

- Secure and measured GPU boot sequence.

- A Security Protocol and Data Model (SPDM) session that securely connects the GPU to the driver.

- Generation of a cryptographically signed attestation report, ensuring the integrity of the computing environment.
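
The measured-boot and attestation steps can be illustrated with a simplified model: each boot stage is hashed into a running measurement (a TPM-style hash chain), and the device reports that measurement with a signature that a verifier checks against known-good ("golden") values. The Python sketch below is hypothetical throughout. Real SPDM attestation uses certificate-backed asymmetric signatures from the GPU's hardware root of trust; here an HMAC with a shared key (`DEVICE_KEY`) stands in for that signature purely so the example runs with the standard library, and the component names are invented.

```python
import hashlib
import hmac
from typing import Dict, List

# Hypothetical stand-in for the device's signing key (real devices use asymmetric keys).
DEVICE_KEY = b"demo-device-key"


def extend(measurement: bytes, component: bytes) -> bytes:
    """Fold one boot component into the running measurement (hash chain)."""
    return hashlib.sha256(measurement + hashlib.sha256(component).digest()).digest()


def measured_boot(components: List[bytes]) -> bytes:
    m = b"\x00" * 32
    for c in components:  # order matters: each stage extends the previous result
        m = extend(m, c)
    return m


def attestation_report(components: List[bytes], nonce: bytes) -> Dict[str, bytes]:
    """Device side: report the measurement, bound to the verifier's fresh nonce."""
    m = measured_boot(components)
    sig = hmac.new(DEVICE_KEY, m + nonce, hashlib.sha256).digest()
    return {"measurement": m, "nonce": nonce, "signature": sig}


def verify(report: Dict[str, bytes], golden: List[bytes], nonce: bytes) -> bool:
    """Verifier side: check freshness, signature, and match against golden values."""
    expected_sig = hmac.new(DEVICE_KEY, report["measurement"] + nonce,
                            hashlib.sha256).digest()
    return (report["nonce"] == nonce
            and hmac.compare_digest(report["signature"], expected_sig)
            and hmac.compare_digest(report["measurement"], measured_boot(golden)))


if __name__ == "__main__":
    golden = [b"boot-rom-v1", b"firmware-v2", b"driver-config-v3"]
    print(verify(attestation_report(golden, b"nonce-1"), golden, b"nonce-1"))    # True
    tampered = [b"boot-rom-v1", b"firmware-EVIL", b"driver-config-v3"]
    print(verify(attestation_report(tampered, b"nonce-2"), golden, b"nonce-2"))  # False
```

In this model a tampered firmware stage still produces a validly signed report, but its measurement no longer matches the golden chain, and the nonce prevents an old, valid report from being replayed.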

Evolution of GPU Security

NVIDIA has continually enhanced the security features of its GPUs. Starting with AES authentication in the Volta V100 GPU, subsequent architectures like Turing and Ampere introduced encrypted firmware and fault injection countermeasures. The Hopper architecture, on which the H100 is built, adds an on-die RoT and measured/attested boot, reinforcing the security framework necessary for confidential computing. This comprehensive approach, encompassing hardware, firmware, and software, protects the integrity of both code and data on the H100 GPU.

Hardware Security for NVIDIA H100 GPUs

The H100 GPU’s confidential computing capabilities extend across multiple products, including the H100 PCIe, H100 NVL, and NVIDIA HGX H100. It supports three operational modes:

- CC-Off: Standard operation without confidential computing features.

- CC-On: Full activation of confidential computing features, including active firewalls and disabled performance counters to prevent side-channel attacks.

- CC-DevTools: Partial confidential computing mode with performance counters enabled for development purposes.

Operating NVIDIA H100 GPUs in Confidential Computing Mode

In confidential computing mode, the H100 GPU works with CPUs that support confidential VMs (CVMs), so that operators cannot access the contents of CVM or confidential container memory. The NVIDIA driver moves data to and from GPU memory through encrypted bounce buffers, and command buffers and CUDA kernels are signed. As a result, CUDA applications can run on the H100 in confidential computing mode seamlessly and securely.
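
The bounce-buffer idea can be sketched as follows: the CVM's private memory is not directly accessible to the GPU, so the driver encrypts data into a shared staging buffer, and the GPU authenticates and decrypts it on arrival (and the reverse for results). The Python model below is a toy: it uses HMAC-SHA256 in counter mode as a keystream plus an HMAC tag for integrity so that it runs with the standard library only. The function names are hypothetical; the real driver and GPU use their own hardware-accelerated authenticated encryption with session keys negotiated between them, and this code is not meant for actual security use.

```python
import hashlib
import hmac
import os
from typing import Tuple


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy CTR-mode keystream: HMAC-SHA256(key, nonce || counter) blocks."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def seal(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> Tuple[bytes, bytes, bytes]:
    """CVM side: encrypt-then-MAC into the shared bounce buffer."""
    nonce = os.urandom(12)  # must never repeat for the same key
    ks = _keystream(enc_key, nonce, len(plaintext))
    ct = bytes(p ^ k for p, k in zip(plaintext, ks))
    tag = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    return nonce, ct, tag


def unseal(enc_key: bytes, mac_key: bytes, nonce: bytes, ct: bytes, tag: bytes) -> bytes:
    """GPU side: reject tampered data, then decrypt into protected memory."""
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("bounce buffer contents were modified")
    ks = _keystream(enc_key, nonce, len(ct))
    return bytes(c ^ k for c, k in zip(ct, ks))


if __name__ == "__main__":
    enc_key, mac_key = os.urandom(32), os.urandom(32)  # assumed shared session keys
    nonce, bounce_buffer, tag = seal(enc_key, mac_key, b"model weights / inputs")
    print(unseal(enc_key, mac_key, nonce, bounce_buffer, tag))
```

In this model the host can still observe that a transfer happened and how large it was; what it cannot do is read the contents or alter them without detection.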

In summary, the NVIDIA H100 GPU’s introduction of confidential computing capabilities marks a transformative step in securing data during computation, catering to the increasing demand for robust security solutions in AI and HPC applications.

Case Studies and Real-world Applications

The NVIDIA H100 GPU is powering a wide range of industries, showcasing its versatility and robustness in various real-world applications. From enhancing research capabilities in educational institutions to revolutionizing cloud computing and driving innovation in multiple sectors, the H100 is proving to be a transformative technology.

Academic and Research Institutions

Leading universities and research institutions are harnessing the power of the H100 to advance their computational capabilities. Institutions like the Barcelona Supercomputing Center, Los Alamos National Lab, Swiss National Supercomputing Centre (CSCS), Texas Advanced Computing Center, and the University of Tsukuba are utilizing the H100 in their next-generation supercomputers. This integration of advanced GPUs into academic research is enabling these institutions to push the boundaries in fields such as climate modeling, astrophysics, and life sciences.

Cloud Computing and Service Providers

Major cloud service providers like Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are among the first to deploy H100-based instances. This deployment is set to accelerate the development and application of AI worldwide, particularly in areas requiring intense computational power, such as healthcare, autonomous vehicles, robotics, and IoT applications.

Manufacturing and Industrial Applications

In the manufacturing sector, applications like Green Physics AI are leveraging the H100 to predict the aging of factory equipment, aiming to make future plants more efficient. This tool provides insights into an object’s CO2 footprint, age, and energy consumption, enabling the creation of powerful AI models and digital twins that optimize factory and warehouse efficiency.

Robotics and AI Research

Organizations like the Boston Dynamics AI Institute are using the H100 for groundbreaking research in robotics. The focus is on developing dexterous mobile robots that can assist in various settings, including factories, warehouses, and even homes. This initiative requires advanced AI and robotics capabilities, which the H100 is uniquely positioned to provide.

Legal and Linguistic AI Applications

Startups are also harnessing the H100’s capabilities. Scissero, a legal tech company, employs a GPT-powered chatbot for drafting legal documents and conducting legal research. In language services, DeepL uses the H100 to enhance its translation services, offering AI-driven language solutions to clients worldwide.

Healthcare Advancements

In healthcare, the H100 is facilitating advancements in drug discovery and patient care. In Tokyo, the H100 is part of the Tokyo-1 supercomputer, accelerating simulations and AI for drug discovery. Hospitals and academic healthcare organizations globally are also among the first users of the H100, demonstrating its potential in improving healthcare outcomes.

Diverse University Research

Universities globally are integrating H100 systems for a variety of research projects. For instance, Johns Hopkins University’s Applied Physics Laboratory is training large language models, while the KTH Royal Institute of Technology in Sweden is enhancing its computer science programs. These use cases highlight the H100’s role in advancing educational and research endeavors across disciplines.

In conclusion, the NVIDIA H100 GPU is at the forefront of computational innovation, driving progress and efficiency across diverse sectors. Its impact is evident in the multitude of industries and disciplines it is transforming, solidifying its role as a cornerstone technology in the modern computational landscape.

Jun 06, 2024

By Julien Gauthier