Introduction to Nvidia A100: Features and Specifications

Introduction to Nvidia A100 and its Importance in Modern Computing

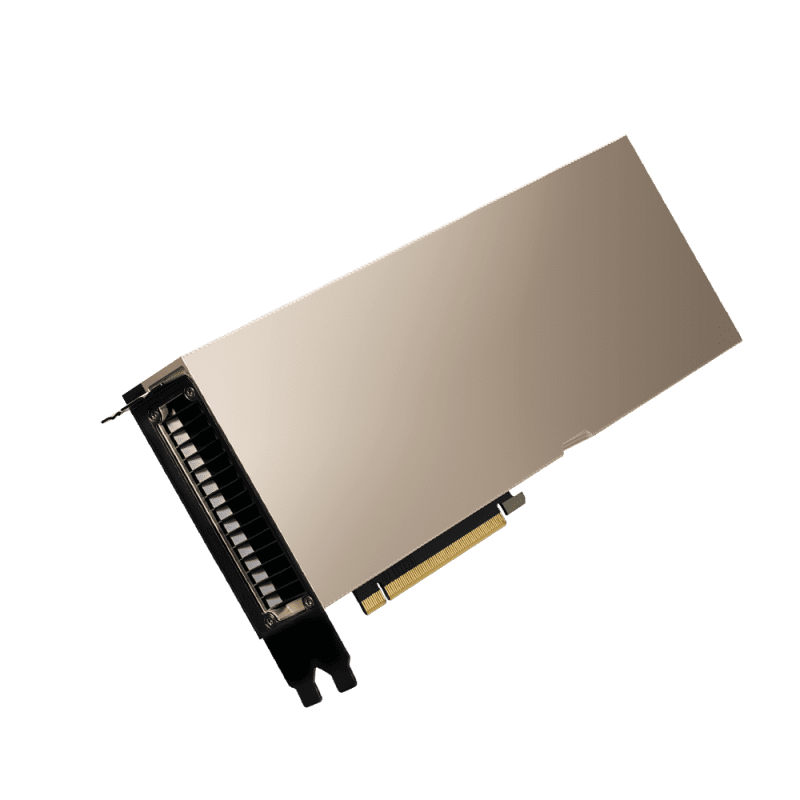

The dawn of the Nvidia A100 marks a seminal moment in modern computing, revolutionizing the landscape of data processing, AI, and high-performance computing (HPC). This groundbreaking Tensor Core GPU, a flagship product of Nvidia’s data center platform, is powered by the advanced Ampere Architecture, distinguishing itself as a pivotal innovation in GPU technology.

At its core, the A100 is engineered to accelerate workloads at every scale. It addresses the escalating demands of AI, data analytics, and HPC, with NVIDIA citing up to 20 times the performance of the preceding Volta generation on selected AI workloads. Such a leap is not just incremental; it’s transformative, reshaping what’s possible in data centers worldwide.

The Architectural Innovation: A Journey Beyond Predecessors

The A100’s architecture, rooted in the comprehensive NVIDIA Ampere Architecture, is an intricate mosaic of technological advancements. It comprises multiple GPU processing clusters, texture processing clusters, streaming multiprocessors (SMs), and HBM2 memory controllers. The A100 Tensor Core GPU embodies 108 SMs, each equipped with 64 FP32 CUDA Cores and four third-generation Tensor Cores, propelling it to achieve unprecedented processing power.

Asynchronous and Efficient: A New Paradigm in GPU Processing

The introduction of asynchronous copy and barrier technologies in the A100 marks a notable departure from traditional GPU processing methods. These features enable more efficient data transfer and synchronization between computing tasks, reducing power consumption and enhancing overall performance. This efficient utilization of resources is critical in large multi-GPU clusters and sophisticated computing environments.

Harnessing the Power of A100: Practical Applications and Use Cases

The practical applications of the A100 are as vast as they are impactful. One such domain is 3D object reconstruction in deep learning systems, where the A100’s formidable computing power can be leveraged to infer 3D shapes from 2D images. This capability is pivotal in fields ranging from criminal forensics to architectural restoration and medical imaging.

A Beacon for Future Computing: The A100’s Role in AI and HPC

The A100 is not just a GPU; it’s a harbinger of the future of AI and HPC. It’s designed to meet the challenges of increasingly complex AI models, such as those used in conversational AI, where massive compute power and scalability are non-negotiable. The A100’s Tensor Cores, coupled with technologies like NVLink and NVSwitch, enable scaling to thousands of GPUs, thereby achieving remarkable feats in AI training and inference.

In conclusion, the Nvidia A100 is more than just a technological marvel; it’s a catalyst for a new era in computing. Its profound impact on AI, HPC, and data analytics heralds a future where the boundaries of computational capabilities are continually expanded, driving innovations that were once deemed impossible.

Exploring the Ampere Architecture: The Heart of A100

The NVIDIA A100, powered by the revolutionary Ampere Architecture, represents a significant leap in GPU technology, offering a blend of efficiency, performance, and innovative features that redefine modern computing capabilities.

The Foundation: Ampere Architecture and Its Components

The Ampere Architecture is a testament to NVIDIA’s engineering prowess, incorporating several key components that enhance the performance and efficiency of the A100. The architecture is composed of multiple GPU processing clusters (GPCs), texture processing clusters (TPCs), and streaming multiprocessors (SMs), along with HBM2 memory controllers. The full implementation of the GA100 GPU, which is at the core of the A100, includes 8 GPCs, each with 8 TPCs, and a total of 128 SMs per full GPU. These architectural components are integral to the A100’s ability to handle complex computational tasks with unprecedented efficiency.
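The hierarchy described above can be checked with a little arithmetic. The following is a minimal sketch, not an official tool: the figure of two SMs per TPC is not stated in the text and is derived here from 128 SMs / (8 GPCs × 8 TPCs), and the function names are hypothetical.

```python
# Full GA100 die layout as described in the text.
GPCS_FULL = 8
TPCS_PER_GPC = 8
# Assumption: derived from 128 SMs / (8 GPCs * 8 TPCs); not stated in the article.
SMS_PER_TPC = 2

# Per-SM resources quoted for the A100.
FP32_CORES_PER_SM = 64
TENSOR_CORES_PER_SM = 4

# The shipping A100 enables 108 of the SMs on the full die.
SMS_ENABLED_A100 = 108


def full_die_sms() -> int:
    """Number of SMs on a fully enabled GA100 die."""
    return GPCS_FULL * TPCS_PER_GPC * SMS_PER_TPC


def unit_counts(sms: int) -> dict:
    """FP32 CUDA core and Tensor Core totals for a given number of enabled SMs."""
    return {
        "sms": sms,
        "fp32_cores": sms * FP32_CORES_PER_SM,
        "tensor_cores": sms * TENSOR_CORES_PER_SM,
    }


if __name__ == "__main__":
    print("Full GA100:", unit_counts(full_die_sms()))
    print("A100:      ", unit_counts(SMS_ENABLED_A100))
```

The A100 figures that fall out of this (6912 FP32 cores and 432 Tensor Cores) match the specification list later in the article.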

Asynchronous Operations and Error Management

One of the standout features of the Ampere Architecture is its enhanced capability for asynchronous operations. The A100 introduces a new asynchronous copy instruction that allows data to be loaded directly from global memory into SM shared memory, bypassing intermediate stages and reducing power consumption. This feature, along with asynchronous barriers and task graph acceleration, significantly improves the efficiency of data handling and task execution on the GPU.

Furthermore, the A100 includes new technology to improve error and fault detection, isolation, and containment. This enhancement is particularly beneficial in multi-GPU clusters and multi-tenant environments, ensuring maximum GPU uptime and availability.

SM Architecture and Tensor Cores

The Streaming Multiprocessor (SM) architecture of the A100 is a significant evolution over its predecessors, incorporating third-generation Tensor Cores that deliver enhanced performance for a wide range of data types. These Tensor Cores support FP16, BF16, TF32, FP64, INT8, INT4, and Binary, and a new structured sparsity feature can double Tensor Core throughput for models pruned to the supported sparsity pattern. The A100’s Tensor Cores provide a substantial increase in computation horsepower, making it a powerhouse for deep learning and HPC applications.

Impact on Professional Visualization and Development

The Ampere Architecture’s influence extends beyond raw computational power. It plays a pivotal role in industries like architecture, engineering, construction, game development, and media & entertainment. The architecture supports a range of professional visualization solutions, including augmented and virtual reality, design collaboration, content creation, and digital twins. This versatility makes the Ampere Architecture an essential component for a wide array of professional applications, driving innovation and efficiency in various sectors.

Microbenchmarking and Instruction-level Analysis

Recent studies have delved into the microarchitecture characteristics of the Ampere Architecture, providing insights into its intricate design and operational nuances. This in-depth analysis, including microbenchmarking and instruction-level scrutiny, has revealed the architecture’s efficiencies and its potential impact on future GPU developments. The research in this area is continually evolving, shedding light on the intricate workings of the Ampere Architecture and its capabilities.

Developer Tools and Optimization

To harness the full potential of the Ampere Architecture, NVIDIA offers tools like Nsight Compute and Nsight Systems. These tools provide developers with detailed analysis and visualization capabilities to optimize performance and utilize the architecture’s features effectively. Features like roofline analysis in Nsight Compute and CUDA Graph node correlation in Nsight Systems enable developers to identify and address performance bottlenecks, making the most of the Ampere Architecture’s capabilities.

In summary, the NVIDIA Ampere Architecture, as embodied in the A100 GPU, represents a significant advancement in GPU technology. Its components, asynchronous operation capabilities, enhanced SM architecture, and support for professional applications, combined with developer tools for optimization, make it a cornerstone of modern high-performance computing and AI applications.

Performance Metrics: How A100 Transforms AI and HPC

The NVIDIA A100 Tensor Core GPU, powered by the Ampere Architecture, has significantly impacted the fields of Artificial Intelligence (AI) and High-Performance Computing (HPC). Its capabilities have transformed computational benchmarks, providing groundbreaking acceleration and efficiency.

Unprecedented Acceleration in AI and HPC

The A100 delivers an exceptional performance boost, which NVIDIA puts at up to 20 times that of the previous Volta generation. This leap in performance is evident in AI training and deep learning inference. For instance, the A100 has shown remarkable efficiency in training complex AI models, such as those used in conversational AI, where NVIDIA trained BERT at scale in under a minute using 2,048 A100 GPUs. This achievement set a world record for time to solution.

Benchmarks and Comparative Performance

In benchmark testing, the A100 has demonstrated its superiority over previous generations and other GPUs. For example, in HPL (High-Performance Linpack) benchmarks, a configuration with four A100 GPUs outperformed the best dual CPU system by a factor of 14. This level of performance is particularly notable in the HPL-AI benchmark, which tests mixed-precision floating-point calculations typical in ML/AI model training. Here, configurations with two and four A100 GPUs showcased outstanding performance, achieving over 118 teraflops (TFLOPS) on a single-node server.

Impact on Molecular Dynamics and Physics Simulations

The A100’s capabilities extend to various HPC applications, including molecular dynamics and physics simulations. In tests involving LAMMPS (a molecular dynamics package), NAMD (for simulation of large molecular systems), and MILC (for Lattice Quantum Chromodynamics), the A100 demonstrated significant improvements in processing times and throughput. These improvements are not only a testament to the A100’s raw computational power but also its ability to handle complex, large-scale simulations with greater efficiency.

Multi-Instance GPU (MIG) and NVLink Technology

The A100’s Multi-Instance GPU (MIG) feature allows it to be partitioned into up to seven isolated instances, each with its own resources. This versatility enables optimal utilization of the GPU for various applications and sizes. Additionally, the A100’s next-generation NVLink delivers twice the throughput of the previous generation, facilitating rapid communication between GPUs and enhancing the overall performance of multi-GPU configurations.
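To make the "twice the throughput" NVLink claim concrete, here is a minimal sketch of the aggregate bandwidth arithmetic. The link counts (12 on the A100, 6 on the V100) and the 25 GB/s per direction per link figure come from NVIDIA's published specifications rather than from this article, so treat them as assumptions; the function name is hypothetical.

```python
# Assumption (public spec, not stated in the article): each NVLink link carries
# 25 GB/s in each direction on both the V100 and the A100.
GB_S_PER_LINK_PER_DIRECTION = 25

# Assumption (public spec): number of NVLink links per GPU.
LINKS_V100 = 6
LINKS_A100 = 12


def nvlink_total_gb_s(links: int) -> int:
    """Aggregate bidirectional NVLink bandwidth of one GPU, in GB/s."""
    return links * GB_S_PER_LINK_PER_DIRECTION * 2


if __name__ == "__main__":
    v100 = nvlink_total_gb_s(LINKS_V100)
    a100 = nvlink_total_gb_s(LINKS_A100)
    print(f"V100: {v100} GB/s, A100: {a100} GB/s, ratio: {a100 / v100:.1f}x")
```

Under these assumptions, the doubling comes from the number of links per GPU rather than from a faster individual link.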

Enhanced AI Training and Inference

For AI training, the A100, with its Tensor Cores and TensorFloat-32 (TF32) technology, offers up to 20X higher performance than Volta GPUs. This performance boost is further enhanced by automatic mixed precision and FP16, making the A100 a robust solution for training large and complex AI models. In terms of AI inference, the A100 introduces features that optimize a range of precisions from FP32 to INT4, significantly accelerating inference workloads.
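To give a feel for what TF32 trades away, the sketch below emulates its precision in plain Python. It assumes the commonly documented TF32 layout (1 sign bit, 8 exponent bits, 10 mantissa bits) and simply truncates an FP32 value to that layout. Real Tensor Cores apply their own rounding and accumulate in FP32, so this is an illustration of the format only, not of hardware behavior; `to_tf32` is a hypothetical helper name.

```python
import struct


def to_tf32(x: float) -> float:
    """Truncate a value to TF32 precision (illustrative, truncation only).

    FP32 has 23 mantissa bits; TF32 keeps the top 10, so the low 13 bits
    are cleared. Sign and the 8-bit exponent are left untouched, which is
    why TF32 covers the same numeric range as FP32.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # FP32 bit pattern
    bits &= 0xFFFFE000                                   # drop low 13 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    for value in (1.0, 1.0 + 2**-10, 1.0 + 2**-11, 3.14159265):
        print(f"{value!r:>22} -> {to_tf32(value)!r}")
```

The smallest step above 1.0 that survives is 2**-10, the same mantissa precision as FP16, while the exponent range stays that of FP32. This is the reason TF32 can stand in for FP32 in many training workloads without code changes.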

High-Performance Data Analytics and Server Platform

The A100 is not only a powerful tool for AI and HPC but also for high-performance data analytics. It provides the compute power, memory, and scalability necessary to analyze and visualize massive datasets efficiently. In a big data analytics benchmark, the A100 80GB delivered insights with 83X higher throughput than CPUs. Furthermore, the NVIDIA HGX A100 platform, incorporating the A100 GPUs, offers a powerful server solution for AI and HPC applications, enabling more efficient and flexible deployments in data centers.

In conclusion, the NVIDIA A100 GPU has set new standards in AI and HPC, offering unprecedented levels of performance and efficiency. Its influence spans a broad range of applications, from AI model training and inference to complex scientific simulations and data analytics, solidifying its position as a pivotal tool in modern computational tasks.

Advanced Features and Specifications of the A100

The NVIDIA A100 Tensor Core GPU, leveraging the Ampere Architecture, is a powerhouse in the realm of GPUs, designed to deliver exceptional performance for AI, data analytics, and high-performance computing. Let’s dive into its technical specifications and features to understand what sets the A100 apart.

Core Specifications

- GPU Architecture: The A100 is built on the NVIDIA Ampere GPU architecture, which is renowned for its vast array of capabilities, including more than 54 billion transistors on a 7-nanometer process.

- Clock Speeds: It features a base clock of 765 MHz and a boost clock of 1410 MHz. The memory runs at an effective data rate of about 2.4 Gbps per pin.

- Memory Specifications: The 40 GB version of the A100 uses HBM2 memory with a bandwidth of 1,555 GB/s (the later 80 GB version moves to HBM2e). This high-bandwidth memory is crucial for handling large datasets and complex computational tasks efficiently.
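The 1,555 GB/s figure can be reproduced from the memory interface parameters. This is a rough sketch under two assumptions that are not stated in the article: a 5120-bit memory bus and a 1215 MHz memory clock with double data rate (which gives the roughly 2.4 Gbps per-pin rate quoted above). The function name is hypothetical.

```python
# Assumptions taken from public A100 40 GB specifications, not from the article:
BUS_WIDTH_BITS = 5120      # total HBM2 interface width
MEMORY_CLOCK_HZ = 1215e6   # memory clock
TRANSFERS_PER_CLOCK = 2    # double data rate


def memory_bandwidth_gb_s(bus_width_bits: int, clock_hz: float,
                          transfers_per_clock: int = TRANSFERS_PER_CLOCK) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * clock_hz * transfers_per_clock / 1e9


if __name__ == "__main__":
    per_pin_gbps = MEMORY_CLOCK_HZ * TRANSFERS_PER_CLOCK / 1e9
    bandwidth = memory_bandwidth_gb_s(BUS_WIDTH_BITS, MEMORY_CLOCK_HZ)
    print(f"Per-pin data rate: {per_pin_gbps:.2f} Gbps")
    print(f"Peak bandwidth:    {bandwidth:.1f} GB/s")
```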

Performance and Capabilities

- Shading Units, TMUs, and ROPs: It boasts 6912 shading units, 432 TMUs, and 160 ROPs, along with 108 SMs (Streaming Multiprocessors), which contribute to its immense processing power.

- Tensor Cores: The A100 features 432 third-generation Tensor Cores that deliver up to 312 teraFLOPS of dense FP16 deep learning performance. NVIDIA cites up to a 20X improvement in Tensor FLOPS and Tensor TOPS for deep learning training and inference compared to NVIDIA Volta GPUs, a figure that relies on the new TF32 format and sparsity support.

- NVLink and NVSwitch: The A100’s next-generation NVLink technology offers 2X higher throughput compared to the previous generation. When combined with NVSwitch, it allows up to 16 A100 GPUs to be interconnected, maximizing application performance on a single server.
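The headline throughput numbers follow from the unit counts and the boost clock listed above. The sketch below is a back-of-the-envelope estimate, not a benchmark. It assumes that one fused multiply-add counts as two floating-point operations and that each Tensor Core performs 256 FP16 FMAs per clock; the latter comes from NVIDIA's architecture documentation and is not stated in this article. All names are hypothetical.

```python
BOOST_CLOCK_HZ = 1410e6      # boost clock from the specification list
FP32_CORES = 6912            # "shading units" from the specification list
TENSOR_CORES = 432           # third-generation Tensor Cores

FLOPS_PER_FMA = 2            # one multiply plus one add
# Assumption (NVIDIA architecture documentation, not this article):
FP16_FMAS_PER_TENSOR_CORE_PER_CLOCK = 256


def peak_fp32_tflops() -> float:
    """Peak FP32 throughput of the CUDA cores, in TFLOPS."""
    return FP32_CORES * FLOPS_PER_FMA * BOOST_CLOCK_HZ / 1e12


def peak_fp16_tensor_tflops(sparse: bool = False) -> float:
    """Peak dense (or 2x sparse) FP16 Tensor Core throughput, in TFLOPS."""
    dense = (TENSOR_CORES * FP16_FMAS_PER_TENSOR_CORE_PER_CLOCK
             * FLOPS_PER_FMA * BOOST_CLOCK_HZ / 1e12)
    return dense * 2 if sparse else dense


if __name__ == "__main__":
    print(f"FP32 CUDA cores:      {peak_fp32_tflops():.1f} TFLOPS")
    print(f"FP16 Tensor (dense):  {peak_fp16_tensor_tflops():.0f} TFLOPS")
    print(f"FP16 Tensor (sparse): {peak_fp16_tensor_tflops(sparse=True):.0f} TFLOPS")
```

These are theoretical peaks at boost clock; sustained throughput in real workloads is lower and depends on memory traffic and kernel efficiency.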

Multi-Instance GPU (MIG)

- A standout feature of the A100 is its Multi-Instance GPU capability, which allows a single A100 GPU to be partitioned into as many as seven separate, fully isolated GPU instances. This feature is pivotal in optimizing GPU utilization and expanding access to various applications and users.
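As an illustration of how MIG partitioning is budgeted, the sketch below models a 40 GB A100 as 7 compute slices and 8 memory slices and checks whether a requested mix of instances fits. The profile names and slice sizes follow NVIDIA's MIG documentation and are assumptions relative to this article. The check is deliberately simplified: real MIG also enforces placement rules about where on the GPU each instance may start, which this capacity check ignores.

```python
from typing import Iterable

# Assumption (NVIDIA MIG documentation): profile -> (compute slices, memory slices)
# for the 40 GB A100. Each memory slice corresponds to roughly 5 GB.
PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def fits(requested: Iterable[str]) -> bool:
    """Simplified capacity check: do the requested instances fit on one GPU?

    Only the total compute and memory slice budgets are checked; hardware
    placement constraints are not modelled.
    """
    requested = list(requested)
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


if __name__ == "__main__":
    print(fits(["1g.5gb"] * 7))           # seven small instances
    print(fits(["4g.20gb", "3g.20gb"]))   # one larger and one medium instance
    print(fits(["4g.20gb", "4g.20gb"]))   # exceeds the compute budget
```

The seven-instance limit mentioned above corresponds to the seven compute slices in this model.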

Structural Sparsity

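- The A100 introduces structural sparsity, an efficiency technique that exploits the fact that many weights in a trained neural network can be set to zero with little loss of accuracy. When a model is pruned to the supported 2:4 pattern (two of every four consecutive weights are zero), the Tensor Cores skip the zero values and can deliver up to twice the throughput on those matrix operations.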
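The pruning pattern that the A100's sparse Tensor Cores expect is usually described as 2:4 structured sparsity: in every group of four consecutive weights, at most two are non-zero. The sketch below shows one simple way to produce that pattern, keeping the two largest-magnitude weights per group. It is an illustration in plain Python with a hypothetical function name, not NVIDIA's pruning tooling, and in practice a model is typically fine-tuned after pruning to recover accuracy.

```python
from typing import List, Sequence


def prune_2_of_4(weights: Sequence[float]) -> List[float]:
    """Zero out the two smallest-magnitude weights in each group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")

    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    row = [0.1, -0.9, 0.4, 0.05, 1.0, 2.0, -3.0, 0.5]
    print(prune_2_of_4(row))
```

Because exactly half of the values in each group are zero, the hardware can store the row in compressed form and skip the zeroed multiplications, which is where the up-to-2x figure comes from.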

High-Bandwidth Memory (HBM2E)

- With up to 80 GB of HBM2e, the A100 delivers the world’s fastest GPU memory bandwidth of over 2TB/s. This feature, combined with a DRAM utilization efficiency of 95%, ensures that the A100 can handle the most demanding data-intensive tasks.

Applications and Industry Impact

The A100’s blend of high performance, memory capabilities, and advanced features like MIG and structural sparsity make it an ideal choice for a variety of demanding applications. From deep learning training and inference to large-scale scientific simulations and data analytics, the A100 is designed to accelerate the most complex computational tasks and provide groundbreaking results in various fields.

In summary, the NVIDIA A100 stands as a technological marvel in the GPU landscape, offering unprecedented performance and flexibility. Its advanced features cater to a wide range of applications, making it a crucial component in pushing the boundaries of AI, HPC, and data analytics.

Practical Applications: Where A100 Shines

The NVIDIA A100 GPU, with its robust technical capabilities, has found significant applications across various sectors, revolutionizing the way computational tasks are approached and executed.

Enhancing AI Training and Computer Vision

The A100 has made notable strides in the field of AI training, particularly in deep learning applications. Its support for the TF32 data format has dramatically accelerated the training of deep learning models. For instance, the A100’s TF32 mode has been shown to offer up to 10x the throughput of single-precision floating-point math (FP32) on older Volta GPUs. This efficiency boost is evident in applications like semantic segmentation and Bi3D networks, where the A100 has achieved speedups of 1.6X and 1.4X, respectively, without any code changes from developers.

High-Performance Computing (HPC) Applications

The A100 GPU’s enhanced memory and computational capabilities have significantly benefited HPC applications. For example, in recommender system models like DLRM, which involve massive data tables representing billions of users and products, the A100 80GB delivers up to a 3x speedup, allowing businesses to retrain these models quickly for highly accurate recommendations. Additionally, for scientific applications such as weather forecasting and quantum chemistry simulations, the A100 80GB has been shown to achieve nearly 2x throughput gains in applications like Quantum Espresso.

Advancements in Data Analytics

In the realm of big data analytics, particularly in the terabyte-size range, the A100 80GB has demonstrated its prowess by boosting performance up to 2x compared with the A100 40GB. This improvement is crucial for businesses that require rapid insights from large datasets, allowing key decisions to be made in real time as data is updated dynamically.

Impact on Cloud Computing and Edge AI

The A100’s Multi-Instance GPU (MIG) capability has made it a versatile tool in cloud computing environments. This feature enables the partitioning of a single A100 GPU into as many as seven independent GPU instances, each with its own memory, cache, and compute cores. This allows for secure hardware isolation and maximizes GPU utilization for various smaller workloads, providing a unified platform for cloud service providers to dynamically adjust to shifting workload demands.

Revolutionizing Input Pipeline in Computer Vision

The A100 GPU has introduced several features for speeding up the computer vision input pipeline, such as NVJPG for hardware-based JPEG decoding and NVDEC for video decoding. These features address the input bottleneck in deep learning training and inference for images and videos, enabling accelerated data preprocessing tasks to run in parallel with network training tasks on the GPU. Such advancements have significantly boosted the performance of computer vision applications.

In summary, the NVIDIA A100 GPU, through its advanced features and robust performance, is driving significant advancements across AI training, HPC, data analytics, cloud computing, and computer vision. Its ability to handle massive datasets, accelerate computational tasks, and provide versatile solutions for a range of applications, marks it as a cornerstone technology in the modern computational landscape.

Comparative Analysis: A100 Against Other GPUs

The world of GPUs is constantly evolving, with each new model bringing more advanced capabilities. A prime example is the comparison between NVIDIA’s A100 and its successors, the H100 and H200, as well as its predecessor, the V100. Each GPU serves specific purposes, and understanding their differences is crucial for professionals in fields like AI, deep learning, and high-performance computing.

A100 vs. H100 vs. H200

- Memory: The A100 tops out at 80 GB of HBM2e, while the H100 pairs the same 80 GB capacity with faster memory (HBM3 on the SXM version). The H200 steps up again with 141 GB of HBM3e, a leap in both capacity and bandwidth.

- Inference Performance: The H100 shows a substantial performance lead over the A100, especially with optimizations like TensorRT-LLM. For example, the H100 is around 4 times faster than the A100 in inference tasks for models like GPT-J 6B and Llama 2 70B.

- Power Efficiency: The A100 maintains a balance between performance and power consumption, which is crucial for total cost of ownership (TCO). The H200 is expected to further refine this balance, enhancing AI computing efficiency.

A100 vs. V100

- Language Model Training: The A100 has been observed to be approximately 1.95x to 2.5x faster than the V100 in language model training using FP16 Tensor Cores. This indicates the A100’s superior efficiency in handling complex deep learning tasks.

- Technical Specifications: The A100 marks a significant improvement over the V100 in terms of Tensor Core performance and memory bandwidth. It is built on the TSMC 7nm process node, a step down from the 12nm process used for the V100, which allows far more transistors to be devoted to deep learning performance.

Usage Scenarios for A100 and H100

- A100: Ideal for deep learning and AI training, AI inference, HPC environments, data analysis, and cloud computing. It excels in handling large neural networks and complex scientific simulations.

- H100: Stands out in LLM and Gen-AI research, numerical simulations, molecular dynamics, and HPC clusters. It is optimized for applications involving climate modeling, fluid dynamics, and finite element analysis.

Performance and Price

- Performance: The A100 shines in deep learning, offering high memory bandwidth and large model support. The H100 matches the 80 GB memory capacity of the A100 and adds higher memory bandwidth and faster Tensor Cores, which makes it the stronger choice for the largest models.

- Power Efficiency: The A100 has a lower power rating than the H100 (up to 400 W versus up to 700 W for the SXM versions), which suits power-constrained deployments. Lower power draw does not by itself make the A100 more energy-efficient, since the H100 typically completes more work per watt on workloads that use its newer features.

- Price and Availability: The H100, as the newer high-end option, commands a premium price. The A100 is usually the more budget-friendly choice for users who do not require the top-tier features of the H100.

Conclusion

The selection between A100, H100, and H200 depends largely on specific needs and budget constraints. The A100 remains a strong contender in AI and deep learning tasks, while the H100 and H200 bring advancements in efficiency and performance, particularly in large model training and inference. The choice ultimately hinges on the specific requirements of the task at hand, balancing factors like performance, power efficiency, and cost.
