Nvidia L40S price

Introduction

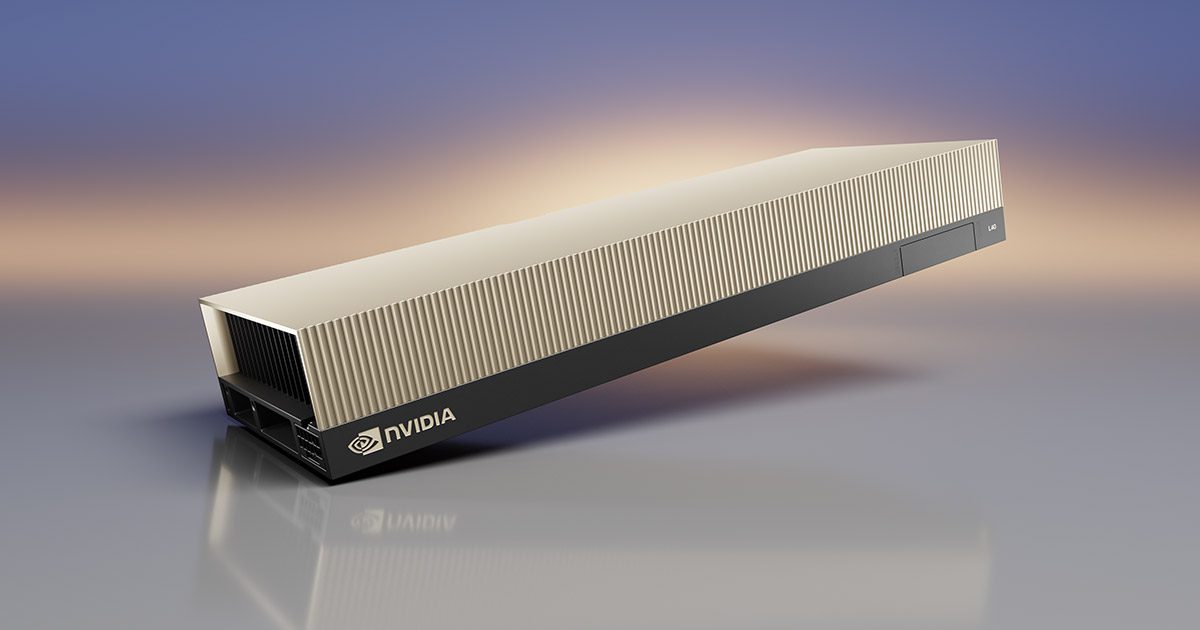

The Nvidia L40S is a data center GPU built on NVIDIA’s Ada Lovelace architecture. It sets itself apart from its predecessors and competitors through several key advancements, and these go beyond incremental improvements: they represent a substantial shift in GPU design and capabilities.

Central to the L40S’s design is the Ada Lovelace architecture, which brings a more efficient instruction set, optimized data paths, and an enhanced memory hierarchy. The architecture is engineered to maximize throughput while minimizing latency, a critical factor in high-performance computing environments, and these advances give the L40S high efficiency and speed.

The L40S is not just a powerhouse in terms of raw performance; it’s a versatile tool designed to cater to a wide range of applications. It adeptly combines powerful AI compute capabilities with best-in-class graphics and media acceleration. This versatility makes it an ideal choice for powering next-generation data center workloads. From generative AI and large language model (LLM) inference and training to 3D graphics, rendering, and video processing, the L40S is equipped to handle diverse and demanding applications.

One of the standout features of the L40S is its performance leap over its predecessors. In robotic simulation with Isaac Sim, for example, the L40S runs twice as fast as the A40 GPU. This generational gain is not confined to a narrow set of tasks; it extends across a broad range of AI and computing workloads. The L40S is particularly effective for generative AI: it can fine-tune large language models within hours and run real-time inference for applications ranging from text-to-image generation to chatbots.

Built for NVIDIA OVX servers, the L40S is a robust solution for data centers. It handles a wide range of complex applications, including AI training and inference, intricate 3D design, visualization, and video processing, which makes it a strong choice for high-end data center operations. In that role it serves less as a single component than as a complete platform for advanced computing needs.

Overview of Nvidia L40S GPU

Technical Specifications

The Nvidia L40S GPU is built on the Ada Lovelace architecture, which underpins its advanced capabilities. It carries 48 GB of GDDR6 memory with ECC, combining high capacity with error correction for reliability, and offers a memory bandwidth of 864 GB/s for rapid data transfer and processing. The card connects over a PCIe Gen4 x16 interface with 64 GB/s of bidirectional bandwidth, which matters for high-speed data exchange in compute-intensive environments.
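As a rough illustration of where the 64 GB/s PCIe figure comes from, the sketch below works through the link arithmetic. It assumes standard PCIe Gen4 signalling (16 GT/s per lane with 128b/130b encoding), which the article does not state, ignores packet and protocol overhead, and treats 1 GB as 10^9 bytes; the helper name `pcie_gbytes_per_s` is purely illustrative.

```python
# Sketch: where the "64 GB/s bidirectional" PCIe Gen4 x16 figure comes from.
# Assumptions (not from the article): PCIe Gen4 signals at 16 GT/s per lane
# and uses 128b/130b line encoding. Packet/protocol overhead is ignored.
# 1 GB is treated as 1e9 bytes throughout.

GEN4_GT_PER_S = 16.0      # giga-transfers per second, per lane, per direction
LANES = 16                # x16 link
ENCODING = 128 / 130      # 128b/130b encoding efficiency


def pcie_gbytes_per_s(gt_per_s: float, lanes: int, encoding: float = 1.0) -> float:
    """Per-direction bandwidth in GB/s (one transfer carries one bit per lane)."""
    return gt_per_s * lanes * encoding / 8


raw_one_way = pcie_gbytes_per_s(GEN4_GT_PER_S, LANES)                   # 32.0 GB/s
effective_one_way = pcie_gbytes_per_s(GEN4_GT_PER_S, LANES, ENCODING)  # ~31.5 GB/s

print(f"raw bidirectional:       {2 * raw_one_way:.1f} GB/s")
print(f"effective bidirectional: {2 * effective_one_way:.1f} GB/s")

# Rough lower bound on the time to copy 48 GB from host to GPU memory
memory_gb = 48
print(f"48 GB host-to-device: >= {memory_gb / effective_one_way:.2f} s")
```

The headline 64 GB/s is the raw signalling rate summed over both directions; after encoding, each direction carries a little over 31.5 GB/s, so filling the full 48 GB from the host takes at least about 1.5 seconds.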

The L40S’s core structure comprises 18,176 shading units (CUDA cores), 568 texture mapping units (TMUs), and 192 render output units (ROPs), which together drive its rendering capabilities. It also includes 568 Tensor Cores, designed to accelerate machine learning workloads, and 142 ray-tracing acceleration cores (RT Cores) for realistic ray-traced visual effects.
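To put the unit counts in perspective, the sketch below converts them into theoretical peak fill rates using the 2520 MHz boost clock listed under Performance Capabilities. It follows the common convention (an assumption, not a claim from the article) that each ROP writes one pixel and each TMU samples one texel per clock; sustained throughput in real workloads is lower.

```python
# Sketch: theoretical peak fill rates from the L40S unit counts.
# Assumed convention: each ROP writes one pixel and each TMU samples one
# texel per clock, at the 2520 MHz boost clock. Real throughput is lower.

BOOST_CLOCK_GHZ = 2.52
ROPS = 192
TMUS = 568

pixel_rate_gpix = ROPS * BOOST_CLOCK_GHZ   # gigapixels per second
texel_rate_gtex = TMUS * BOOST_CLOCK_GHZ   # gigatexels per second

print(f"pixel fill rate:   {pixel_rate_gpix:.1f} GPixel/s")
print(f"texture fill rate: {texel_rate_gtex:.1f} GTexel/s")
```

These are upper bounds that describe the raster hardware’s capacity, not a measured rendering benchmark.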

Performance Capabilities

The L40S has a base clock of 1110 MHz and a boost clock of up to 2520 MHz. Its memory runs at 2250 MHz (18 Gbps effective), supporting efficient handling of large datasets and complex computations. The dual-slot card draws power through a single 16-pin connector, with a maximum power consumption of 350 W. For display outputs, the L40S is equipped with 1x HDMI 2.1 and 3x DisplayPort 1.4a, offering flexible connectivity for various display setups.
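The 864 GB/s bandwidth quoted earlier follows from the 2250 MHz memory clock once two details the article does not state are filled in. The sketch below assumes the usual GDDR6 convention of 8 bits per pin per memory-clock cycle (which turns 2250 MHz into the quoted 18 Gbps) and a 384-bit memory bus for the L40S.

```python
# Sketch: deriving the L40S memory bandwidth from its memory clock.
# Assumptions not stated in the article: GDDR6 moves 8 bits per pin per
# memory-clock cycle (2250 MHz -> 18 Gbps per pin) and the bus is 384 bits wide.

MEMORY_CLOCK_MHZ = 2250
TRANSFERS_PER_CLOCK = 8       # GDDR6 effective data-rate multiplier (assumed)
BUS_WIDTH_BITS = 384          # memory interface width (assumed)

data_rate_gbps = MEMORY_CLOCK_MHZ * TRANSFERS_PER_CLOCK / 1000   # per pin, Gbps
bandwidth_gb_s = data_rate_gbps * BUS_WIDTH_BITS / 8             # bits -> bytes

print(f"per-pin data rate: {data_rate_gbps:.0f} Gbps")
print(f"memory bandwidth:  {bandwidth_gb_s:.0f} GB/s")
```

Under these assumptions the result matches the 864 GB/s figure in the specifications exactly.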

The L40S’s third-generation RT Cores and 48 GB of GDDR6 memory deliver up to twice the real-time ray-tracing performance of the previous generation. This gain accelerates high-fidelity creative workflows, including real-time, full-fidelity interactive rendering, 3D design, video streaming, and virtual production.

At its core, the Ada Lovelace architecture integrates new Streaming Multiprocessors, fourth-generation Tensor Cores, and third-generation RT Cores. This lets the L40S reach 91.6 teraFLOPS of FP32 performance, which drives its capabilities in generative AI and LLM training and inference. Features such as the Transformer Engine with FP8 support, nearly 1.5 petaFLOPS of Tensor performance (FP8 with sparsity), and a large L2 cache further boost its performance across a wide range of applications.
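The 91.6 teraFLOPS FP32 figure can be reproduced from the shading-unit count and the boost clock. The sketch assumes the standard peak-rate convention: every CUDA core retires one fused multiply-add per clock, counted as two floating-point operations.

```python
# Sketch: where the 91.6 TFLOPS FP32 figure comes from.
# Assumes every CUDA core (shading unit) retires one fused multiply-add,
# counted as 2 floating-point operations, per clock at the boost clock.

CUDA_CORES = 18_176
BOOST_CLOCK_HZ = 2.52e9
FLOPS_PER_CORE_PER_CLOCK = 2   # one FMA = one multiply + one add

peak_fp32_tflops = CUDA_CORES * FLOPS_PER_CORE_PER_CLOCK * BOOST_CLOCK_HZ / 1e12
print(f"peak FP32: {peak_fp32_tflops:.1f} TFLOPS")
```

Because this is a theoretical peak at the maximum boost clock, sustained FP32 throughput in real applications will generally be lower.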

Pricing with Arkane Cloud

Arkane Cloud will offer the L40S at $2.50 per hour.
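For budgeting, the hourly rate scales linearly with GPU count and runtime. The sketch below assumes simple per-GPU-hour billing at the quoted $2.50 with no minimums, discounts, or extra storage or network charges; the `rental_cost` helper and the example job sizes are hypothetical.

```python
# Sketch: estimating L40S rental cost at the quoted $2.50 per GPU-hour.
# Assumes simple per-hour billing with no minimums, discounts, or
# storage/egress fees. `rental_cost` is a hypothetical helper.

HOURLY_RATE_USD = 2.50


def rental_cost(gpus: int, hours: float, rate: float = HOURLY_RATE_USD) -> float:
    """Total cost in USD for `gpus` L40S GPUs running for `hours` hours."""
    if gpus < 0 or hours < 0:
        raise ValueError("gpus and hours must be non-negative")
    return gpus * hours * rate


# Hypothetical examples: an 8-GPU fine-tuning job that runs for 6 hours,
# and a single GPU kept online for a 30-day month.
print(f"8 GPUs x 6 h:    ${rental_cost(8, 6):,.2f}")
print(f"1 GPU x 30 days: ${rental_cost(1, 24 * 30):,.2f}")
```

Under these assumptions the 6-hour, 8-GPU job comes to $120.00 and a month of one always-on GPU to $1,800.00.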

Comparison with Previous Models

The NVIDIA L40S, slated for release by the end of 2023, represents a significant leap in GPU technology. Its predecessor, the L40, already made notable strides in the market, and the L40S aims to raise performance and versatility further. Built on the Ada Lovelace architecture, it is positioned as the most powerful universal GPU for data centers, covering needs from AI training and Large Language Models (LLMs) to a variety of other workloads.

Competitive Analysis

Performance Comparison with A100 and H100

Comparing the L40S with NVIDIA’s A100 and H100 GPUs reveals several key differences. The A100 is available in 40GB and 80GB versions, and the H100 comes in SXM, PCIe, and NVL variants, each targeting specific use cases; the L40S, by contrast, is expected to ship in a single version. This streamlined lineup suggests a focus on versatility and broad applicability.

Theoretical vs Practical Performance

The L40S’s theoretical capabilities are impressive, but its real-world performance has yet to be broadly tested. The A100 and H100, by contrast, have undergone extensive testing and proven their reliability and performance. How well the L40S’s promised features translate into practical results remains to be seen.

Key Features and Versatility

The L40S stands out for its versatility across a wide range of workloads. Its high computational power suits AI and ML training, data analytics, and advanced graphics rendering, and this versatility extends to industries such as healthcare, automotive, and financial services. Because it is a standard dual-slot PCIe card, the L40S can also be integrated into existing server systems without extensive specialized setup.

Comparative Analysis of Features

Compared with the A100 and H100, the L40S shows clear advantages in some areas and trade-offs in others:

- Computational Power: The L40S far outperforms the A100 in FP32 (91.6 versus 19.5 teraFLOPS), but it is not built for double-precision work: its FP64 throughput is only a small fraction of its FP32 rate, so the A100 and H100 remain the better choice for FP64-heavy high-performance computing. The H100 series, particularly the H100 NVL, delivers an even larger leap in computational power.

- Memory and Bandwidth: The L40S uses GDDR6 memory, whereas the A100 uses HBM2 (40GB) or HBM2e (80GB), and the H100 steps up to HBM3 in its SXM and NVL versions, providing the highest memory bandwidth of the three.

- Tensor and RT Cores: Unique to the L40S among these models is the inclusion of RT Cores, enhancing its capability for real-time ray tracing. All three models, however, include Tensor Cores, essential for AI and machine learning tasks.

- Form Factor and Thermal Design: The L40S and A100 share similarities in form factor and thermal design, but the H100 series exhibits more flexibility, particularly for power-constrained data center environments.

- Additional Features: All three models offer virtual GPU software support and secure boot features. The L40S and H100 series go further with NEBS Level 3 readiness, making them more suitable for enterprise data center operations.

Applications in Professional Settings

Data Center Optimization

The NVIDIA L40S GPU is well suited to modern data center operations. It is designed for 24/7 enterprise data center environments, with an emphasis on sustained performance, durability, and uptime. The L40S meets the latest data center standards, including Network Equipment-Building System (NEBS) Level 3 readiness, and incorporates secure boot with root of trust technology, adding a layer of security that makes it a reliable option for today’s data centers.

Use Cases in AI and Machine Learning

Generative AI and Large Language Model (LLM) Training and Inference

The L40S GPU excels in the domain of AI, particularly in generative AI and LLMs. Its fourth-generation Tensor Cores, supporting FP8, offer exceptional AI computing performance, accelerating both training and inference of cutting-edge AI models. This capability is crucial for enterprises looking to leverage AI for innovative applications and services.
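One practical consequence of FP8 support is memory footprint: storing weights in one byte per parameter instead of two roughly doubles the model size that fits in the L40S’s 48 GB. The sketch below counts weights only and treats 48 GB as 48×10^9 bytes; the KV cache, activations, and framework overhead (all ignored here) need additional memory in practice. The helper names and the 30B-parameter example model are hypothetical.

```python
# Sketch: how large a model's weights can fit in the L40S's 48 GB at different
# precisions. Counts weights only; KV cache, activations, and framework overhead
# are ignored. Treats 48 GB as 48e9 bytes. Helper names are hypothetical.

GPU_MEMORY_BYTES = 48e9
BYTES_PER_PARAM = {"FP32": 4, "FP16/BF16": 2, "FP8": 1}


def weight_bytes(num_params: float, precision: str) -> float:
    """Bytes needed to store the weights of a model with `num_params` parameters."""
    return num_params * BYTES_PER_PARAM[precision]


def max_params(precision: str, memory_bytes: float = GPU_MEMORY_BYTES) -> float:
    """Upper bound on the parameter count whose weights fit in `memory_bytes`."""
    return memory_bytes / BYTES_PER_PARAM[precision]


for precision in BYTES_PER_PARAM:
    print(f"{precision:>9}: at most ~{max_params(precision) / 1e9:.0f}B parameters")

# Hypothetical example: a 30B-parameter model
for precision in ("FP16/BF16", "FP8"):
    gb = weight_bytes(30e9, precision) / 1e9
    print(f"30B model in {precision}: {gb:.0f} GB of weights -> fits: {gb <= 48}")
```

Under these simplifying assumptions, a 30B-parameter model needs about 60 GB of weights in FP16 but about 30 GB in FP8, which is the difference between not fitting and fitting on a single card.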

Enhanced Graphics and Media Acceleration

The L40S’s higher throughput and its ability to run ray tracing and shading concurrently significantly improve ray-tracing performance. This is pivotal for applications in product design and in architecture, engineering, and construction, enabling lifelike designs with hardware-accelerated motion blur and real-time animation.

Breakthrough Multi-Workload Performance

Combining powerful AI compute with superior graphics and media acceleration, the L40S is built to handle the next generation of data center workloads. It delivers up to 5X higher inference performance than its predecessor, the NVIDIA A40, and up to 1.2X the generative AI inference performance of the NVIDIA HGX A100, making it well suited to accelerating multimodal generative AI workloads.

Advanced Rendering and Real-Time Virtual Production

With its third-generation RT Cores, the L40S offers up to twice the real-time ray-tracing performance of the previous generation. This capability is crucial for creating stunning visual content and high-fidelity creative workflows, including interactive rendering and real-time virtual production.

Supporting Industrial Digitalization

Through NVIDIA Omniverse, the L40S GPU facilitates the creation and operation of industrial digitalization applications. Its powerful RTX graphics and AI capabilities make it a robust platform for Universal Scene Description (OpenUSD)-based 3D and simulation workflows, essential in the evolving landscape of industrial digitalization.

Conclusion

The NVIDIA L40S GPU represents a significant milestone in the evolution of GPU technology, especially for professional developers and tech enthusiasts. Its introduction into the market is not just a testament to NVIDIA’s commitment to innovation, but also a signal of the rapidly advancing landscape of computational technology. The L40S, with its array of advanced features and capabilities, is set to redefine the standards for GPU performance, versatility, and efficiency.

The significance of the L40S lies in its ability to address a wide spectrum of needs across various industries. From accelerating AI and machine learning workloads to enhancing data center operations, the L40S is more than just a GPU; it’s a comprehensive solution for the most demanding computational tasks. Its adoption is poised to drive advancements in fields such as AI, 3D rendering, and industrial digitalization, further fueling the technological revolution that is shaping the future.

This article aimed to provide a deep dive into the NVIDIA L40S GPU, exploring its technical specifications, performance capabilities, market position, pricing dynamics, and applications in professional settings. As the GPU market continues to evolve, the L40S stands as a beacon of what is possible, offering a glimpse into the future of computing and its potential to transform industries.

The NVIDIA L40S GPU is not just a significant addition to NVIDIA’s lineup but a landmark development in the world of GPUs. It represents the current state of the art in GPU technology, setting new benchmarks in performance, versatility, and efficiency, and points toward the next generation of computational advances. For professional developers and tech enthusiasts, the L40S signals the exciting possibilities that lie ahead in high-performance computing.
