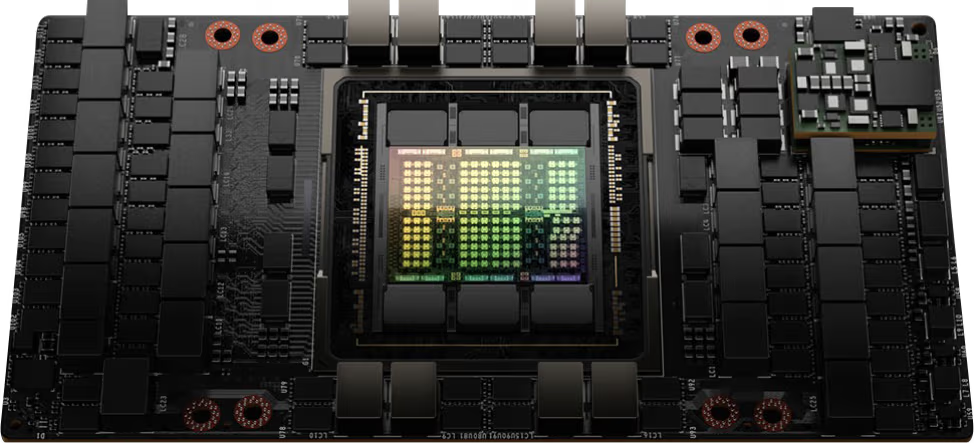

NVIDIA H100

High-performance GPU designed for LLM experimentation, production-grade inference serving, and AI model development.

Tailored for organizations deploying large-scale LLMs with industry-leading speed and cost-effective efficiency.

Powerful Features to Build & Scale AI Applications

Trusted by 1,000+ AI startups, labs and enterprises.

Industry-leading GPU for AI acceleration

Ideal use cases for the NVIDIA H100 GPU

Explore NVIDIA H100's breakthrough capabilities in production AI inference, cutting-edge deep learning development, and high-performance computing applications worldwide.

AI Inference

Optimize AI serving workloads with NVIDIA H100's streamlined inference capabilities. Revolutionary Tensor Core design and high-throughput memory deliver predictable low-latency performance for production applications handling massive user traffic.

AI Training

Supercharge AI research with H100's optimized training performance. Transformer Engine acceleration achieves faster model convergence, while high-capacity memory supports training of next-generation architectures previously beyond reach.

Finance

Financial institutions use NVIDIA H100 for algorithmic trading and risk assessment. High-frequency trading firms rely on H100's fast inference for real-time market analysis and decision-making. Investment banks use its training capabilities to develop models for portfolio optimization, fraud detection, and regulatory compliance reporting.

Instances That Scale With You

Find the perfect instance for your needs. Flexible, transparent, and backed by a powerful API to help you scale effortlessly.

| GPU model | GPUs | CPU | RAM (GB) | VRAM (GB) | On-demand pricing | Reserved pricing |
|---|---|---|---|---|---|---|
| NVIDIA H100 | 1 | 32 | 185 | 80 | $3.99/hr | from $1.65/hr/GPU |
| NVIDIA H100 | 2 | 80 | 370 | 160 | $7.98/hr | from $1.65/hr/GPU |
| NVIDIA H100 | 4 | 176 | 740 | 320 | $15.96/hr | from $1.65/hr/GPU |
| NVIDIA H100 | 8 | 176 | 1480 | 640 | $31.92/hr | from $1.65/hr/GPU |
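
On-demand pricing in the table scales linearly at $3.99 per GPU per hour, so you can estimate costs with simple arithmetic. The Python sketch below is illustrative only: the function names are hypothetical, the 730-hour month is an assumed average, and the reserved figure is the "from" price, so actual reserved rates may be higher.

```python
from decimal import Decimal

# Per-GPU hourly rates in USD, copied from the pricing table.
ON_DEMAND_PER_GPU = Decimal("3.99")
# "from" price for reserved capacity; treat it as a lower bound, not a quote.
RESERVED_FLOOR_PER_GPU = Decimal("1.65")

# Assumption: average number of hours in a month (8760 / 12).
HOURS_PER_MONTH = 730


def hourly_cost(gpus: int, rate_per_gpu: Decimal) -> Decimal:
    """Hourly cost of an instance with `gpus` GPUs at a flat per-GPU rate."""
    if gpus <= 0:
        raise ValueError("gpus must be a positive integer")
    return rate_per_gpu * gpus


def monthly_cost(gpus: int, rate_per_gpu: Decimal,
                 hours: int = HOURS_PER_MONTH) -> Decimal:
    """Cost of running the instance continuously for `hours` hours."""
    return hourly_cost(gpus, rate_per_gpu) * hours


if __name__ == "__main__":
    for gpus in (1, 2, 4, 8):
        on_demand = hourly_cost(gpus, ON_DEMAND_PER_GPU)
        reserved = hourly_cost(gpus, RESERVED_FLOOR_PER_GPU)
        print(f"{gpus} GPU(s): on-demand ${on_demand}/hr, "
              f"reserved from ${reserved}/hr")
```

`Decimal` is used instead of `float` so the computed prices match the table to the cent.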