Rent GPUs for Deep Learning and Neural Network Training

Understanding GPU Rentals and Their Significance in AI

GPUs, or Graphics Processing Units, have become indispensable in the realm of high-performance computing, especially in fields like deep learning and neural network training. The surge in demand for these powerful processing units is driven by their ability to handle complex calculations and render images or 3D graphics efficiently. This has led to the rise of GPU rental services, offering a practical and cost-effective solution for accessing high-end computing resources without a hefty upfront investment.

Deep Learning and the Role of GPUs

Deep learning, a subset of machine learning, relies on artificial neural networks, which are loosely modeled on the way neurons in the brain connect. These networks learn from large datasets, and GPUs suit that work because they run many computations in parallel. For some workloads, GPUs are reported to train models up to 250 times faster than CPUs, although the actual speedup depends heavily on the model, the data pipeline, and the hardware being compared. That speed matters for training complex neural networks because it shortens each pass over a large dataset and allows faster iteration on the model.

Cloud GPUs: Revolutionizing Computing Resources

Cloud computing has made GPUs far easier to access. Cloud-based GPUs offer several advantages over physical units that an organization buys and hosts itself:

- Scalability: Cloud GPUs can be scaled up or down to meet the evolving computational needs of an organization. This scalability ensures that resources are available as per demand, offering flexibility that physical GPUs cannot match.

- Cost-Effectiveness: Renting cloud GPUs is often more economical than purchasing physical units, particularly for intermittent workloads. Organizations can opt for a pay-as-you-go model, which benefits those who do not need constant access to high-powered GPUs.

- Space and Resource Efficiency: Unlike physical GPUs, cloud GPUs do not consume local resources or space. This allows for the offloading of intensive computational tasks to the cloud, freeing up local systems for other uses.

- Time Efficiency: Tasks that once took hours or days can now be completed in a matter of minutes with cloud GPUs. This efficiency translates to higher productivity and more time for innovation.
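The pay-as-you-go trade-off described above can be sketched with a simple break-even calculation. The purchase price and hourly rate below are hypothetical placeholders, not quotes from any provider, and the sketch ignores power, cooling, staffing, and depreciation, all of which would shift the result.

```python
# Hypothetical figures for illustration only; substitute real quotes.
PURCHASE_PRICE_USD = 30_000.0   # assumed up-front cost of one GPU server
RENTAL_RATE_USD_PER_HOUR = 2.5  # assumed pay-as-you-go hourly rate


def rental_cost(hours: float, rate: float = RENTAL_RATE_USD_PER_HOUR) -> float:
    """Total pay-as-you-go cost for a given number of GPU hours."""
    return hours * rate


def break_even_hours(purchase_price: float = PURCHASE_PRICE_USD,
                     rate: float = RENTAL_RATE_USD_PER_HOUR) -> float:
    """GPU hours at which cumulative rental cost equals the purchase price."""
    return purchase_price / rate


if __name__ == "__main__":
    print(f"Break-even after {break_even_hours():,.0f} GPU hours")
    print(f"Cost of a 200-hour training run: ${rental_cost(200):,.2f}")
```

Under these assumed numbers, a team that needs only a few hundred GPU hours stays far below the break-even point, which is the situation where renting tends to make sense.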

Emerging Frontiers in GPU Utilization for Deep Learning

Deep learning has fundamentally transformed various research fields, including those vital to drug discovery like medicinal chemistry and pharmacology. This transformation is largely attributed to the advancements in highly parallelizable GPUs and the development of GPU-enabled algorithms. These technologies have been crucial in new drug and target discoveries, aiding in processes like molecular docking and pharmacological property prediction. The role of GPUs in deep learning signifies a shift towards more efficient exploration in the vast chemical universe, potentially speeding up the discovery of novel medicines.

Originally designed for accelerating 3D graphics, GPUs quickly gained recognition in the scientific community for their powerful parallel computing capabilities. The introduction of NVIDIA’s Compute Unified Device Architecture (CUDA) in 2007 marked a pivotal moment, enabling the efficient execution of computationally intensive workloads on GPU accelerators. This development catalyzed the use of GPUs in computational chemistry, streamlining calculations like molecular mechanics and quantum Monte Carlo.

In September 2014, NVIDIA released cuDNN, a GPU-accelerated library for deep neural networks (DNNs) that speeds up both training and testing in deep learning. This release, along with the development of platforms like AMD’s ROCm, has fostered an entire ecosystem of GPU-accelerated deep learning platforms. These ecosystems support a range of machine learning libraries and reflect significant progress in GPU programming and hardware architecture.

In the realm of computer-aided drug discovery (CADD), deep learning methods running on GPUs have addressed challenges like combinatorics and optimization, enhancing applications in virtual screening, drug design, and property prediction. GPUs’ parallelization capabilities have improved the timescale and accuracy of simulations in protein and protein-ligand complexes, significantly impacting the fields of bioinformatics, cheminformatics, and chemogenomics.

Deep learning architectures for CADD have diversified to include various models:

- Multilayer Perceptrons (MLPs): These networks form the basis of DNNs and have found early success in drug discovery for QSAR studies. Modern GPUs have made MLPs cost-effective for large cheminformatics datasets.

- Convolutional Neural Networks (CNNs): Primarily used for image and video processing, CNNs operate on 2D images or 3D volumes to generate translation-invariant feature maps. They have also shown promise in biomedical text classification.

- Recurrent Neural Networks (RNNs): RNNs extend Markov chains with memory. They can learn long-range dependencies, which makes them suited to autoregressive modeling of molecular sequences.

- Variational Autoencoders (VAEs): These generative models have revolutionized cheminformatics, enabling the probabilistic learning of latent space for new molecule generation.

- Generative Adversarial Networks (GANs): GANs, with their adversarial game between generator and discriminator modules, have found utility in drug discovery to synthesize data across subproblems.

- Transformer Networks: Inspired by their success in natural language processing, transformer networks in drug discovery focus on learning long-range dependencies in sequences.

- Graph Neural Networks (GNNs): GNNs use neural message passing to generate robust representations. This is particularly useful in CADD because molecules are naturally represented as graphs of atoms and bonds.

- Reinforcement Learning: This AI branch, combined with deep learning, has found applications in de novo drug design, enabling the generation of molecules with desired properties.
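As a rough illustration of the message-passing idea behind GNNs in the list above, the sketch below runs two rounds of neighbor aggregation on a toy three-atom graph. The graph, the one-dimensional node features, and the plain sum update are all simplifying assumptions; real GNNs use learned weight matrices and nonlinearities, and run on GPU tensor libraries rather than Python dictionaries.

```python
# Toy molecular graph shaped like ethanol's heavy atoms: C - C - O.
# Node features are hypothetical one-dimensional values for illustration.
features = {0: 1.0, 1: 1.0, 2: 2.0}
neighbors = {0: [1], 1: [0, 2], 2: [1]}


def message_passing_round(features, neighbors):
    """One round: each node adds the sum of its neighbors' features to its own."""
    updated = {}
    for node, value in features.items():
        incoming = sum(features[n] for n in neighbors[node])
        updated[node] = value + incoming
    return updated


round_one = message_passing_round(features, neighbors)
round_two = message_passing_round(round_one, neighbors)
print(round_one)
print(round_two)
```

After one round each atom only reflects its direct neighbors. After two rounds the end atoms also carry information from each other, which is how stacked layers let a GNN capture larger substructures.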

These advancements in GPU-enabled deep learning are promoting open science and democratizing drug discovery. The integration of DL in CADD is enhancing data-sharing practices and encouraging the use of public cloud services to reduce costs in drug discovery processes.

Future Prospects of GPUs in Deep Learning

Deep learning, a cornerstone of modern artificial intelligence (AI), owes much of its advancement to Graphics Processing Units (GPUs). GPUs, with their unparalleled computational power and efficiency, are ideally suited for deep learning tasks, from intricate dataset processing to complex machine learning models. The transition from traditional CPU-based systems to GPU-powered ones has revolutionized applications such as object and facial recognition, offering accelerated speeds and enhanced capabilities.

The recent explosion in the use of GPUs for deep learning highlights their growing importance in technology. This surge is characterized by two significant trends:

- Cloud-Based GPU Services: The emergence of cloud GPUs has democratized access to high-powered computing resources. These cloud services allow users with basic hardware, like laptops or mobile devices, to leverage the power of GPUs on-demand. This approach is especially beneficial in sectors like finance and healthcare, where data privacy is paramount. Cloud-based GPUs also offer scalability and cost efficiency, enabling businesses to build and share machine learning models at scale.

- Dedicated Hardware for Deep Learning: Another key advancement is the development of dedicated hardware specifically for deep learning tasks. This hardware allows for simultaneous parallelized computing, significantly boosting data processing speeds. As a result, businesses are rapidly integrating GPUs into their workflows, achieving faster and more cost-effective model iterations.

Looking ahead, we can anticipate several emerging trends:

- GPU Cloud Server Solutions: High-capacity GPU farms available via the internet will provide developers with on-demand access to powerful GPU capabilities. This trend is likely to democratize deep learning further, making advanced computational resources accessible to a broader range of users and developers.

- Specialized Hardware and Software: The future will likely see the development of hardware and software tailored specifically for GPU-based deep learning applications. This specialization could optimize the efficiency of popular frameworks like TensorFlow and PyTorch, propelling deep learning capabilities to new heights.

In summary, GPUs are set to continue as a driving force in AI, shaping the future of the industry with cloud-based solutions and dedicated hardware designed for deep learning. This evolution will undoubtedly lead to more innovative and efficient AI applications, pushing the boundaries of what’s possible in artificial intelligence.

Expanding Horizons: GPUs in AI Beyond Deep Learning

The potential of Artificial Intelligence (AI) and Machine Learning (ML) to reshape industries is widely recognized, with forecasts suggesting that the global AI economy will grow to trillions of dollars. The success of modern AI and ML systems largely hinges on their ability to process vast amounts of data using GPUs. These systems, particularly in computer vision (CV), have evolved from rule-based to data-driven ML paradigms and rely heavily on GPU-based hardware to process huge volumes of data.

Why GPUs Excel in AI Tasks

GPUs, initially developed for the gaming industry, are adept at handling parallel computations, crucial for AI tasks like linear algebra operations. Their architecture is especially suitable for the intensive computations of deep neural networks, which involve matrix multiplications and vector additions, making GPUs the ideal hardware for these tasks.
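To make the matrix-multiplication-plus-vector-addition pattern concrete, here is a minimal sketch of a single fully connected layer written with plain Python lists. The function name `dense_layer` and the sample values are illustrative assumptions; in practice this operation would be handled by a GPU-backed library rather than a Python loop.

```python
def dense_layer(inputs, weights, bias):
    """Compute y = W x + b for one input vector using plain Python lists."""
    outputs = []
    for row, b in zip(weights, bias):
        # Each output element is an independent dot product, so a GPU can
        # compute all rows at the same time instead of one after another.
        outputs.append(sum(w * x for w, x in zip(row, inputs)) + b)
    return outputs


x = [1.0, 2.0, 3.0]
W = [[0.5, 0.0, -1.0],
     [1.0, 1.0, 1.0]]
b = [0.5, -1.0]
y = dense_layer(x, W, b)
print(y)
```

Because no output row depends on any other, the work splits cleanly across thousands of GPU threads, which is the property the paragraph above refers to.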

Diverse Applications of GPUs in AI

- Autonomous Driving: Autonomous vehicles employ an array of sensors to gather data, requiring sophisticated AI for object detection, classification, segmentation, and motion detection. GPUs are pivotal in processing this data rapidly, enabling the fast, high-confidence decisions that autonomous driving depends on.

- Healthcare and Medical Imaging: In medical imaging, GPUs aid in analyzing vast datasets of images for disease identification. The ability of GPUs to process these large datasets efficiently makes them invaluable in healthcare, especially when the training data is sparse, like in rare disease identification.

- Disease Research and Drug Discovery: GPUs have played a significant role in computational tasks like protein structure prediction, which are fundamental in disease research and vaccine development. A notable example is AlphaFold by DeepMind, which predicts protein structures from amino acid sequences, a computation that would be far slower without accelerator hardware.

- Environmental and Climate Science: Climate modeling and weather prediction, crucial in understanding and combating climate change, are incredibly data-intensive tasks. GPUs contribute significantly to processing the vast amounts of data required for these models.

- Smart Manufacturing: In the manufacturing sector, AI and ML have revolutionized the management of data from various sources like workers, machines, and logistics. GPUs are instrumental in processing this data for applications in design, quality control, supply chain management, and predictive maintenance.

In summary, GPUs are extending their influence far beyond traditional deep learning tasks, playing a critical role in various AI applications across industries. Their ability to handle large-scale, parallel computations makes them indispensable in the rapidly evolving landscape of AI and ML.

GPUs Driving Innovations Beyond Deep Learning

The application of GPUs in AI extends beyond deep learning into various fields, changing the way we approach complex problems and process massive datasets. Two notable areas where GPUs are making significant contributions are environmental and climate science, and smart manufacturing.

- AI and ML in Environmental and Climate Science: Climate change presents a profound challenge, requiring immense scientific data, high-fidelity visualization, and robust predictive models. Weather prediction and climate modeling are critical in the fight against climate change, relying on comprehensive models like the Weather Research and Forecasting (WRF) system in the U.S. These models, dealing with complex interrelationships of meteorological variables, are intractable for conventional hardware. GPUs are essential in processing these large-scale, intricate models, enabling more accurate and efficient climate and weather predictions.

- AI and ML in Smart Manufacturing: The manufacturing industry has undergone a revolution with the advent of computing and information technology. The efficient movement of materials and products, controlled precisely in conjunction with various processes, is central to modern manufacturing systems. The exponential decrease in the cost and complexity of computing and storage has led to a surge in data generation. AI and ML, powered by GPUs, are rescuing manufacturing organizations from this data deluge, aiding in design, quality control, machine optimization, supply chain management, and predictive maintenance. Deep learning technologies are being utilized across these domains, showcasing the versatility and power of GPU-based solutions in transforming traditional industries into smart manufacturing powerhouses.

In summary, GPUs are not just limited to deep learning applications but are also pivotal in tackling global challenges like climate change and revolutionizing industries like manufacturing. Their ability to handle complex computations and large datasets efficiently makes them an invaluable asset in a wide range of AI and ML applications.

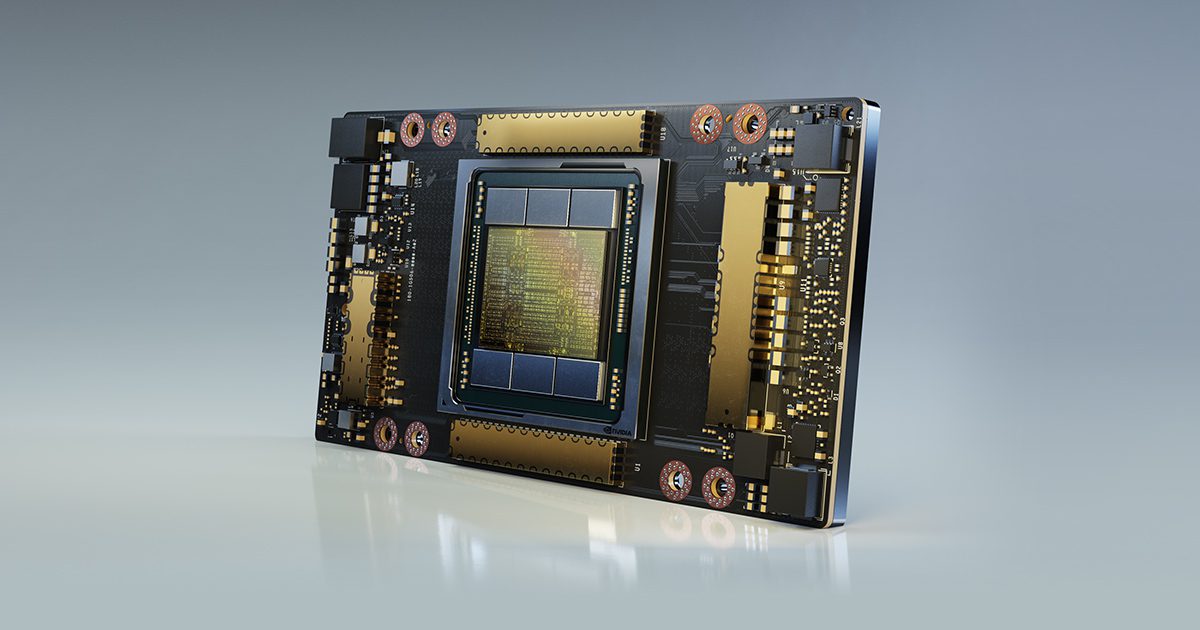

The Evolution and Impact of Nvidia’s H200 GPU in AI

The unveiling of Nvidia’s H200 GPU marks a significant milestone in the field of artificial intelligence (AI) and computing. This GPU is a considerable upgrade from its predecessor, the H100, which reportedly played a pivotal role in training sophisticated AI models like OpenAI’s GPT-4. The H200 is more than a technological advancement; it caters to the escalating demands of a booming AI industry across diverse sectors, from large corporations to government agencies.

- Technological Leap from H100 to H200: The H100 GPU was a powerful tool in AI, reportedly used in developing complex models like GPT-4. The H200 is a substantial step forward, offering enhanced power, efficiency, and capabilities. A notable feature of the H200 is its 141 GB of next-generation HBM3e memory, which significantly improves the GPU’s performance, especially in the inference tasks that AI applications depend on. This advancement indicates a shift from incremental improvements to a transformative enhancement in AI capabilities.

- Financial and Market Implications: The launch of the H200 GPU has positively impacted Nvidia’s financial standing and market perception. In 2023 alone, Nvidia’s stock surged by over 230%, reflecting the market’s confidence in the company’s AI technology. The H200 GPU’s introduction, with its enhanced capabilities, appeals to a broad spectrum of customers and positions Nvidia competitively against other industry players like AMD and its MI300X GPU. This competition spans beyond just power and memory capacity, encompassing energy efficiency, cost-effectiveness, and adaptability to various AI tasks. The H200’s launch signifies a long-term trend in the tech industry towards increasing investment in AI and machine learning technologies.
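To put the H200’s 141 GB memory capacity in context, the following back-of-the-envelope sketch estimates how many model parameters could fit on a single card at common numeric precisions. It assumes decimal gigabytes and counts weights only; activations, the key-value cache used during inference, and framework overhead all reduce the usable figure, so these are upper bounds rather than deployment guidance.

```python
H200_MEMORY_GB = 141          # capacity stated in the text
BYTES_PER_GB = 10**9          # assumption: decimal gigabytes
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def max_params_billions(memory_gb: int, precision: str) -> float:
    """Upper bound on weights that fit in memory, in billions of parameters."""
    return memory_gb * BYTES_PER_GB / BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    billions = max_params_billions(H200_MEMORY_GB, precision)
    print(f"{precision}: up to {billions} billion parameters (weights only)")
```

The estimate shows why memory capacity matters for inference: halving the bytes per parameter doubles the size of the model whose weights fit on one GPU.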

In essence, Nvidia’s H200 GPU is not just responding to current AI demands but is actively shaping the future of AI development. Its technical prowess and market impact underscore Nvidia’s commitment to advancing AI technology, redefining computational power and efficiency in a rapidly evolving digital era.

Latest Advancements in AI Using GPU Technology

The world of AI and GPU technology is witnessing a surge of advancements, particularly from NVIDIA, which is pushing the boundaries of generative AI and neural graphics. Here are some of the groundbreaking developments:

- Generative AI Models for Customized Imagery: NVIDIA’s research in generative AI models that turn text into personalized images is revolutionizing the way artists and developers create visual content. These models are capable of generating an almost infinite array of visuals from simple text prompts, enhancing creativity in fields like film, video games, and virtual reality.

- Inverse Rendering and 3D Object Transformation: NVIDIA has developed techniques to transform 2D images and videos into 3D objects, significantly accelerating the rendering process in virtual environments. This technology is particularly beneficial for creators who need to populate virtual worlds with realistic 3D models and characters.

- Real-Time 3D Avatar Creation: Collaborating with researchers at the University of California, San Diego, NVIDIA introduced a technology that can generate photorealistic 3D head-and-shoulders models from a single 2D portrait. This breakthrough makes 3D avatar creation and video conferencing more accessible and realistic, and the AI model runs in real time on a consumer desktop.

- Lifelike Motion for 3D Characters: In a collaboration with Stanford University, NVIDIA researchers developed an AI system that can learn a range of skills from 2D video recordings and apply this motion to 3D characters. This system can accurately simulate complex movements, such as those in tennis, providing realistic motion without expensive motion-capture data.

- High-Resolution Real-Time Hair Simulation: NVIDIA has introduced a method that uses neural physics to simulate tens of thousands of hairs in high resolution and in real time. The technique is optimized for modern GPUs and cuts the time needed for full-scale hair simulations from days to hours, improving the quality of hair animation for virtual characters.

- Neural Rendering for Enhanced Graphics: NVIDIA’s research in neural rendering is bringing film-quality detail to real-time graphics, revolutionizing the gaming and digital twin industries. The company’s innovations in programmable shading, combined with AI models, allow developers to create more realistic textures and materials in real-time graphics applications.

- AI-Enabled Data Compression: NVIDIA’s NeuralVDB, an AI-enabled data compression technique, drastically reduces the memory needed to represent volumetric data like smoke, fire, clouds, and water. This development is a game-changer for rendering complex environmental effects in virtual environments.

- Advanced Neural Materials Research: NVIDIA’s ongoing research in neural materials is simplifying the rendering of photorealistic, many-layered materials. This research is enabling up to 10x faster shading, making the process of creating complex material textures more efficient and realistic.

These advancements demonstrate NVIDIA’s commitment to enhancing AI capabilities using GPU technology, pushing the limits of what’s possible in virtual environments and AI-powered applications.


Jun 17,2024  By Julien Gauthier
